Best Practices for Microservices Architecture

Transitioning from a monolithic to a microservices architecture is a strategic move embraced by many enterprises, but it’s far from straightforward — especially for large-scale apps.

During development, you need to decompose the system so that your services aren’t too small, too large, too coupled, or too complex. In short, “too much” of anything is what you should avoid. If you don’t keep these factors in check, you could end up with a tangled mess of problems that introduces scalability issues and drags down performance, the very things microservices architecture is supposed to improve.

This is why implementing microservices calls for professional microservices development services, or at least solid guidance from an experienced partner. Because we juggle these complexities daily in client projects, our team at Expert Soft is well versed in this architectural approach and can provide both: development services and advice.

Today, we’re focusing on the latter, offering 10 best practices for implementing microservices architecture that will set you on the right path. This article is a collaborative effort by 3 engineers from the Expert Soft team, drawing on insights from years of experience with diverse applications.

10 Best Practices for Microservices Development

What are the best practices to design microservices architecture? Well, that depends on who you ask. Is it a developer, an architect, or a project manager? Each perspective can bring a different angle to the table depending on practice and expertise.

But since we’re developers, we spent 15 minutes putting together a list of 10 best practices focused on microservices design and development:

- Single Responsibility Principle

- Separate data spaces

- Separate authentication

- API gateway

- No hard-coding values

- CI/CD, containerization, orchestration

- Independent deployment

- Fixed APIs

- Defined technologies

- Microservices templates

Now, let’s analyze each best practice for microservices development in more detail.

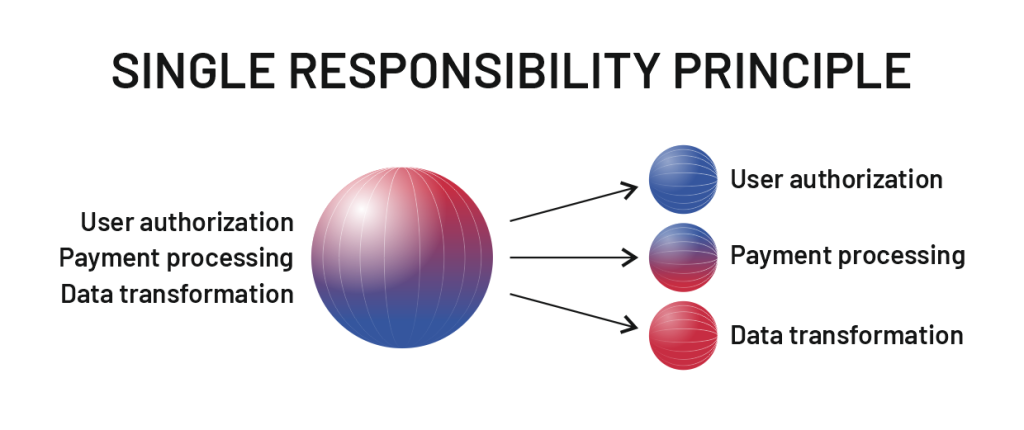

Adhere to the Single Responsibility Principle

If there’s one thing you need to know about designing microservices, it’s the importance of the Single Responsibility Principle (SRP). Rooted in object-oriented programming, where it states that a class should have only one reason to change, SRP applied to microservices means that each service should focus on only one function.

To identify microservice boundaries, you might follow either the “Business Capability” approach or the “Domain Context” approach (domain-driven design, or DDD). In the first case, each microservice covers a single, independent part of the business logic. In the second case, you define subdomain boundaries so that each microservice covers a single context without overlapping with other microservices.

From initial architecture planning through development to final app deployment, SRP should be your guiding star. For example, having distinct services for user authorization, payment processing, and data transformation ensures clarity and reduces complexity. Each service does its task without stepping on the others’ toes.

In contrast, combining multiple functions into a single service, such as a search service that also handles user authentication and payments, complicates development and management and increases risks. This approach can create bulky services that undermine the benefits of microservices architecture, such as scaling and fault isolation. If a multi-functional service fails or requires maintenance, it can disrupt large parts of your web application.

One last point before moving on to the next best practice: SRP enhances team autonomy, reducing the likelihood of code conflicts when multiple developers work on intersecting parts of the solution architecture. By keeping services focused, teams can work more independently and efficiently.

Use separate data spaces for each microservice

This is the second practice on our list that we want you to remember for microservices architecture.

To avoid data inconsistencies and chaos, each microservice should have its own data space that only it modifies. This doesn’t necessarily mean running a separate database instance for each service. Instead, it can mean logically separating the data within a shared database system.

For example, you might use a single MongoDB or MySQL server where each microservice has its own collection (or, in MySQL, its own schema or tables). Several services can read shared information, but writing or modifying data in a collection should be the responsibility of just one service. This reduces complexity and potential conflicts, especially when different teams make changes to different microservices.
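To make the “many readers, one writer” rule concrete, here is a minimal sketch that uses an in-memory stand-in for the shared database server. The `OwnedStore` class and the service and collection names are hypothetical; in a real deployment you would typically enforce ownership with database users and grants rather than application code.

```python
from __future__ import annotations

from collections import defaultdict


class OwnershipError(PermissionError):
    """Raised when a service tries to write to a collection it does not own."""


class OwnedStore:
    """Hypothetical in-memory stand-in for a shared database server.

    Every collection has exactly one owning service that may write to it;
    any service may read from any collection.
    """

    def __init__(self, owners: dict[str, str]):
        # Maps collection name -> the single service allowed to write to it.
        self._owners = dict(owners)
        self._data: dict[str, dict[str, dict]] = defaultdict(dict)

    def write(self, service: str, collection: str, key: str, doc: dict) -> None:
        owner = self._owners.get(collection)
        if owner != service:
            raise OwnershipError(
                f"{service!r} cannot write to {collection!r} (owned by {owner!r})"
            )
        self._data[collection][key] = doc

    def read(self, collection: str, key: str) -> dict | None:
        # Reads are open to every service.
        return self._data[collection].get(key)


store = OwnedStore({"orders": "order-service", "users": "user-service"})
store.write("order-service", "orders", "o-1", {"user": "u-7", "total": 42})
print(store.read("orders", "o-1"))  # any service may read this

try:
    store.write("search-service", "orders", "o-1", {"total": 0})
except OwnershipError as exc:
    print("rejected:", exc)
```

Because only `order-service` can change the `orders` collection, a schema change there needs coordination with just one team, while readers only have to tolerate the new shape.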

When multiple microservices write to the same data, data migrations and schema changes become harder. Teams need extra coordination, and if changes aren’t carefully synchronized, issues and even data loss can follow.

While this rule is important, in practice it can sometimes be bent due to project specifics. For instance, two services owned by different departments or offices might both need to write to the same database.

However, we recommend bending this rule only in exceptional cases and carefully evaluating the consequences of your choice, including the additional resources needed to keep the platform resilient and effective.

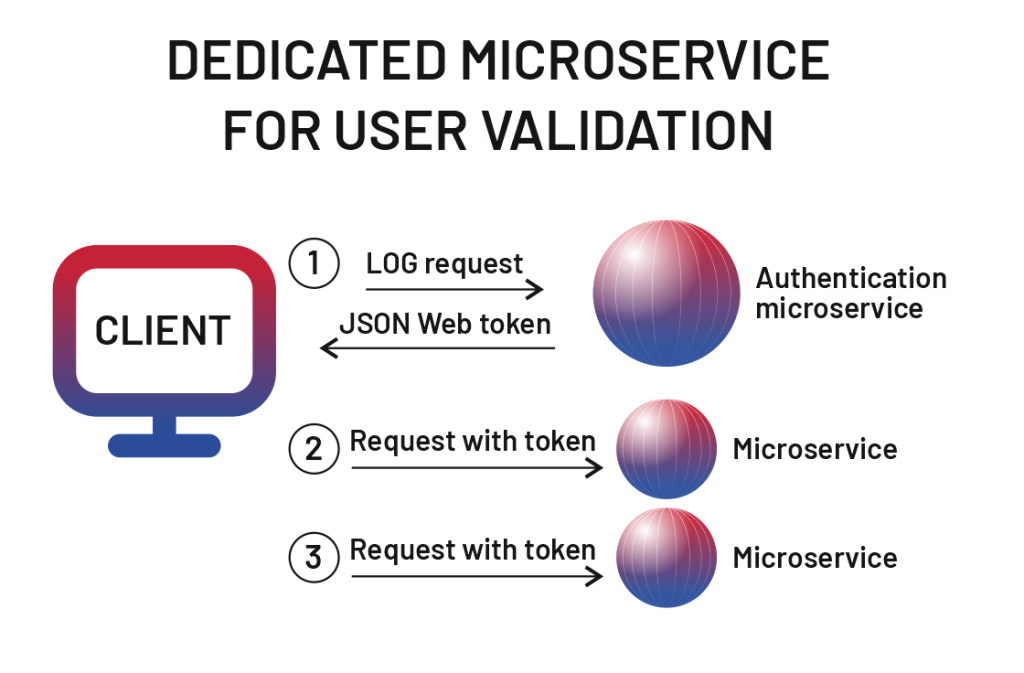

Assign a dedicated microservice for user validation

With distributed access to data, you need to establish protection and ensure that specific information is available only to trusted users, which requires proper user validation. For this, we recommend developing a dedicated authentication microservice as a good practice in your microservices architecture.

Here’s how it typically works. When a user logs in, their request is sent to the authentication microservice, which verifies the user’s credentials and issues a JSON Web Token (JWT). The client then attaches this token to all subsequent requests to other microservices, ensuring that each interaction is authenticated and authorized.

This approach significantly reduces the likelihood of unauthorized access as every request must present a valid token, with all interactions being recorded through detailed logging. Internal services in your architecture that don’t interact with external clients might not need token verification, but any client-facing service should strictly follow this method to maintain the strong security of the app.

Beyond enhancing protection, this approach also boosts solution performance. Instead of each microservice running its own credential checks for every user, services only need to verify the token’s signature and expiry, which speeds up processing and reduces operational overhead.

For advanced protection within the microservices architecture, you can also incorporate OAuth (Open Authorization), which allows users to grant an app limited access to their data without exposing their credentials.

By integrating OAuth, microservices can effectively manage user permissions and secure transactions, ensuring that only authorized users can perform certain actions. This approach also supports robust logging and monitoring capabilities, providing insights into user activities over time.
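In OAuth 2.0, limited access is commonly expressed as scopes carried in the access token. The sketch below covers only the resource-service side: a decorator that rejects calls whose already-verified token claims lack a required scope. It assumes the common convention of a space-delimited `scope` claim; the decorator, handler, and scope names are hypothetical.

```python
from functools import wraps


class Forbidden(Exception):
    """Raised when a verified token lacks the scope an action requires."""


def require_scope(scope: str):
    """Decorator for handlers that receive already-verified token claims."""

    def decorator(handler):
        @wraps(handler)
        def wrapper(claims: dict, *args, **kwargs):
            granted = set(claims.get("scope", "").split())
            if scope not in granted:
                raise Forbidden(f"missing scope {scope!r}")
            return handler(claims, *args, **kwargs)

        return wrapper

    return decorator


@require_scope("payments:write")
def refund(claims: dict, order_id: str) -> str:
    # Hypothetical handler inside a payment service.
    return f"refund issued for {order_id} by {claims['sub']}"


print(refund({"sub": "u-1", "scope": "orders:read payments:write"}, "o-9"))
# refund issued for o-9 by u-1
```

Keeping the check declarative at each endpoint also gives a natural place to log who attempted which action.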

Use an API Gateway

A centralized API Gateway acts as a single entry point for all client requests: it receives requests (typically over HTTP), routes them to the appropriate microservices within the architecture, and monitors the interactions, keeping communication across the system efficient and secure.

For example, when a front-end application needs to authenticate users or perform searches, it sends requests to the API Gateway. The Gateway then takes charge and routes these requests to the relevant microservices. This setup abstracts the complexity of managing multiple microservice endpoints and enhances security.

It’s beneficial in several ways:

- Simplified communication. Clients no longer need to worry about the specific locations of each microservice in the architecture. Instead, they communicate with the Gateway using consistent endpoints, which makes interactions seamless and straightforward.

- Reduced coupling. Because the Gateway isolates clients from the details of microservices, changes to individual services, such as address or port modifications, won’t disrupt the client’s application. The Gateway absorbs these changes, so clients keep communicating with it as if nothing had changed.

- Improved system resilience. The less the client knows about individual microservices, the less likely it is that a failure in one service will cause issues on the client side.
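The routing step itself can be sketched as a longest-prefix match from public paths to internal service addresses. The route table and hostnames below are hypothetical, and a real gateway would also forward the request, enforce authentication, and collect metrics.

```python
# Hypothetical route table: public path prefix -> internal service base URL.
ROUTES = {
    "/auth/": "http://auth-service:8080/",
    "/search/": "http://search-service:8080/",
    "/search/suggest/": "http://suggest-service:8080/",
}


def resolve(path: str, routes: dict[str, str] = ROUTES) -> str:
    """Map a client-facing path to the internal URL that should handle it."""
    # Check longer prefixes first so more specific routes win.
    for prefix in sorted(routes, key=len, reverse=True):
        if path.startswith(prefix):
            return routes[prefix] + path[len(prefix):]
    raise LookupError(f"no route for {path!r}")


print(resolve("/auth/login"))          # http://auth-service:8080/login
print(resolve("/search/suggest/lap"))  # http://suggest-service:8080/lap
```

This is where the reduced coupling comes from: if the auth service moves to a new host or port, only the route table changes, and clients keep calling `/auth/login`.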

As a variant of the API Gateway pattern, you can also consider Back-end for Front-end (BFF). The BFF approach involves creating a dedicated back-end service for each front-end interface, which tailors the data and interactions to that client’s domain and user experience.

This changes the communication pattern: instead of calling a single server-side API, each client type calls its own dedicated gateway. By creating specialized APIs that meet the needs of each client, you get rid of the unnecessary overhead of a single, one-size-fits-all API.
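As a minimal sketch, two BFFs can share one downstream product service while shaping its data differently: the web BFF returns the rich record plus a computed field, and the mobile BFF trims the payload to what a small screen renders. The product service, function names, and fields are illustrative assumptions.

```python
def product_service(product_id: str) -> dict:
    # Hypothetical downstream microservice returning the full product record.
    return {
        "id": product_id,
        "name": "Trail Shoe",
        "description": "Lightweight shoe for mixed terrain.",
        "price_cents": 12999,
        "images": ["front.jpg", "side.jpg", "sole.jpg"],
        "reviews": [{"rating": 5}, {"rating": 4}],
    }


def web_bff_product(product_id: str) -> dict:
    """Desktop web gets the full record plus a computed average rating."""
    product = product_service(product_id)
    ratings = [review["rating"] for review in product["reviews"]]
    average = sum(ratings) / len(ratings) if ratings else None
    return {**product, "avg_rating": average}


def mobile_bff_product(product_id: str) -> dict:
    """Mobile gets only the fields its screen renders, keeping payloads small."""
    product = product_service(product_id)
    return {
        "id": product["id"],
        "name": product["name"],
        "price_cents": product["price_cents"],
        "thumbnail": product["images"][0] if product["images"] else None,
    }
```

Each front-end team can evolve its own BFF without negotiating a shared response format with every other client.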

Use config files instead of hard-coding values

Hard-coding values, such as service addresses, database URLs, or other variables directly into your code, can lead to headaches down the line. When these values need to change, you’re stuck modifying the code and redeploying the service, which is both time-consuming and prone to errors.

Using configuration files is an effective microservices best practice to separate these variable elements from your codebase. This separation means that when changes occur, for example, updating a database address, you simply tweak the configuration file rather than diving into the codebase and redeploying the entire service. It’s faster, cleaner, and a whole lot less error-prone than altering hard-coded values.
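As an illustration, a service might read its settings from a JSON file at startup, with environment variables able to override individual values per deployment. The file name `config.json`, the keys, and the `APP_` prefix are assumptions made for this sketch.

```python
from __future__ import annotations

import json
import os
from pathlib import Path

# Hypothetical defaults; real values come from the file or the environment.
DEFAULTS = {
    "db_url": "mysql://localhost:3306/orders",
    "payment_service_url": "http://payments:8080",
}


def load_config(path: str | os.PathLike = "config.json", env_prefix: str = "APP_") -> dict:
    """Merge settings with increasing priority: defaults, config file, environment."""
    config = dict(DEFAULTS)
    file = Path(path)
    if file.exists():
        config.update(json.loads(file.read_text(encoding="utf-8")))
    # For example, APP_DB_URL overrides "db_url" without touching file or code.
    for key in list(config):
        env_value = os.environ.get(env_prefix + key.upper())
        if env_value is not None:
            config[key] = env_value
    return config
```

Updating the database address then means editing `config.json` or setting `APP_DB_URL`, with no code change.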

And the benefits don’t stop there:

- On-the-fly adjustments. For some services, you can implement mechanisms that periodically check configuration files, so changes are detected and applied without restarting the service. This isn’t feasible for every service, since some read their configuration only at startup or bake it in at build time, but those that support it benefit from this dynamic approach.

- Automated deployment pipelines. Configuration files fit well into CI/CD pipelines, allowing configuration updates to be automated and tracked. Combined with periodic checks for new configuration, this reduces downtime and manual intervention.
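For services that can reload configuration on the fly, one simple technique is to poll the file’s modification time and reload only when it changes. The `ReloadingConfig` class is a hypothetical sketch; a production service would usually call `maybe_reload()` from a timer or background thread and validate new values before using them.

```python
import json
import os


class ReloadingConfig:
    """Re-reads a JSON config file whenever its modification time changes."""

    def __init__(self, path: str):
        self.path = path
        self._mtime_ns = None
        self.values: dict = {}
        self.maybe_reload()

    def maybe_reload(self) -> bool:
        """Reload if the file changed since the last check; return True if it did."""
        mtime_ns = os.stat(self.path).st_mtime_ns
        if mtime_ns == self._mtime_ns:
            return False
        with open(self.path, encoding="utf-8") as f:
            # json.load raises on invalid JSON, so the old values stay in place.
            new_values = json.load(f)
        self.values = new_values
        self._mtime_ns = mtime_ns
        return True

    def get(self, key, default=None):
        return self.values.get(key, default)
```

Polling is crude but portable; file-system watchers or a configuration service can replace it without changing how the rest of the service reads settings.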

Benefit from CI/CD, containerization, and orchestration

These practices pay off especially in high-load enterprise projects. If you have a basic platform with a relatively small number of microservices, these microservices DevOps practices might unnecessarily complicate the deployment process.

Established CI/CD pipelines, supported by powerful tools, automate the build, testing, and deployment processes, ensuring rapid and reliable software delivery. Combined with containerization and orchestration, they streamline the entire workflow.

Containerization involves using tools like Docker to package microservices with all their dependencies into lightweight, portable containers. This consistency across environments ensures that microservices run reliably no matter where they are deployed.

Cloud platforms, such as AWS, Azure, and Google Cloud, offer robust services for container orchestration and CI/CD pipelines, enabling seamless deployment and management of microservices across distributed environments.

Orchestration is the next step: it manages these containers, handling service discovery, failure management, and scaling. Kubernetes, a leading orchestration platform, uses pods as its basic building blocks, each containing one or more containers that encapsulate microservices and their resources. By managing pods, Kubernetes automates the deployment, scaling, and monitoring of containerized applications.

For example, Kubernetes can automatically scale services out as load grows, using its Horizontal Pod Autoscaler.
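The Kubernetes documentation describes the Horizontal Pod Autoscaler’s core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The function below reproduces only that arithmetic plus min/max clamping, to show how growing load turns into more pods; the real controller adds stabilization windows and other behaviors not modeled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the HPA's documented scaling ratio."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 pods averaging 90% CPU against a 60% target: ceil(3 * 1.5) = 5 pods.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```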

The well-established DevOps model not only simplifies microservices architecture management and deployment but also monitoring and observability. It allows businesses to diagnose issues faster and simplify troubleshooting, increasing platform reliability and reducing downtime.

Ultimately, the CI/CD, containerization, and orchestration trio enables efficient software delivery, consistent environments, and effective monitoring, making it indispensable for large-scale microservices architecture implementations.

Deploy each microservice independently

As microservices are independent parts of the system, you can greatly benefit from deploying them separately. This approach allows teams to deploy changes as needed, offering greater flexibility and control over the deployment process.

The key advantage here is that it seamlessly integrates with CI/CD pipelines, enabling each service to be built, tested, and deployed on its own schedule. This independence means teams can release updates to a specific microservice without impacting others, fostering a more agile and responsive development environment.

What you should pay attention to is maintaining backward compatibility, so teams can roll out new features without breaking existing integrations. This approach fosters a stable and predictable infrastructure, enabling businesses to innovate rapidly while minimizing the risk of introducing regressions or compatibility issues.
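One common way to keep changes backward compatible is the “tolerant reader” approach: producers only add optional fields, and consumers ignore fields they don’t recognize while supplying defaults for missing ones. The sketch below assumes a hypothetical `OrderCreated` payload that gained a `currency` field in a newer release.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OrderCreated:
    order_id: str
    total_cents: int
    currency: str = "USD"  # added in v2; the default keeps v1 payloads valid

    @classmethod
    def from_payload(cls, payload: dict) -> "OrderCreated":
        known = {f.name for f in fields(cls)}
        # Ignore unknown keys so newer producers don't break this consumer.
        return cls(**{k: v for k, v in payload.items() if k in known})


v1 = OrderCreated.from_payload({"order_id": "o-1", "total_cents": 500})
v3 = OrderCreated.from_payload(
    {"order_id": "o-2", "total_cents": 900, "currency": "EUR", "gift_wrap": True}
)
print(v1.currency, v3.currency)  # USD EUR
```

With both rules in place, the producer and its consumers can be deployed in any order.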

To optimize communication and coordination among independently deployed services, you can consider an event-driven microservices approach. It enables asynchronous communication, allowing services to react to events as they occur.

By using a message broker or event-streaming platform, such as RabbitMQ or Apache Kafka, microservices can efficiently publish and consume events and support scalable web applications, complementing the infrastructure provided by containerization and orchestration.
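To show the shape of event-driven communication without tying it to a particular broker’s client API, here is an in-memory publish/subscribe bus. It is a stand-in for Kafka topics or RabbitMQ exchanges, not their API: it delivers synchronously within one process, whereas real brokers deliver asynchronously, persist messages, and retry. Topic and handler names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for a message broker (synchronous, single process)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The publisher doesn't know who, if anyone, consumes the event.
        for handler in self._subscribers[topic]:
            handler(event)


bus = EventBus()
shipped: list[str] = []
emails: list[str] = []

# Two independently deployed services react to the same event.
bus.subscribe("order.paid", lambda e: shipped.append(e["order_id"]))
bus.subscribe("order.paid", lambda e: emails.append(f"receipt for {e['order_id']}"))

bus.publish("order.paid", {"order_id": "o-17"})
print(shipped, emails)  # ['o-17'] ['receipt for o-17']
```

Adding a new consumer means subscribing to the topic; the publishing service doesn’t change or redeploy.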

Speed up parallel programming with fixed APIs

This best practice both speeds up development cycles and minimizes potential errors.

In terms of error reduction, fixed RESTful APIs act as a reliable contract between microservices, ensuring all services know what to expect when interacting with each other. Each microservice within the architecture communicates using predefined endpoints and request structures, leaving no ambiguity about the data being sent or received.

This standardized approach means teams don’t have to constantly check in with each other to clarify API behavior, cutting down on misunderstandings and potential errors.

On the development side, fixed APIs let teams start building against an API even before it is fully implemented. Teams can develop in parallel, confident that the API structure will stay consistent. This prevents bottlenecks and keeps everything running smoothly, especially in large projects with multiple teams.
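Here is a sketch of how an agreed contract enables parallel work. The interface and payload types are fixed up front, the consuming team codes and tests against a stub, and the real implementation is swapped in later without changing the consumer. `PricingApi`, `PriceQuote`, and the stub are hypothetical names. In a REST setting, the same contract would typically live in an OpenAPI document.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class PriceQuote:
    sku: str
    amount_cents: int
    currency: str


class PricingApi(Protocol):
    """The fixed contract both teams agreed on before implementation started."""

    def get_price(self, sku: str) -> PriceQuote: ...


class StubPricingApi:
    """Returns canned data so the checkout team isn't blocked by the pricing team."""

    def get_price(self, sku: str) -> PriceQuote:
        return PriceQuote(sku=sku, amount_cents=1000, currency="USD")


def checkout_total(api: PricingApi, skus: list[str]) -> int:
    # Consumer code depends only on the contract, not on who implements it.
    return sum(api.get_price(sku).amount_cents for sku in skus)


print(checkout_total(StubPricingApi(), ["A-1", "B-2", "C-3"]))  # 3000
```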

Additionally, fixed APIs help maintain stability during maintenance. Immutable APIs minimize the risk of errors from unexpected changes. For example, if a developer alters an endpoint to include additional data, this can disrupt other microservices within the architecture that aren’t prepared for such changes. With a fixed API, such issues are avoided, as any modification requires a thorough review and coordination process.

Avoid a technology zoo

One of the benefits of microservices architecture is the flexibility to choose different technologies for different services since they operate independently. However, it’s wise not to introduce too many technologies within a single project.

First, a consistent set of technologies makes it easier for developers to change a microservice outside their area of responsibility, because it’s written in the same programming language as their own services.

Additionally, if there’s a team reshuffle, or a key developer responsible for a microservice built on a niche technology leaves, finding a replacement with the right skills and experience can be challenging and costly.

So, we recommend choosing a few core technologies for your architecture and sticking with them throughout the development process. For example, you might opt for Java with Spring Boot and Node.js. But again, it all depends on the application’s requirements and the specifics of the architecture.

Use microservices templates

This best practice comes from our own experience rather than a common development standard, and it’s especially useful when building a microservices architecture from scratch or expanding an existing one.

Templates provide a consistent structure for microservices, ensuring that new services in the architecture align with established standards. They also speed up development by giving developers a clear blueprint, so they don’t have to reinvent the wheel every time they create a new microservice.

The key elements of a microservice template may include:

- a well-defined folder structure that specifies where each component belongs;

- standardized commands for launching services, ensuring all developers use the same processes;

- minimal working functionality that allows developers to launch the service and perform basic operations, such as sending a request and receiving a response.
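The “minimal working functionality” element might look like the following sketch: a standard-library WSGI app with a health check and an echo endpoint that every new service starts from. The routes and the `make_app` factory are hypothetical template choices; a team on Spring Boot or Node.js would encode the same idea in its own stack.

```python
import json


def make_app(service_name: str):
    """Return a minimal WSGI app that every new service can start from."""

    def app(environ, start_response):
        path = environ.get("PATH_INFO", "/")
        if path == "/health":
            status, body = "200 OK", {"service": service_name, "status": "ok"}
        elif path == "/echo":
            # Simple request/response round trip for smoke-testing a new service.
            status, body = "200 OK", {"query": environ.get("QUERY_STRING", "")}
        else:
            status, body = "404 Not Found", {"error": f"no route for {path}"}
        payload = json.dumps(body).encode("utf-8")
        start_response(
            status,
            [("Content-Type", "application/json"), ("Content-Length", str(len(payload)))],
        )
        return [payload]

    return app
```

For local runs, the template’s standard launch command could serve this app with `wsgiref.simple_server.make_server`, so every developer starts services the same way.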

A full-stack developer from the Expert Soft team says: "It’s not an obligatory thing, and it relates more to streamlining processes than to microservices architecture development itself. When I worked on a fintech application, we had to create additional microservices alongside the existing ones, and it was quite a challenge to maintain a similar service structure across different teams. Looking back, I realize that having established microservice templates would have made the process much easier and faster."

Even without microservice templates, our Expert Soft team successfully integrated new microservices into the application, enhancing the system’s scalability. This was a real upgrade for the top credit rating agency we were working with. Given the sheer volume of information they provided, it was crucial to process and deliver data efficiently, especially when multiple clients were accessing the system simultaneously.

The shift to microservices also accelerated release cycles, allowing teams to focus solely on their areas of responsibility without being held back by other teams or their release schedules.

Summing Up

As you can see, many best practices for microservices aren’t groundbreaking or revolutionary. They’re the tried-and-true principles of creating quality, clean code and architecture that large apps should adhere to. This universality is why, even though we work on diverse business applications at Expert Soft, many of our practices stay the same: they come straight from our project experience.

This alignment reflects the strategic approach that our specialists at Expert Soft bring to every project. Our expertise enables us to guide organizations towards building efficient, scalable architectures and robust e-commerce systems.

So, whether you’re just starting with microservices or looking to refine your existing architecture, embracing these principles will set you on the path to success.

Any questions remaining? Feel free to contact us; we would be happy to discuss them!

Andreas Kozachenko is the Head of Technology Strategy and Solutions at Expert Soft, responsible for technology strategic guidance and oversight. Andreas has 10+ years of experience with headless commerce architecture across wholesale, retail, and fintech.
