How to Scale an Ecommerce Platform for Peak Sales Events Without Downtime

Every ecommerce platform faces its real test during peak sales: Black Friday, Cyber Monday, or major holiday promos. When traffic spikes, even small inefficiencies can trigger costly downtime. According to the Uptime Institute’s 2023 Outage Analysis Report, more than two-thirds of all outages cost over $100,000, and costs continue to rise as digital dependency grows.

What we’ve noticed over years of working with ecommerce clients, though, is that peak season itself has changed. The traditional one-day flash sale has stretched into week-long campaigns, so pressure on systems lasts longer, and instead of one massive crash, failures tend to build up gradually. But one thing hasn’t changed: the platforms that survive these events are the ones that prepare early and thoroughly.

We’ve guided clients through dozens of high-load events: scaling systems, optimizing performance, and monitoring everything in real time through our ecommerce development and support services. Below, we break down what actually works to prepare for peak traffic and keep platforms fast and stable no matter the pressure.

Quick Tips for Busy People

Here’s how to prep your ecommerce site for holiday traffic in 6 takeaways:

- Prepare from two angles: true stability comes from balancing architectural readiness with operational and data accuracy.

- Invest in scalability early: add nodes and resources in advance, use event-driven workflows to offload heavy operations, and use a modular architecture to scale only the high-load processes.

- Keep caching and databases clean and consistent: align cache layers, purge old data regularly, and tune database queries before traffic surges to prevent hidden bottlenecks.

- Data drives trust, not just performance: mismatched prices, stock, or promos break customer trust, even if systems stay online.

- Load testing shows limits, while stress testing shows failure: use stateful sessions and failure scenarios to reveal how systems degrade and recover under pressure.

- DevOps makes stability repeatable: IaC, autoscaling, and proactive monitoring turn peak traffic into a controlled process, not a crisis.

Below is how this peak-season preparation should be approached in more detail.

Two Perspectives on Preparing for Peak Traffic

After years of guiding ecommerce platforms through peak sales seasons, we know that stability, meaning what your system actually delivers when the load hits, depends on preparing in two directions at once.

The first is architectural readiness: ensuring the system can scale, stay resilient, and maintain high performance under pressure. This layer covers the platform’s core (infrastructure, application services, and databases), which must scale predictably, distribute load reliably, and keep response times stable when demand peaks. Without that foundation, even perfect data or well-configured promotions won’t hold the system together.

The second is operational and data readiness, which is responsible for keeping the business layer accurate and consistent. Product data, prices, promotions, and inventories must all align across channels, because even the best architecture fails if the content it serves is wrong.

These two layers always move together and complement each other. With the approach framed, we can break it down from the bottom up, starting with the architecture.

Architectural Patterns for Scalability

Preparing for peak traffic starts with refining the architecture you already have. You can’t “patch in” scalability days before a sale, because systems need time to be adjusted, stress-tested, and reinforced in advance. After years of watching platforms under pressure, we’ve seen what holds up and what gives out first.

Horizontal scaling

Sure, throwing more CPU or RAM at the problem helps, but only to a point. When traffic ramps up, especially for longer periods, you need to scale outward, not just upward. That means adding more servers, sharing the workload, and making sure no single service is overwhelmed.

During big sales events, it’s usually busy workflows, like orders, consignments, and shipment tracking, that start to struggle first. We scale these services independently to absorb surges without slowing down checkout or order processing.

The key to scaling is adding resources in advance rather than waiting to see where they might be needed. On one of our projects, the client’s IT leadership team decided to scale reactively, adding nodes only once the system started showing signs of strain. But because new nodes took time to come online and start sharing the load, parts of the business logic became temporarily unavailable, and we had to step in to stabilize processes manually. It was a clear reminder that preparation should always happen ahead of demand, not during it.
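To make “in advance” concrete, here is a minimal back-of-the-envelope sketch for sizing a pre-scaled node pool. It assumes you already know, from load tests, roughly how many requests per second one node sustains at acceptable latency. The function name, the 70% target utilization, the single spare node, and the sample figures are illustrative assumptions, not SAP Commerce Cloud defaults.

```python
import math


def nodes_for_peak(peak_rps: float, rps_per_node: float,
                   target_utilization: float = 0.7, spare_nodes: int = 1) -> int:
    """Estimate how many nodes to provision before a sale (illustrative only).

    peak_rps: forecast peak requests per second (hypothetical input).
    rps_per_node: throughput one node sustains at acceptable latency,
        as measured in load tests.
    target_utilization: keep nodes below saturation to leave headroom.
    spare_nodes: extra capacity so losing one node doesn't overload the rest.
    """
    if rps_per_node <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("invalid capacity inputs")
    usable_per_node = rps_per_node * target_utilization
    return math.ceil(peak_rps / usable_per_node) + spare_nodes


# Forecast: 1,800 req/s at peak; one node handles ~250 req/s in tests.
print(nodes_for_peak(1800, 250))  # 12
```

Rounding up and adding a spare node deliberately errs toward over-provisioning, so autoscaling only has to absorb forecast errors rather than the whole surge.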

Pro tip: Pre-scale manually before the sale, but still enable autoscaling as your safety net for when traffic exceeds predictions.

In SAP Commerce Cloud, node and resource configuration is managed in the Cloud Portal. However, the default settings usually provide only a minimal number of nodes and a minimal amount of memory. In most projects, these defaults aren’t enough for peak traffic, so scaling rules and node adjustments need to be customized in advance as part of the release plan.

Modular and extensible design

In complex ecommerce platforms, performance issues rarely affect the whole system at once; they usually hit a specific process, like order placement, promotions, or stock updates. That’s why modular architecture matters: it lets you isolate and scale only the components under pressure rather than expanding the entire application.

In SAP Commerce Cloud, this modularity becomes a real advantage. Its extension-based setup makes targeted scaling straightforward, allowing you to allocate extra resources exactly where they’re needed. So when order processing slows during a sale, there’s no need to scale the entire platform, as you can boost that specific extension and keep the rest of the system running efficiently.

This approach has proven effective on high-load projects, ensuring performance issues stay localized and scaling efforts are more targeted, faster, and less disruptive.

Event-driven architecture

When one process blocks another, the system’s execution threads stall and overall throughput drops. Event-driven design fixes that. Using message queues like SAP Event Mesh, we offload heavy operations, such as payment confirmation, stock validation, fraud checks, and inventory updates, into asynchronous flows.

Checkout remains synchronous for consistency, while the heavy follow-up work runs asynchronously in the background, so user-facing requests don’t compete with background jobs. This reduces response times, keeps checkout stable, and prevents queues from growing uncontrollably, even when traffic increases tenfold.
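The pattern can be sketched with Python’s standard `asyncio` library standing in for a real message broker such as SAP Event Mesh. This is a minimal illustration under that assumption: the checkout handler does its synchronous part, publishes an event, and replies immediately, while a pool of background workers handles the heavy follow-up steps. The event shape and function names are hypothetical.

```python
import asyncio
from typing import List, Tuple


async def place_order(order_id: str, queue: asyncio.Queue) -> str:
    """Synchronous part of checkout: validate and persist, then hand off."""
    # Cart validation and order persistence would happen here (omitted).
    await queue.put({"type": "OrderPlaced", "order_id": order_id})
    return f"order {order_id} accepted"  # the customer gets a reply right away


async def worker(name: str, queue: asyncio.Queue, done: List[Tuple[str, str]]) -> None:
    """Background consumer for heavy follow-up work."""
    while True:
        event = await queue.get()
        try:
            await asyncio.sleep(0.01)  # stand-in for fraud checks, stock updates, etc.
            done.append((name, event["order_id"]))
        finally:
            queue.task_done()


async def main() -> Tuple[List[str], List[Tuple[str, str]]]:
    # Bounded queue: if workers fall behind, put() waits instead of growing forever.
    queue: asyncio.Queue = asyncio.Queue(maxsize=1000)
    done: List[Tuple[str, str]] = []
    workers = [asyncio.create_task(worker(f"w{i}", queue, done)) for i in range(3)]
    replies = [await place_order(f"o{n}", queue) for n in range(10)]
    await queue.join()  # wait until every event has been processed
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return replies, done


if __name__ == "__main__":
    replies, done = asyncio.run(main())
    print(replies[0], "|", len(done), "events processed")
```

The bounded queue is a deliberate choice: when consumers fall behind, producers slow down instead of letting the backlog grow without limit.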

Headless approach

A few years ago, it was common for ecommerce platforms to keep everything under one roof: storefront, back-end, inventory logic, etc. It made sense early on, helping teams move fast. But as systems grew larger and traffic volumes increased, this setup started creating more problems than convenience. Take SAP Commerce Cloud, for example. Its built-in CMS works well in the beginning, but during peak sales, it puts an extra load on the core commerce engine, which ends up handling both content and transactions at once.

That’s why we’re now seeing more and more teams separate these layers to reduce pressure and gain flexibility. Many are moving to a headless setup: the front-end is separated from the back-end, content is delivered through dedicated headless CMS platforms like Contentstack or Amplience, and each part can scale on its own without putting the whole system under pressure.

As a result, the infrastructure becomes leaner, with fewer sync dependencies, faster response times, and easier scaling when traffic spikes.

Caching strategy

Caching is one of the most efficient ways to reduce load and improve response times, but only when it’s structured and aligned across all layers.

In SAP Commerce Cloud, caching works on multiple levels: CDN, front-end (e.g., Spartacus), middleware, API, and database, which adds complexity and may introduce pitfalls. For example, in one of our projects, all five layers were enabled, but their TTLs weren’t synchronized. As a result, some users saw updated prices while others still saw old ones simply because different cache layers expired at different times.

That’s why, before any high-traffic event, we always review caching end-to-end: how long data lives, where invalidation is triggered, and whether all layers refresh together.
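One way to reason about TTL alignment is worst-case staleness. When cache layers are stacked and each one refills from the layer below it, a change can stay invisible to users for up to roughly the sum of all TTLs, unless invalidation is triggered explicitly. The sketch below assumes that read-through model; the layer names mirror the text, while the TTL values and the 5-minute price budget are made up for illustration.

```python
from typing import Dict

# Hypothetical TTLs in seconds, outermost layer first.
CACHE_TTLS: Dict[str, int] = {
    "cdn": 300,
    "frontend": 60,
    "middleware": 120,
    "api": 600,
    "database": 30,
}


def worst_case_staleness(ttls: Dict[str, int]) -> int:
    """Upper bound (seconds) on how long a change can stay invisible to users,
    assuming each layer refills from the one below and nothing is purged."""
    return sum(ttls.values())


def within_budget(ttls: Dict[str, int], budget_s: int) -> bool:
    """Check the cache stack against a staleness budget agreed for, e.g., prices."""
    return worst_case_staleness(ttls) <= budget_s


print(worst_case_staleness(CACHE_TTLS))         # 1110 s, i.e. 18.5 minutes
print(within_budget(CACHE_TTLS, budget_s=300))  # False
```

If the result exceeds what the business can tolerate for prices or stock, either shorten and align the TTLs or rely on explicit invalidation for that data.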

When this isn’t done, we repeatedly see similar issues:

- Misaligned TTLs across layers: different cache expiry times lead to mismatched prices, outdated images, or product data inconsistencies on the storefront.

- Cache not purged after catalog updates: if the cache isn’t cleared after updating products or content, users keep seeing old data even though the back-end is correct.

- Promotions and pricing logic not cached properly: promotions are often ignored in caching strategies, which causes slow recalculations or incorrect discounts under load.

- Relying on application-level cache without CDN invalidation: without proper CDN rules, the system keeps serving outdated assets, and instead of helping, the cache becomes a source of errors.
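For the purge-related issues above, the order of invalidation matters as much as the purge itself. A common rule is to clear caches from the innermost layer outward: if the CDN is purged first, its next miss can be refilled from a middle layer that still holds the old price. The sketch below simulates this with in-memory dictionaries and ignores TTLs (entries live until purged); the layer layout and helper names are hypothetical, and a real setup would call each layer’s own invalidation API instead.

```python
from typing import Callable, Dict, List, Optional

# Source of truth for prices (stands in for the commerce database).
SOURCE: Dict[str, float] = {}


def read(layers: List[Dict[str, float]], sku: str, level: Optional[int] = None) -> float:
    """Read-through lookup; layers[0] is innermost (api), layers[-1] outermost (cdn)."""
    if level is None:
        level = len(layers) - 1
    if level < 0:
        return SOURCE[sku]
    cache = layers[level]
    if sku not in cache:
        cache[sku] = read(layers, sku, level - 1)  # miss: refill from the layer below
    return cache[sku]


def purge(layers: List[Dict[str, float]], sku: str, inner_to_outer: bool,
          on_step: Callable[[], object]) -> None:
    """Invalidate one SKU in every layer; on_step simulates traffic mid-purge."""
    levels = list(range(len(layers)))
    for level in (levels if inner_to_outer else levels[::-1]):
        layers[level].pop(sku, None)
        on_step()


def price_after_update(inner_to_outer: bool) -> float:
    SOURCE["SKU-1"] = 49.99
    layers: List[Dict[str, float]] = [{}, {}, {}]  # api, middleware, cdn
    read(layers, "SKU-1")        # warm all layers with the old price
    SOURCE["SKU-1"] = 39.99      # catalog update reaches the source
    purge(layers, "SKU-1", inner_to_outer, lambda: read(layers, "SKU-1"))
    return read(layers, "SKU-1")  # what the next customer sees


print(price_after_update(inner_to_outer=False))  # 49.99: stale price refilled at the CDN
print(price_after_update(inner_to_outer=True))   # 39.99
```

Even with inner-to-outer ordering, customers may briefly see the old price until the outermost purge completes; the difference is that the stack converges on the correct value instead of re-caching the stale one.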

A simple but effective practice to avoid caching mistakes is to move all static media (images, banners, videos) to a CDN instead of routing it through the core commerce system. This offloads thousands of unnecessary requests and keeps page rendering predictable during peak traffic.

Learn when caching introduces real production issues and how to avoid them as you tune your cache before peak traffic. Download our whitepaper on cache misuse in ecommerce.

Database optimization

During peak traffic, the database is typically the first pressure point in the system: it is hit with thousands of simultaneous reads and writes, and contention for connections, locks, and I/O grows faster than the traffic itself. To make sure the database can handle a high-load period, we focus on three main tactics:

- Read/write splitting to distribute queries across replicas.

- Index tuning for the most frequently accessed attributes.

- Query refactoring to avoid nested joins and overfetching.
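Read/write splitting usually lives in the data-access layer. Here is a minimal routing sketch, assuming one primary and several read replicas identified by connection names; the class and its simple “starts with SELECT” heuristic are illustrative only. Production routers also need to handle replication lag, for example by sending a user’s reads to the primary for a short window after that user writes.

```python
import itertools
from typing import List


class QueryRouter:
    """Send writes to the primary and spread plain reads across replicas."""

    def __init__(self, primary: str, replicas: List[str]) -> None:
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, sql: str, in_transaction: bool = False) -> str:
        statement = sql.lstrip().upper()
        # Locking reads (SELECT ... FOR UPDATE) must run on the primary.
        is_plain_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        # Reads inside a transaction stay on the primary to see its own writes.
        if is_plain_read and not in_transaction and self._replicas is not None:
            return next(self._replicas)  # round-robin across replicas
        return self.primary


router = QueryRouter("primary", ["replica-1", "replica-2"])
print(router.route("SELECT name FROM products WHERE code = ?"))               # replica-1
print(router.route("SELECT stock FROM inventory WHERE code = ?"))             # replica-2
print(router.route("UPDATE inventory SET stock = stock - 1 WHERE code = ?"))  # primary
```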

From experience, cleaning up slow queries before scaling hardware pays off since it frees resources, cuts waste, and makes any additional infrastructure actually count. A good example is when a global healthcare ecommerce platform used localized attributes for every product field, creating millions of redundant DB entries and massive joins. After separating localized and non-localized data and caching frequent configs, response times dropped from five seconds to under 90 milliseconds, and query counts fell by nearly 100x.

All this means that true scalability doesn’t always come from adding more resources. Often, it starts with rethinking how data moves through the system.

Operational and Data Readiness Checklist

Once you know the system can handle the load, the next thing to focus on is the data behind it. Solid infrastructure keeps everything running, but it’s clean, reliable data that keeps it working properly when things get intense.

That includes the basics: prices, descriptions, and images must match across all connected systems. It sounds obvious, but during peak traffic, even a small mismatch can snowball into support tickets, pricing disputes, or refund chaos once orders start rolling in.

Before every sales event, we run a full operational readiness check to make sure the business logic is just as strong as the tech layer behind it.

One of the first things we do is check inventory levels for high-demand or flash-sale products. But what really makes a difference is setting clear communication rules and alert thresholds. That way, if stock starts to drop or an item is close to selling out, both the system and the team can respond in time, not after it’s too late.

This is where stock alerts and bundle rules become essential. When one product in a bundle is close to selling out, the system should trigger an alert or apply predefined rules automatically. These rules might pause the bundle, adjust availability, or notify the right teams early enough to react before it impacts customers.
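A bundle rule like this can be expressed as a small evaluation function. The sketch below is hypothetical: the alert threshold, the `Action` names, and the choice to pause a bundle as soon as any component can no longer fill it are assumptions for illustration, not a built-in feature of any specific platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Action(Enum):
    OK = "ok"
    ALERT = "alert"                # notify the responsible team
    PAUSE_BUNDLE = "pause_bundle"  # stop selling the bundle automatically


@dataclass
class Bundle:
    code: str
    components: Dict[str, int]  # product code -> units needed per bundle


def evaluate_bundle(bundle: Bundle, stock: Dict[str, int], alert_at: int) -> Action:
    """Pick an action from how many complete bundles current stock can still fill."""
    sellable = min(stock.get(sku, 0) // qty for sku, qty in bundle.components.items())
    if sellable == 0:
        return Action.PAUSE_BUNDLE
    if sellable <= alert_at:
        return Action.ALERT
    return Action.OK


gift_set = Bundle("GIFT-SET", {"MUG": 1, "COFFEE-250G": 2})
print(evaluate_bundle(gift_set, {"MUG": 500, "COFFEE-250G": 30}, alert_at=20))  # Action.ALERT
print(evaluate_bundle(gift_set, {"MUG": 500, "COFFEE-250G": 1}, alert_at=20))   # Action.PAUSE_BUNDLE
```

The rule looks at the minimum across components because the scarcest item limits the bundle: 500 mugs don’t help if only 15 bundles’ worth of coffee is left.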

Promotions are another area that needs close attention ahead of peak season. We predefine and manually test promo rules well before the launch, using controlled deployments or A/B testing to validate discount logic and display accuracy.

Our team can assess your platform and fine-tune performance so everything runs smoothly when it counts.

Load Testing for System Readiness

Reliable performance during peak season is the result of weeks of controlled testing and fine-tuning. Before any campaign goes live, we replicate real conditions as closely as possible, pushing the system to its limits in a safe environment. This process helps us spot weak points early, long before customers do.

We follow a consistent, structured approach that focuses on both realism and precision:

- Test for peak, not average: traffic spikes don’t follow smooth curves. We use production-like environments and simulate maximum load, not the expected median, to understand how the system behaves at its true limits.

- Monitor the right metrics: we track response times, error rates, queue lengths, CPU and memory usage, and database latency, the indicators that show when performance starts to degrade.

- Run incremental tests: we begin with moderate loads, analyze bottlenecks, make adjustments, and gradually raise the pressure. This helps pinpoint exactly where performance drops and why.

- Automate reports: every test run produces an actionable report with clear metrics and recommendations, giving the team reliable data to fine-tune configurations before launch.
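Incremental runs and automated reports fit together in a small post-processing step. This sketch assumes your load tool can export one summary row per load step (concurrent users, p95 latency, error rate); the field names, the 800 ms p95 SLO, and the 1% error ceiling are placeholders to adapt, and the sample numbers are invented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StepResult:
    users: int         # concurrent virtual users in this step
    p95_ms: float      # 95th percentile response time
    error_rate: float  # fraction of failed requests (0.0-1.0)


def first_breach(steps: List[StepResult], p95_slo_ms: float,
                 max_error_rate: float) -> Optional[StepResult]:
    """Return the lowest load step that violates the SLO, or None."""
    for step in sorted(steps, key=lambda s: s.users):
        if step.p95_ms > p95_slo_ms or step.error_rate > max_error_rate:
            return step
    return None


def report(steps: List[StepResult], p95_slo_ms: float = 800,
           max_error_rate: float = 0.01) -> str:
    breach = first_breach(steps, p95_slo_ms, max_error_rate)
    if breach is None:
        return f"All {len(steps)} steps within SLO (p95 <= {p95_slo_ms} ms)."
    return (f"SLO breached at {breach.users} users: "
            f"p95 {breach.p95_ms:.0f} ms, errors {breach.error_rate:.1%}")


run = [StepResult(500, 210, 0.001), StepResult(1000, 260, 0.002),
       StepResult(2000, 540, 0.004), StepResult(4000, 1900, 0.031)]
print(report(run))  # SLO breached at 4000 users: p95 1900 ms, errors 3.1%
```

The breaching step alone doesn’t explain the cause; it tells the team which load level to re-run with closer profiling before launch.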

If done right, load testing tells you not just whether your system can handle peak traffic, but how it handles it and where to reinforce before the real crowd arrives.

Stress Testing for Peak Season Readiness

When stress testing, most teams only cover the happy path: browse, add to cart, place an order. That’s the baseline. To be truly ready for peak traffic, you need to go beyond it: push the system past its limits, simulate failures, and see how well it recovers. Below is how we approach that.

Understanding real-world behavior before stressing the system

Every test starts with understanding how people actually use your site. We study analytics: how users move through categories, how long they spend comparing products, how many reach checkout, and then turn that behavior into test patterns.

Using APM tools like Dynatrace, we model these sessions with realistic steps and time gaps, not random bursts of API calls. Realistic scenarios expose the parts that truly impact conversion, like cart persistence, promo validation, and stock checks.

Designing stateful user sessions

In ecommerce, stateless tests, where each request is isolated and doesn’t retain session data, give a false sense of security. They check system response times but ignore how real sessions accumulate data, consume memory, and create interdependent transactions.

When we run stateful tests, each virtual user maintains a persistent session, meaning their actions and data persist throughout the journey. This process engages the full stack: pricing, inventory, tax, and order management systems.

This approach is especially critical for testing carts, promotions, and checkout flows, where multiple systems interact and data consistency is key. Only with stateful sessions can you see how the system behaves when thousands of active baskets are open at once and whether performance degrades over time.
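The difference between stateless and stateful virtual users is easiest to see in code. Below is a tool-agnostic sketch in plain Python: each virtual user creates its session once and reuses it on every step, so the server holds open carts for all users at the same time. `FakeShop` is a hypothetical in-memory stand-in for the system under test; in a real test, your load tool’s HTTP client would take its place.

```python
from dataclasses import dataclass
from typing import Dict, List


class FakeShop:
    """Hypothetical in-memory stand-in for the storefront API under test."""

    def __init__(self) -> None:
        self.carts: Dict[str, List[str]] = {}  # server-side state per session

    def login(self, user_id: int) -> str:
        token = f"session-{user_id}"
        self.carts[token] = []
        return token

    def add_to_cart(self, token: str, sku: str) -> int:
        self.carts[token].append(sku)
        return len(self.carts[token])

    def checkout(self, token: str) -> int:
        return len(self.carts.pop(token))  # order placed, cart closed


@dataclass
class VirtualUser:
    user_id: int
    shop: FakeShop
    token: str = ""

    def start(self) -> None:
        self.token = self.shop.login(self.user_id)  # session created once...

    def add(self, sku: str) -> int:
        return self.shop.add_to_cart(self.token, sku)  # ...and reused on each step

    def checkout(self) -> int:
        return self.shop.checkout(self.token)


shop = FakeShop()
users = [VirtualUser(i, shop) for i in range(1000)]
for user in users:
    user.start()
    user.add("SKU-1")
    user.add("SKU-2")
print(len(shop.carts))                         # 1000 baskets open at the same time
print(sum(user.checkout() for user in users))  # 2000 items ordered
print(len(shop.carts))                         # 0
```

A stateless script would fire each request without a session, so the server would never accumulate the open baskets that consume memory and drive contention during a real sale.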

Incorporating think times and pauses

Users aren’t bots. They browse, compare, and hesitate. That human rhythm matters when you’re simulating real-world load. If every virtual user clicks at the same millisecond, you’re testing an unrealistic surge that tells you nothing about your real bottlenecks.

We insert randomized think times, typically between 3 and 7 seconds, to reflect how people actually shop. This helps create a realistic wave pattern, where load ebbs and flows naturally, rather than a flat, artificial pressure line.
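Think time also determines how many requests a given number of virtual users actually generates. A standard way to estimate this is the interactive response time law from queueing theory, X = N / (R + Z), where N is the number of concurrent users, R the average response time, and Z the average think time. The sketch below applies it; the 10,000 users and 0.5 s response time are illustrative, and 5 s is simply the mean of the 3 to 7 second range above.

```python
import random


def think_time(low_s: float = 3.0, high_s: float = 7.0) -> float:
    """Randomized pause between user actions, drawn uniformly from [low_s, high_s]."""
    return random.uniform(low_s, high_s)


def throughput_rps(users: int, response_s: float, think_s: float) -> float:
    """Interactive response time law: X = N / (R + Z)."""
    return users / (response_s + think_s)


# 10,000 concurrent shoppers, 0.5 s average response, 5 s average think time
print(round(throughput_rps(10_000, 0.5, 5.0)))  # 1818 requests per second
# Same users with zero think time, if response time held at 0.5 s (it wouldn't):
print(round(throughput_rps(10_000, 0.5, 0.0)))  # 20000
```

The gap between the two numbers is why tests without think time produce an artificial pressure line rather than the load real shoppers create.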

Testing failure and recovery scenarios

Peak readiness is also about how quickly you recover when something goes wrong. That’s why we include controlled failure testing, simulating scenarios like:

- inventory running out mid-checkout;

- payment rejections or gateway timeouts;

- abandoned carts piling up during high traffic.

The goal here is to see how the system recovers, whether data remains consistent, and how long it takes for services to stabilize. It’s a controlled form of chaos engineering that validates whether recovery mechanisms perform reliably in real-world scenarios.
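A failure scenario is only useful if the test checks the aftermath. Here is a minimal sketch of one such check: a payment gateway mock that times out on demand, and a checkout that releases the reserved stock when payment ultimately fails, so inventory stays consistent. `FlakyGateway`, `Inventory`, `checkout`, and the retry count are hypothetical stand-ins for your own services or mocks.

```python
from typing import Dict


class GatewayTimeout(Exception):
    """Raised when the (simulated) payment gateway does not answer in time."""


class FlakyGateway:
    """Hypothetical gateway mock that times out on its first `failures` calls."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def charge(self, order_id: str, amount: float) -> str:
        self.calls += 1
        if self.calls <= self.failures:
            raise GatewayTimeout(order_id)
        return f"auth-{order_id}"


class Inventory:
    def __init__(self, stock: Dict[str, int]) -> None:
        self.stock = dict(stock)

    def reserve(self, sku: str) -> bool:
        if self.stock.get(sku, 0) <= 0:
            return False
        self.stock[sku] -= 1
        return True

    def release(self, sku: str) -> None:
        self.stock[sku] += 1


def checkout(order_id: str, sku: str, amount: float, inv: Inventory,
             gateway: FlakyGateway, retries: int = 2) -> str:
    if not inv.reserve(sku):
        return "out_of_stock"
    for _ in range(retries + 1):
        try:
            # Retrying is only safe if the gateway deduplicates by order_id
            # (an idempotency key); this sketch assumes it does.
            gateway.charge(order_id, amount)
            return "confirmed"
        except GatewayTimeout:
            continue
    inv.release(sku)  # compensate: a failed payment must not keep stock locked
    return "payment_failed"


inv = Inventory({"TV-55": 1})
print(checkout("o1", "TV-55", 499.0, inv, FlakyGateway(failures=5)))  # payment_failed
print(inv.stock["TV-55"])                                             # 1 (stock restored)
print(checkout("o2", "TV-55", 499.0, inv, FlakyGateway(failures=1)))  # confirmed
print(checkout("o3", "TV-55", 499.0, inv, FlakyGateway(failures=0)))  # out_of_stock
```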

Gradual load increase and monitoring system behavior

Instead of pushing the system to maximum load right away, we scale it up step by step and watch for the moment things start to slow down, like growing latency, overloaded thread pools, long queues, or slower database responses. Tools like Dynatrace and SAP Cloud Portal let us monitor everything in real time, including CPU and memory usage, thread pools, queue size, and database performance. That way, we know exactly when the system starts to feel the pressure.

Considering device, location, and network variability

Some testing guides recommend simulating traffic from different devices, regions, and network conditions. It might be useful in theory. But from our experience, it rarely pays off in enterprise ecommerce. Creating and maintaining complex scripts to mimic these variations adds significant QA overhead without meaningfully improving performance insights. Most critical bottlenecks become visible without this extra layer of complexity, so we don’t prioritize it in stress testing.

If you’re worried about integrations failing when traffic surges, get our whitepaper on integration-ready ecommerce — practical strategies to keep systems connected and stable.

DevOps Practices for Reliable Peak Season Deployments

Even the best-tuned architecture can fail if deployments are shaky. That’s why DevOps is a key part of peak-season prep. It helps keep deployments stable and the infrastructure reliable when traffic hits its highest point.

Real-time monitoring and alerting

Monitoring is a shared responsibility across engineering teams. Before peak season, we set up a layered system of alerts that keeps both technical and business indicators in check.

We typically track three levels of data:

- System metrics: CPU utilization, memory, thread counts, and database health indicators.

- Application metrics: API exceptions, checkout errors, and queue performance.

- Business metrics: failed order counts, missing promotions, or sync delays between systems.
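The three levels can share one simple rule format. The sketch below is a tool-agnostic illustration: metric names and thresholds are invented, and in practice the rules would live in your monitoring tool (for example, Dynatrace) rather than in application code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AlertRule:
    level: str        # "system", "application", or "business"
    metric: str
    threshold: float  # alert when the observed value exceeds this


RULES: List[AlertRule] = [
    AlertRule("system", "cpu_utilization_pct", 75),
    AlertRule("system", "db_connection_pool_used_pct", 80),
    AlertRule("application", "checkout_error_rate_pct", 1.0),
    AlertRule("application", "queue_depth", 5000),
    AlertRule("business", "failed_orders_per_min", 10),
    AlertRule("business", "erp_sync_delay_s", 120),
]


def evaluate(snapshot: Dict[str, float], rules: List[AlertRule] = RULES) -> List[str]:
    """Return one message per breached rule; metrics missing from the snapshot are skipped."""
    alerts = []
    for rule in rules:
        value = snapshot.get(rule.metric)
        if value is not None and value > rule.threshold:
            alerts.append(f"[{rule.level}] {rule.metric}={value} (threshold {rule.threshold})")
    return alerts


for message in evaluate({"cpu_utilization_pct": 62, "checkout_error_rate_pct": 2.4,
                         "failed_orders_per_min": 14}):
    print(message)
```

This sketch silently skips metrics that are missing from the snapshot; a production setup should also alert when an expected metric stops reporting, since silence during a sale is itself a warning sign.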

When monitoring is configured well, the DevOps team may get through an entire sale without a single alert, a sign that scaling thresholds were set and tested properly. The point isn’t to react faster but to detect small degradations before they grow.

Autoscaling and infrastructure as code (IaC)

Manual scaling might work in early development, but not when thousands of customers are checking out simultaneously. Automation takes over here. Using tools like Terraform or AWS CloudFormation, DevOps specialists can configure, deploy, and update environments quickly and consistently.

The effective approach is to create scaling scripts in advance. The rule of thumb is that anything not scripted turns into a manual task, and manual changes under load are where things most often go wrong.
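As a concrete reference point, the Kubernetes Horizontal Pod Autoscaler documents its scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with a default 10% tolerance band. The sketch below reimplements that rule with min/max bounds to show how pre-scaling and autoscaling combine: raising the minimum before the sale is the pre-scale, and the formula handles anything beyond it. Whether your platform’s autoscaler uses this exact rule is an assumption to verify; SAP Commerce Cloud, for instance, exposes its own scaling settings in the Cloud Portal.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """Target-tracking rule in the style of the Kubernetes HPA, clamped to bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Everyday bounds vs. bounds raised ahead of the sale (the pre-scale).
normal = dict(min_replicas=2, max_replicas=10)
peak = dict(min_replicas=8, max_replicas=24)

# 4 nodes running at 90% CPU against a 60% target:
print(desired_replicas(4, 90, 60, **normal))  # 6
print(desired_replicas(4, 90, 60, **peak))    # 8: the raised floor wins
```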

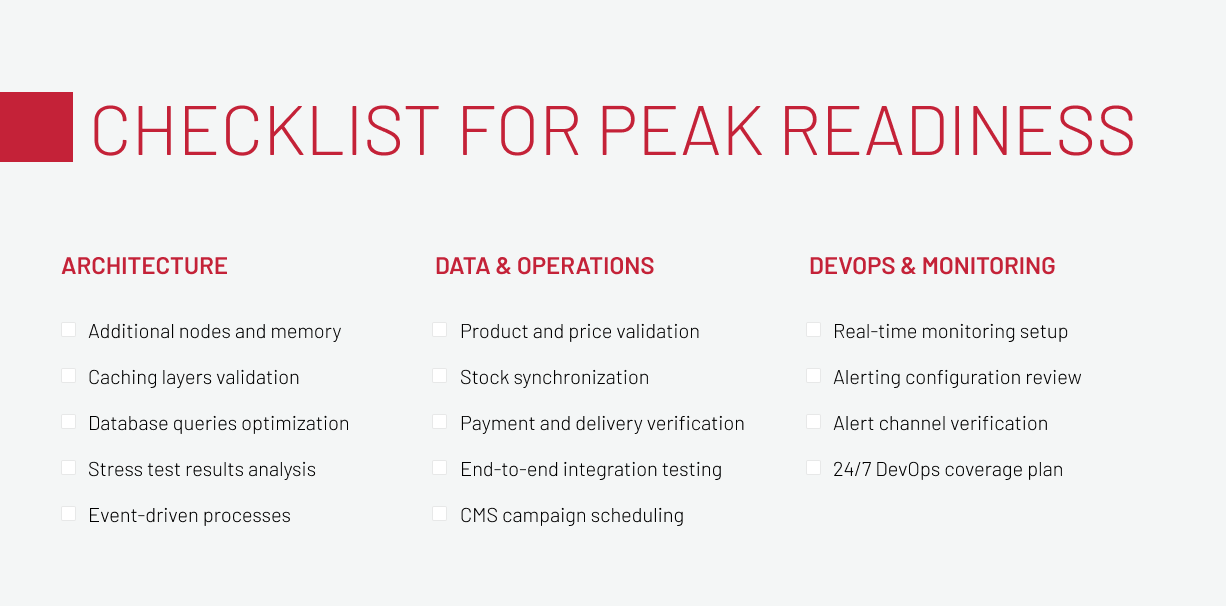

By this point, most of the work is done. But before we call the system ready, we still go through a checklist — not to plan things out, but to make sure everything critical is already in place.

Multi-Level Checklist for Peak Season Readiness

Before a major sales event, every part of the system should go through a final readiness review. Here’s a structured high-traffic checklist for ecommerce to make sure no weak link remains before the first surge hits.

Architecture & infrastructure:

- Extra nodes and memory are configured in advance through SAP Cloud Portal.

- Caching layers (CDN, object, and application) are reviewed and validated for consistent TTLs and performance.

- Database queries are optimized and indexes updated to remove potential bottlenecks.

- Event-driven processes are checked to ensure that queues can handle burst loads without delays.

- Stress-test results are analyzed, and configurations are fine-tuned based on real data from testing.

Data & operations:

- Product data, prices, and promotions are fully validated across systems.

- Stock synchronization with ERP systems and real-time availability updates are confirmed.

- Payment and delivery integrations are tested end-to-end to ensure no unexpected failures.

- CMS campaigns and cron jobs are reviewed for correct timing and visibility during the event.

DevOps & monitoring:

- Real-time monitoring and alerting are configured in Dynatrace or Cloud Portal.

- Autoscaling and Infrastructure as Code (IaC) scripts are tested to confirm dynamic scaling works as planned.

- Alert channels and thresholds are verified so the right people get notified immediately.

- A 24/7 DevOps support plan is in place, ensuring constant coverage throughout the sales event.

When every layer passes this checklist, the system can handle peak demand with confidence and predictability.

To Sum Up

Handling peak traffic smoothly doesn’t happen by accident. It comes from engineering discipline: scaling infrastructure ahead of demand, testing under real conditions, automating recovery paths, and validating data before the first order hits.

But technology alone isn’t what keeps systems online. The smoothest peak seasons we’ve seen were the ones where engineering, QA, DevOps, and business teams prepared together, made decisions based on real data, and knew how the system would behave before customers arrived.

Expert Soft’s team supports enterprise ecommerce systems through some of their busiest seasons with zero downtime, combining scalable architecture, engineering precision, and data accuracy. If you’d like to discuss how to prepare your platform for upcoming high-load events, you can reach out to our team anytime.

Kate Savastsiuk, Head of Digital Transformation and Customer Experience at Expert Soft, draws on extensive collaboration with enterprise teams to explore how leading ecommerce businesses scale their platforms for peak sales events while avoiding downtime.
