The Hidden Costs of Storing Stale Data in Enterprise Platforms

As systems scale, data builds up fast. At some point, tracking what’s fresh, what’s duplicated, and what should’ve been deleted starts to slip through the cracks. Cleanup mechanisms might’ve been set once or maybe not at all. Either way, an average of 55% of organizational data ends up stale.

These outdated records quietly linger in the background, slowing your system down, inflating infrastructure costs, and creating invisible risks. What’s worse is that this stale data hides in more places than you’d expect.

At Expert Soft, we regularly step into enterprise ecommerce platforms with performance or cost-reduction goals. Almost every time, we uncover stale data buried in unexpected parts of the system. Once removed, the platform runs leaner, faster, and becomes significantly cheaper to maintain.

This article is designed to surface those blind spots. You’ll see where data staleness tends to accumulate, how it impacts your system’s performance and cost, and how to start getting it under control before it eats up even more of your resources.

Quick Tips for Busy People

Here are the main points to keep in mind:

- It’s never in just one place: old records creep into caches, sync jobs, and legacy systems where you don’t expect them.

- Stale data compounds over time: the longer it’s ignored, the more it inflates costs, degrades performance, and clogs decision-critical workflows.

- “Keeping it just in case” backfires: holding data you don’t need only raises your exposure to compliance and security risks.

- Real-time dashboards don’t mean real-time data: sync delays between analytics and transactional databases mean dashboards display yesterday’s KPIs, while leadership thinks they’re looking at live numbers.

- Stale data forces smart teams into firefighting mode: engineers end up correcting the same issues over and over again. These are symptoms of data that’s out of sync, outdated, or never cleaned up in the first place.

- This isn’t a side task: delayed updates affect everything from customer trust to revenue operations, which makes fresh data a leadership concern.

Let’s take a closer look at what stale data is and the high-risk types it can take.

High-Risk Types of Stale Data

Outdated data comes in different forms, each carrying its own risks. Some kinds mislead decision-makers, others add unnecessary weight to infrastructure, and others create compliance and security liabilities. In enterprise systems, the most high-risk categories include:

-

Stale data

information that is no longer current but is still being used. Think of old product prices, inactive promotions, or outdated stock levels that remain visible. This misinformation leads to incorrect transactions, misleading dashboards, and poor strategic decisions.

-

ROT data (Redundant, Obsolete, Trivial)

ROT data, meaning duplicated records, expired entries, or irrelevant logs that clutter systems over time, might look harmless, but it increases storage costs and degrades performance, making every query and index slower.

-

Dark data

data collected but never used, such as unprocessed logs or archived reports. It consumes resources without adding value, and worse, it introduces security and compliance exposure when left unmanaged.

-

Inconsistent data

conflicting values across multiple systems, like mismatched product information between a PIM and a storefront. These inconsistencies result in customer dissatisfaction, order errors, and frequent integration failures.

-

Orphaned data

records that have lost their link to an active entity, such as promotions attached to products that no longer exist. They carry risks of corruption, add unnecessary processing overhead, and create noise in reporting systems.

But understanding the types and stale data meaning is only half the picture. The real problem is knowing where it hides inside complex enterprise systems.

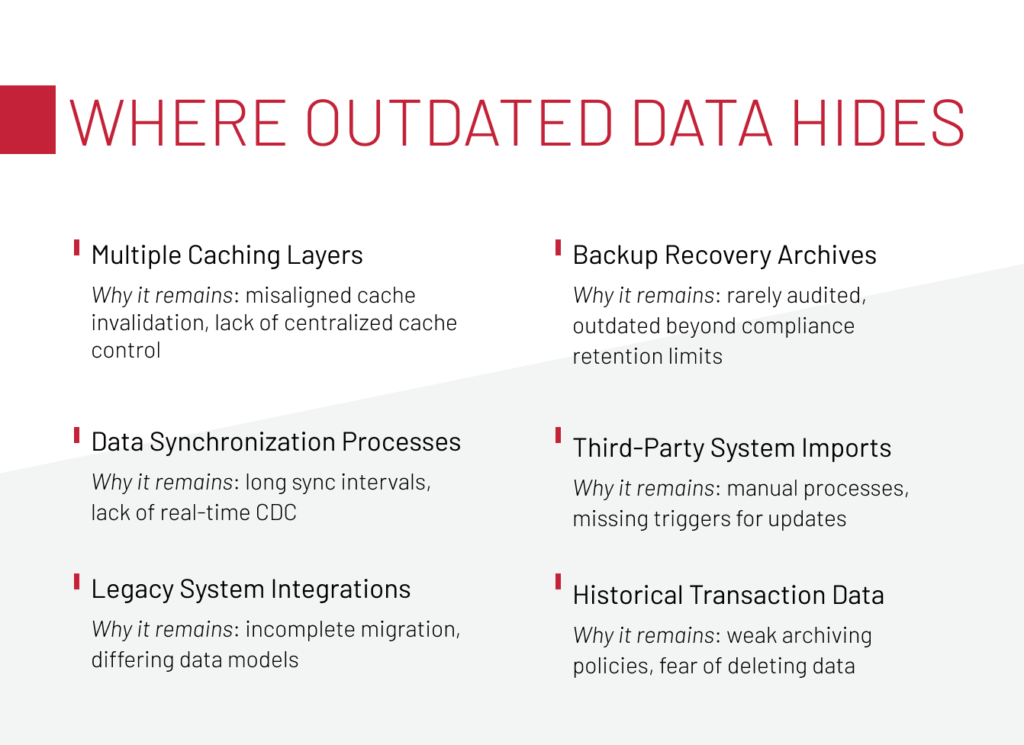

Where Stale Data Hides

One of the biggest challenges is that outdated data rarely exists in an established set of obvious places. Instead, it can hide across different layers of enterprise platforms, where it undermines operations. Some of the most common hiding spots are:

-

Multiple caching layers (CMS, storefront, middleware)

Without centralized cache control, invalidation rules often misalign. As a result, you get outdated product information or promotions appear in customer-facing channels, long after they should have been replaced.

-

Data synchronization processes

Long synchronization intervals and the lack of real-time change data capture (CDC) leave systems running on delayed information. This way, dashboards and decision-making processes rely on outdated snapshots instead of real-time data.

-

Legacy system integrations

When migrations are incomplete or data models differ, legacy records remain in circulation. These fragments cause inconsistencies and errors that ripple across the system.

-

Historical transaction data

Weak archiving policies or fear of deleting records mean years of orders and returns continue to live in production databases. The result is degraded query performance and longer processing times.

-

Third-party system imports

Manual imports and missing update triggers allow stale data from external systems to enter platforms unchecked, creating mismatches in availability, pricing, or delivery timelines.

-

Backup & disaster recovery archives

Backups are rarely audited, and outdated datasets linger far beyond compliance retention periods. This not only adds storage costs but also increases regulatory risk.

If you’d like to discuss where outdated records might be draining your system and how to cut those losses, our team at Expert Soft is open to talk.

The bad news is that all those hidden pockets of stale data come at a cost.

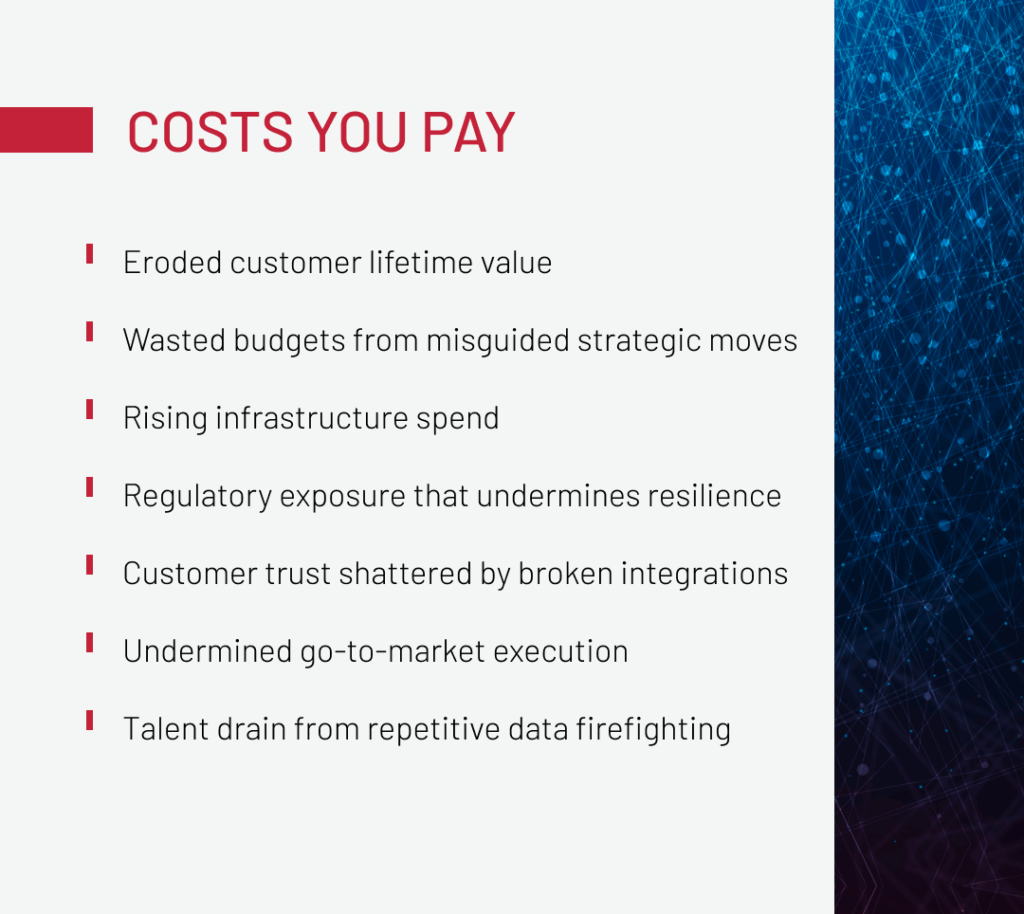

Costs You Pay for Stale Data

When data goes stale, the impact shows up in real money lost, wasted effort, and broken trust. What follows is where those costs appear and why leaders can’t afford to ignore them.

Eroded customer lifetime value

When data goes stale, customers notice right away. Loyalty balances don’t add up, product availability shifts between browsing and checkout, and prices fail to match what was promised. Each of these creates the same result: trust erodes fast.

In one enterprise setup, the CRM displayed outdated loyalty points after login because updates were processed asynchronously. Customers thought their balances had vanished, creating confusion and frustration. Support calls spiked, adding extra costs on top of lost goodwill.

The fix was simple but decisive: a back-end request was triggered whenever users accessed personal data pages, ensuring loyalty points and other account details were always accurate in real time.

Wasted budgets from misguided strategic moves

In one high-load setup, a one-day data sync from MySQL to MongoDB often led to cases when leadership saw outdated data and made decisions they believed were real-time. By the time mistakes became visible, the chance to correct them had already passed.

That’s the trap with stale data: it gives you a false sense of clarity. Dashboards that seem precise can actually steer strategy off course if the underlying metrics lag behind reality.

Expert Soft’s team fixed it by moving to real-time change data capture with AWS DMS and Kafka. Once the gap disappeared, dashboards became what they were supposed to be: tools for spotting issues as they happened, not long after.

Rising infrastructure spend

Historical data has value, but when it piles up in the wrong place, it can cripple performance.

In our experience, when a high-load ecommerce platform kept years of orders and returns in its production database, queries slowed, indexes bloated, and batch jobs dragged on, while infrastructure costs climbed.

The fix was smart archiving: older records were moved out of production, and once retention periods expired, they were deleted. This brought system speed back to normal and significantly cut operating costs.

Regulatory exposure that undermines business resilience

Unfortunately, stale data introduces more severe risks than degrading performance. For any enterprise system, retaining personal data beyond required periods creates both compliance and security vulnerabilities. Treating archiving as a governance practice helps avoid costly penalties and reputational damage.

Customer trust shattered by broken integrations

High-load platforms rely on numerous integrations and real-time sync between systems to maintain order integrity, stock visibility, and delivery accuracy. But when outdated or misaligned data flows through, it disrupts the entire experience.

During one high-volume sales event, outdated stock and delivery information exposed the risk. Customers placed orders for items that appeared available but weren’t. The fallout was immediate: cancelled transactions, failed checkouts, and mounting frustration that drove a surge in support requests.

By introducing early validation layers and automated cart-cleanup scripts to remove outdated records, the transaction flow stabilized and errors no longer cascaded through the system.

Undermined go-to-market execution

Promotions and offers only work if they reach customers on time, yet stale data can sabotage this process at scale. Instead of speeding up growth, it blocks campaigns before they even launch.

In one multi-layer architecture built on SAP, Spartacus, and Node.js, cache conflicts left outdated product prices live while new promotions were stuck unseen. Planned campaigns stalled, customers saw misleading information, and trust was undermined.

We restored execution by aligning cache resets across systems, refining cache layers, and adding a dedicated “no-cache” flag for promotions. These changes reestablished timing and accuracy, allowing go-to-market activities to run as intended.

Talent drain from repetitive data firefighting

Not only does stale data slow systems but also drain people. Customer service staff end up correcting order errors, engineers patch mismatches by hand, and business users spend hours validating transactions that should never have failed in the first place.

This constant firefighting mode consumes valuable hours and shifts focus away from meaningful projects. Prevention is the real answer: automated validations catch errors early, while strict retention policies ensure unnecessary records are removed. With these measures in place, teams can focus on strategic improvements instead of endless corrections.

While these costs may sound a little lofty, it’s easy to underestimate outdated records until you put numbers against them.

Let’s Calculate the Cost of Stale Data

This example is hypothetical, but it captures the real-world cost we see all too often. Imagine a high-load ecommerce platform processing millions of transactions each month. The system has not been optimized, so stale data builds up across critical areas:

- Customer service: outdated loyalty points trigger confusion, and support calls surge. Instead of a manageable average volume of 1,500 calls, agents are handling 10,000 calls a month tied directly to stale records. At $7 per call, that’s $70,000 gone.

- Infrastructure: historical orders and returns clog the production database. Query times drag, batch jobs extend, and just keeping the lights on demands 300 extra database hours a month. That’s another $15,000 added to the bill.

- Integration flow: Stock and delivery mismatches cause cancellations, each one creating both direct costs and wasted staff time.

Here’s how those numbers stack up in practice:

In this scenario, proactive stale data management saves $73,000 per month. Multiply that across a year, and you’re looking at nearly a million dollars that would otherwise be wasted without generating any business value in return.

Explore practical approaches to reducing infrastructure expenses in high-load ecommerce systems.

Download whitepaperNow that the cost is clear, the next step is prevention.

Best Practices to Prevent Stale Data Accumulation

The most effective way to deal with stale data is to stop it from accumulating in the first place. In complex stacks, prevention is always cheaper and more reliable than reactive cleanup. The following practices have proven to be the most effective:

-

Implement real-time data synchronization

Relying on long batch syncs creates unavoidable lag, leaving dashboards and operations behind reality. By applying change data capture (CDC) and event streaming with tools, such as Kafka, Debezium, or AWS DMS, systems stay in sync across services. This eliminates the “day-old data” problem and ensures decision-makers always see current metrics instead of outdated snapshots.

-

Centralize cache management

Multiple caching layers in CMS, storefront, or middleware tend to drift apart. Without unified control, invalidation rules misalign, and outdated promotions or product details remain visible. Centralizing cache management and coordinating resets across all layers prevents those conflicts, ensuring customers see only accurate, up-to-date information.

-

Enforce data retention & archiving policies

Old orders, logs, or personal records often linger in production far past their useful life. Enforcing retention policies and moving or deleting records once their compliance window closes keeps production lean. Smart archiving separates history from live data, restoring performance while reducing regulatory exposure from holding unnecessary personal data.

-

Automate data quality checks

Relying on manual reviews means stale or inconsistent data will slip through. Automated checks scheduled at regular intervals detect discrepancies before they spread across systems. By resolving issues early, teams avoid the firefighting cycle of endless corrections and keep operational focus where it belongs.

-

Design fail-safe imports & exports

Imports from third-party systems are one of the most common entry points for stale data. Designing fail-safe processes using durable queues with retries, such as ActiveMQ with state persistence in Redis, ensures incomplete updates don’t sneak through as “valid” records. This prevents downstream errors before they begin.

-

Audit integrations & analytics feeds

Third-party integrations and analytics tags are rarely set-and-forget. Misconfigured or outdated feeds silently introduce bad data into core systems. Regular audits of imports, exports, and analytics pipelines ensure external sources remain accurate, preventing unnecessary mismatches and failures.

-

Provide early validation in user flows

Catching errors after a customer clicks “buy” is too late. Adding early validation into checkout and pricing flows ensures that stock levels, prices, and delivery availability are verified before the order is finalized. This reduces cancellations, restores trust, and keeps costly support calls to a minimum.

Taken together, these practices shift data management from reactive cleanup to proactive control.

To Sum Up

When years-old transactions slow down queries, caches serve outdated prices, or integrations fail because of stale stock data, the cost is real. Extra infrastructure hours, rising support volume, and wasted staff time all add up quickly.

Expert Soft’s team has been working inside these high-load environments and knows exactly where stale data hides. By isolating and eliminating it, we help enterprises cut both resource and infrastructure costs while keeping platforms stable and responsive.

If these issues sound familiar, let’s talk and uncover where the hidden costs are coming from and how to stop them.

New articles

See more

See more

See more

See more

See more