Key Steps to Ensure Data Integrity During Legacy System Migration

For many enterprises and mid-size companies, the decision to migrate from a legacy system comes with one persistent fear of losing data along the way. When you’re dealing with massive, business-critical datasets, even the smallest gap can turn into a cascade of problems. The worst part is that sometimes you don’t even know something’s missing until the damage is done.

While ensuring that not a single critical record is lost is never simple, it’s far from impossible. Having successfully guided complex enterprise migrations, we at Expert Soft know exactly where the risks hide and what steps make the difference between a smooth transition and a post-migration headache.

It’s not always possible to point to one or two universal trouble spots that every migration will encounter. Each system is different. What we can do is highlight the most common risk areas and early warning signs that deserve extra attention, so you can minimize disruptions and keep your migration process as smooth as possible.

Quick Tips for Busy People

Data migration for legacy systems requires a strategic approach. Here are some key areas to focus on to ensure a smooth transition:

- Focus on data meaning: ensure your team understands the true meaning behind data fields, not just their names, to avoid confusion.

- Align business logic: make sure business rules behind fields are consistent across systems to prevent integrity issues.

- Unify codes across systems: standardize codes, e.g., region identifiers, to ensure smooth data flow and avoid misclassifications.

- Migrate in phases: break the migration into stages to reduce risk and allow early detection of integrity issues.

- Plan for rollback:ensure every batch is documented with metadata so you can quickly revert if issues arise.

- Integrate validation layers:use automation, dashboards, and human oversight to cover different angles and catch all potential integrity issues.

With these strategies in mind, let’s explore how to keep data integrity during the migration process.

Where Integrity Usually Breaks During Migration

Data integrity doesn’t usually fail all at once during migration. It happens gradually, such as with missed data mappings or overlooked edge cases. The sooner you catch these issues, whether during testing or early transfers, the easier and cheaper they are to fix. If left unchecked, they might turn into expensive problems after launch.

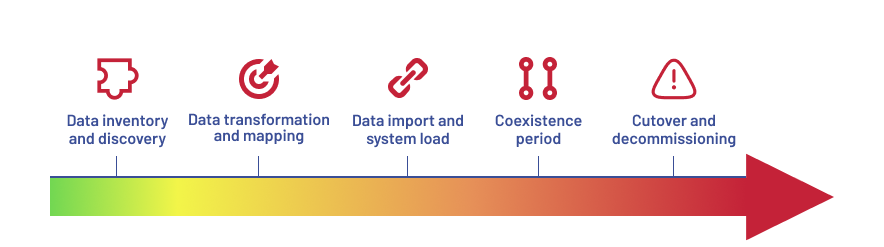

Let’s walk through the entire migration process and highlight the stages where data can get lost, as each step comes with its own set of risks.

Data inventory and discovery

The first cracks often appear before any code is written. This is the phase where teams should identify what data exists, what it means, and how it behaves. However, this step is sometimes treated as a simple inventory exercise. Teams skim over the structure and assume that matching names guarantees matching logic.

What breaks:

Critical business fields can look the same across systems but have different default values, constraints, or implications. Older platforms often carry hidden assumptions, for example, using a missing “region” may imply a default country. A modern system might see that same field as empty or missing, disrupting everything from personalization to tax logic.

In a project with a beauty and cosmetics retailer, what seemed like regular CMS data included deeply embedded configuration logic that wasn’t documented. This led to significant sync problems between the old front-end (Hybris Accelerator) and the new one (Spartacus).

The main issue was the inconsistent structure of CMS components. This was caused by hidden configuration logic that was not accounted for in the initial inventory. Legacy systems often contained unspoken assumptions. For example, missing “region” fields were taken as a “default country” in the new system. This led to synchronization failures.

What to watch for (technical checks):

- Business rules embedded in data (“0” = inactive vs “0” = active)

- Deprecated fields still active in legacy edge-case logic

- Incomplete documentation of field dependencies

Data transformation and mapping

This is the stage where data is extracted and restructured to fit the target schema. The issue is that fields are mapped by name or type, not by how they work within the business logic.

What breaks:

Fields that look similar may behave differently. For data migration from legacy systems, inconsistent regional codes or tax rules can lead to errors in pricing and reward logic. Hardcoded values and computed fields remain undocumented, resulting in significant discrepancies after import.

During a loyalty program migration for a luxury goods brand, inconsistent regional codes (“TR” vs “TUR”) and mismatched tax rules led to errors in pricing and reward logic. These problems weren’t identified during QA. They only appeared when customers started to use the system.

What to watch for (technical checks):

- Hardcoded values in legacy logic (e.g., if store = ‘001’, apply discount)

- Fields that derive from runtime calculations

- Category or enum values that don’t map 1:1

Data import and system load

Once the data is transformed, it is loaded, often in bulk. This is where structural assumptions can fail. If validation and dependency ordering are not strict, records can be lost, duplicated, or corrupted without notice.

What breaks:

In legacy data migration, product attributes from older systems may be localized and reused. When merged into a unified model, ID collisions can cause products to display incorrectly across markets. Each of these issues can lead to data inconsistencies and sync problems down the line. In practice, this means data loads may “succeed” while quietly introducing inconsistencies that only become apparent when they disrupt production processes.

We observed this during a catalog optimization for a global healthcare manufacturer. Legacy product attributes were localized and reused. When merged into a unified global model, ID collisions caused products to display incorrectly across markets, affecting pricing, availability, and categorization.

What to watch for (technical checks):

- Entity loading order (e.g., categories → products → promotions)

- ID collisions (especially for reused SKUs or customer numbers)

- Loss of audit trails or timestamps in overwrite scenarios

Parallel operation (coexistence period)

To reduce risk, many teams choose to run the old and new systems side by side for a period. This coexistence phase introduces a different set of integrity risks related to synchronization, consistency, and control over the “source of truth.”

What breaks:

Sometimes, data changes in one system don’t make it to the other, especially when real-time syncing fails under heavy load or doesn’t cover all fields. Users might update records in both systems without realizing it, which can create unnoticed gaps. Over time, these gaps can mess with important things like pricing, stock, or customer status.

During a front-end migration for a large retail chain, both the old JSP site and the new Spartacus front-end were running side by side. But customer profile updates, like address changes or loyalty status updates, didn’t always sync properly between the two. This caused personalization to break down and led to rewards being calculated incorrectly. Without real-time sync and a clear “source of truth,” it was tough to keep the customer data consistent.

What to watch for (technical checks):

- Are you writing to both systems during coexistence?

- What’s the lag between updates across systems?

- Can users place orders or update data in both environments?

Cutover and decommissioning

The final step is fully launching the new system and shutting down the old one. This is when unresolved integrity issues come to light. This stage often has no easy way to revert changes, which is why you should do your best to spot the issues earlier.

What breaks:

Scripts written for the cutover may change records in ways that cannot be reversed. Monitoring might not be strong enough to spot issues under real user traffic. If problems weren’t identified during the parallel operation, they now appear in production, where failure is most costly.

While migrating infrastructure to Amazon EKS for a global financial platform, the technical switch went smoothly. However, due to subtle misconfigurations in URL routing, several downstream integrations failed quietly in production. Because rollback mechanisms were available, the team was able to revert and fix the issue before wider impact.

What to watch for (technical checks):

- Cutover scripts that alter records permanently

- Final consistency checks across key entities (orders, stock, pricing)

- Rollback plan and clear ownership in case of an incident

Want to learn how we handle complex system migrations without sacrificing data integrity?

Let’s talk!Common Mistakes That Compromise Data Integrity

Even in well-designed environments, data integrity issues often come up during migration. This happens because of hidden semantic mismatches, undocumented logic dependencies, and different ways of interpreting data across systems. Here are the common failure patterns we’ve seen in production, along with structural methods to address them.

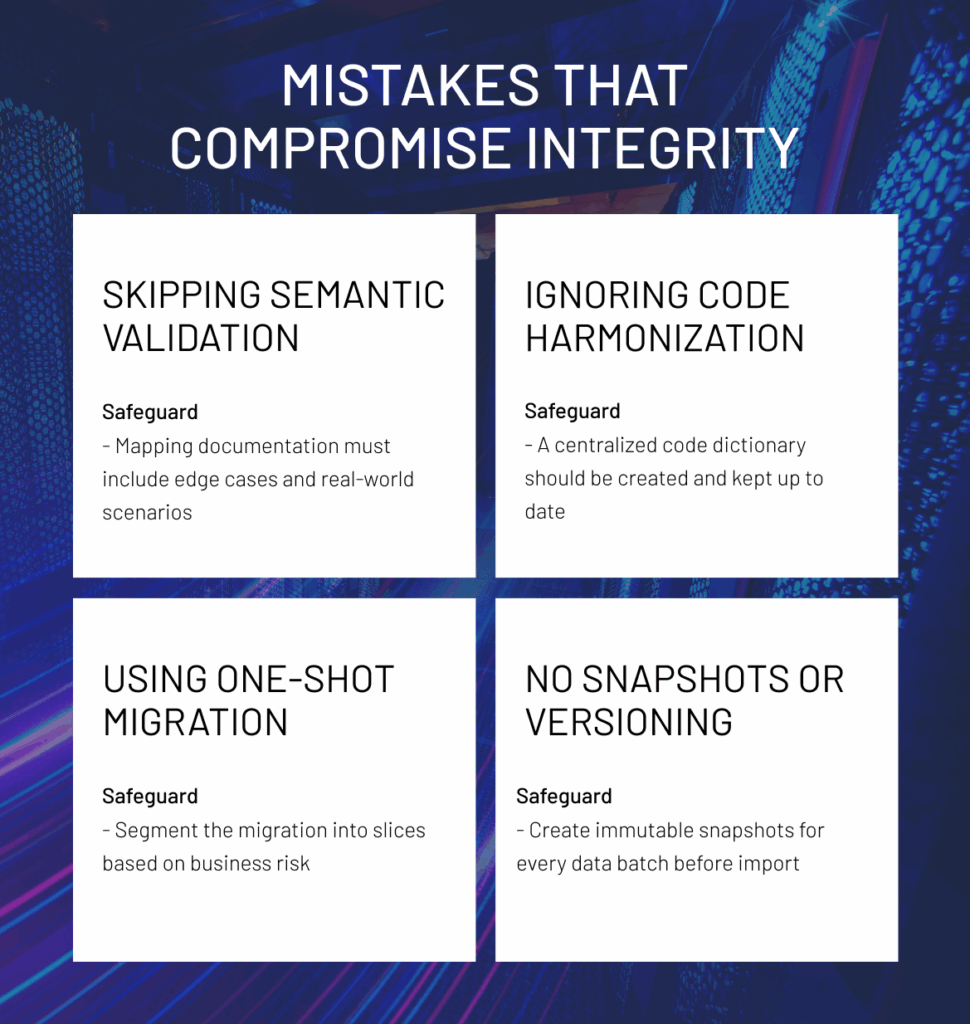

Skipping semantic validation

When two fields look the same, it’s easy to think they are the same. That’s where things start to go wrong.

In many migrations, teams often match fields based on name and format without checking their actual function. For example, a value like “0” might mean “inactive” in one system and “active” in another. These differences don’t appear in validation scripts, but they can cause major problems in business logic.

During a front-end replatforming for a global beauty brand, CMS fields had the same names in each region. However, each region had different rules for showing discounts and banners. After the migration, customers saw outdated promotions because the underlying behavior changed, even though the field structure remained unchanged.

Recommended safeguards:

-

Field mapping should consider behavioral logic

Mapping should show functional similarity, not just schema compatibility.

-

Mapping documentation must include edge cases and real-world scenarios

This means recording value ranges, conditional logic, dependencies, and any exceptions that change how data is processed or understood.

-

Simulation tests using past data help confirm that the meaning is correct

By running previous transactions or user actions through the new system, teams can spot any mismatches in behavior before moving to production.

Ignoring code harmonization

Some mismatches don’t break the system. But they lead to misclassified data, incorrect prices, and broken analytics pipelines.

To avoid this class of failure:

-

Alignment should start early in the migration process.

Code sets, such as region identifiers, customer types, or product categories, must be reviewed across all integrated systems. Audits should identify any inconsistencies or overlaps.

-

A centralized code dictionary should be created and kept up to date.

This dictionary acts as the single source of truth for all shared identifiers. It reduces confusion and prevents hidden logic failures later on.

-

If one-to-one mapping isn't possible, add a translation layer between systems.

This will ensure that data flows correctly, even when codes differ, without making structural changes in either the source or target environments.

Ignoring code harmonization

Big Bang migrations may seem like a smart move to minimize coordination effort. But this migration type assumes everything works perfectly: schema alignment, data integrity, real-world load. They rarely deliver on that assumption.

We’ve supported teams that planned for a full cutover in one deployment. In the best cases, they had parallel export logs that caught issues mid-transition: duplicate loyalty records, desynced inventory, orders stuck in inconsistent states. In the worst cases, they had no rollback and spent days triaging production issues that could have been caught with incremental validation.

Better patterns working in practice:

-

Segment the migration into slices based on business risk

It can include region, product line, or entity type. This allows for targeted validation and limits the blast radius of potential issues. This approach also enables teams to prioritize high-impact areas and allocate testing resources more effectively.

-

Continuously compare live data outputs from both legacy and new systems

During the coexistence period, matching record counts, value distributions, and transaction flows in real time helps detect discrepancies before users are affected.

-

Insert consistency checks between each migration phase

These should include schema validation, referential integrity tests, and business rule assertions to prevent unresolved drift from propagating downstream.

No snapshots or versioning

We’ve seen migrations that push data directly into production without any record of what came before. If something gets corrupted, there’s no baseline to recover from, and no version history to trace the problem back. That’s operationally unsustainable.

Snapshotting and metadata versioning, in turn, allow teams to isolate problems and revert small segments without bringing the system down.

Build your rollback strategy around these fundamentals:

-

Create immutable snapshots for every data batch before import.

These snapshots provide a reliable recovery point in case of corruption, mismatched mappings, or failed dependencies during processing.

-

Tag each record or batch with specific metadata

It should include the source system, timestamp, and migration tool version. This way, you enable precise traceability and simplify root cause analysis when issues arise.

-

Implement reversion tooling that allows partial rollbacks.

Full system resets are often unnecessary and disruptive, while targeted rollback by batch, entity type, or time range is more efficient and reduces downtime.

Check out our whitepaper on building scalable retrieval in complex stacks. It covers our approach to managing snapshot logic, rollback architecture, and high-volume validation during live migrations.

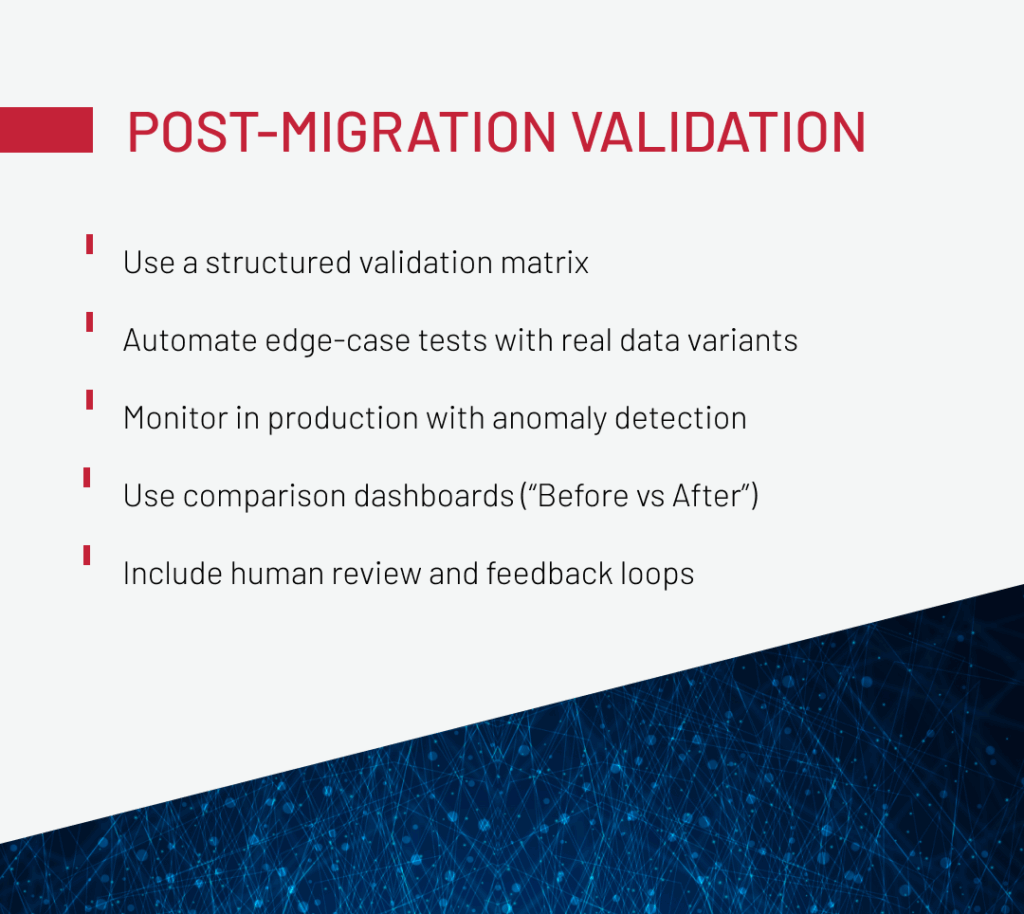

Validating Integrity Post-Migration

One more tempting misconception, one that can cause problems if not looked at closely, is believing that a clean migration log guarantees data integrity. In reality, you still need to confirm that everything works well in real-world conditions. This is when actual users and business logic begin interacting with the new system, and that’s when you can truly see if all the pieces are working as they should.

Use a structured validation matrix

A validation matrix helps verify data across field values, relationships, business logic, and UI presentation.

A structured validation matrix is key to ensuring data integrity. For example, during a migration for a global beauty retailer, customer records were validated by testing how unique and non-unique IDs behaved across legacy and new systems. In another case involving a regional omnichannel brand, merged logs from both platforms during a parallel run ensured order continuity and helped isolate integrity issues before final cutover.

Automate edge-case tests with real data variants

Synthetic test cases catch structure-level errors, but edge-case failures appear only when simulating actual user behavior. Test personas built from anonymized behavioral logs can simulate flows like partial returns, delayed promotions, or loyalty tier downgrades. Comparing outcomes in the new system with historical baselines exposes logic drift.

In a migration for a health and beauty brand we worked with, loyalty point mismatches only surfaced during a specific simulation. The issue appeared when a user added products to the cart just before a time-based promotion became active, which is something standard QA never covered. Tools like Postman, Selenium, or Groovy scripts help automate these flows for continuous validation.

Monitor in production with anomaly detection

Some issues only show up under real load, and that’s why real-time monitoring right after go-live is key. For example, spikes in loyalty points, missing attributes, or a sudden increase in support tickets can point to deeper issues.

In one case, after migrating to a streaming architecture for a global financial analytics platform, the team used embedded metrics and dashboards to catch schema mismatches and payload anomalies during high-volume exports.

Use comparison dashboards (“Before vs After”)

Parallel outputs from legacy and new systems allow for direct comparisons. Tracking record counts by entity and the distributions of values, such as order statuses or discount types, can show inconsistencies that testing may have missed.

Additionally, monitoring behavioral metrics, such as weekly signups or average order value, helps find issues that might have been overlooked. This is especially effective in high-volume environments where even small deviations can compound quickly.

Include human review and feedback loops

Automated checks catch structural issues, but business teams are better at spotting functional misalignment. In one post-launch scenario for a large consumer retailer, tax logic was technically correct but misapplied due to region-specific rules. Product bundles also broke due to pricing structure changes that weren’t reflected in QA scenarios.

To catch this type of drift, assign domain owners to review real records and run structured UAT with frontline teams: store associates, support agents, or logistics managers. Maintain feedback channels via Slack, flagging tools, or internal trackers to ensure issues are captured and resolved with context.

Final Thoughts

Data integrity during migration requires both precise engineering and strong strategic oversight. Safeguarding it means asking the right questions, building checkpoints into the process, and assigning ownership of complex domains to people who can manage them effectively.

A legacy system migration should keep data in the new environment just as correct, current, and trustworthy as before. With clear responsibilities and a well-structured approach, the migration becomes a smooth transition that also strengthens the foundation for long-term stability and business growth.

If you’re navigating a high-risk migration and want to ensure your data behaves as expected in the new environment, we’re happy to walk you through how we structure for integrity at scale. Reach out to us.

FAQ

-

What are the four types of data migration?

There are four primary types of data migration: storage, database, application, and business process. Each comes with its technical challenges, data formats, and integrity risks. Handling them effectively includes using approaches that preserve continuity, maintain compatibility, and ensure the data behaves as expected in the target system.

-

How to extract data from a legacy system?

To extract data from a legacy system, use ETL tools, database exports, or direct SQL queries. However, raw extraction isn’t enough. You must also document semantic meaning and validate dependencies to ensure the data still performs its intended function in the new environment.

-

What is legacy data transfer?

Legacy data transfer is the process of migrating historical or mission-critical data, structured or unstructured, from outdated systems into modern platforms. This includes preserving data relationships, cleaning inconsistencies, and maintaining business logic to ensure long-term usability and regulatory compliance post-migration.

With extensive experience in large-scale system transformations, Andreas Kozachenko, Head of Technology Strategy and Solutions at Expert Soft, provides strategic insights into maintaining data integrity during complex legacy system migrations.

New articles

See more

See more

See more

See more

See more