Reducing SAP Commerce Deployment Time: Tips and Best Practices

Feature delivery speed is a critical factor in enterprise ecommerce competitiveness, particularly in markets where timing directly affects revenue and customer adoption. Industry studies show that first-to-market brands capture up to 7.5% higher market share. This shows what happens when releases slip: it becomes harder to react to market opportunities before competitors do.

While deployment practices have matured to handle microservices and complex architectures, real-world enterprise projects often bring unique constraints. Consider a multi-regional SAP Commerce implementation supporting multiple European ecommerce brands from a single shared codebase. Each brand has independent release schedules and mixed technical implementations, from modern headless Spartacus to legacy JSP. Standard CI/CD logic encounters additional challenges: competing changes across brands, cross-brand bug risks, complex release coordination, and extensive testing requirements.

This article examines deployment practices and services developed while managing exactly this type of architecture — strategies for maintaining deployment velocity when architectural complexity is the default.

Quick Tips for Busy People

These are the most important points about managing deployment in complex SAP Commerce architectures.

- Architectural complexity demands adapted deployment processes: shared codebases and parallel teams require isolation mechanisms that standard linear flows don’t provide.

- Managing parallel development requires isolation and governance: separate release branches, feature toggles, and clear ownership prevent teams from breaking each other’s work.

- Resource allocation drives deployment speed: automated pipelines and risk-based testing focus effort where it matters most.

- Production environments expose what testing can’t: real data volumes and transaction loads reveal issues invisible in test environments.

- Deployment reliability supports operational efficiency: predictable processes reduce release-related disruption, though other factors still influence business outcomes.

Let’s start with how SAP Commerce cloud deployment works in standard configurations.

The Real Shape of SAP Commerce Deployment

Understanding how SAP Commerce deployment works in typical setups provides the baseline. From there, we can see exactly where more complex architectures diverge.

The baseline deployment flow

SAP Commerce Cloud operates on a Git-centric model where the repository serves as the single source of truth for all deployment activity. Every meaningful change, including code and versioned configuration, flows through version control first. The Cloud Portal acts as the build and deployment orchestrator, automatically executing builds, running unit tests, and preparing artifacts without manual intervention.

The environment chain follows a familiar pattern:

- Dev environment, where development happens, automated tests run, and teams validate changes before they move forward.

- UAT for regression testing and business verification.

- Production — the live environment where customers interact with the platform, receiving validated releases deployed through rolling updates.

Changes move linearly through this chain. A commit triggers an automatic build, successful builds deploy to dev, passing dev tests enable scheduled UAT deployment, and validated UAT artifacts reach production. Rolling updates handle the actual production deployment, gradually replacing instances to keep the platform operational throughout the process.

The entire model assumes a unified system shipping linear releases. When architectures involve multiple independent teams with parallel release streams and competing priorities, the linear model no longer holds.

Specifics of deployment in the project with the shared codebase

When a single organization operates multiple ecommerce brands, the traditional approach is for each brand to run on its own separate platform. Some companies take a different approach, running multiple brands on a shared codebase. We at Expert Soft are working on one SAP Commerce implementation supporting multiple health and beauty retail brands across different European countries. Each brand serves distinct markets with unique products, but they leverage a shared technical foundation.

The business rationale makes sense: build core ecommerce functionality once, let each brand customize it for its specifics, and new brands can leverage tested code and feature implementations, adapting them to their specific requirements instead of building from scratch.

This efficiency comes with deployment complexity. Code entering the shared master can break all downstream brands when they pull updates. Technical maturity varies across brands: some migrated to headless Spartacus while others still run on legacy JSP architecture. A change that works perfectly for one brand’s architecture might break another’s older implementation.

The deployment process for this architecture layers complexity onto the baseline SAP Commerce flow:

-

Independent release streams through stabilization branches:

each business unit (BU) creates its own stabilization branch to isolate and test the changes during the 30-day release cycle, ensuring that ongoing development from other teams doesn't interfere with their path to production.

-

Parallel build pipelines:

the standard SAP Commerce build process runs independently for each active branch, allowing multiple business units to build and validate their changes simultaneously without blocking each other's development cycles.

-

UAT as a major cross-BU defect discovery layer:

daily deployments expose most cross-BU issues through business user testing of actual workflows.

-

Parallel production releases:

multiple business units can deploy independently to production after UAT validation, rather than sequential releases.

-

Hotfix branches with abbreviated cycles:

critical bugs bypass normal stabilization through emergency branches that go straight to UAT, then production, then merge back upstream.

-

Configuration as code:

feature toggles and settings follow the same Git branching and deployment pipeline as application code.

This creates a multi-dimensional deployment process with parallel release streams, each with independent testing cycles and production schedules.

Our whitepaper covers strategies for building scalable data retrieval when your stack spans multiple platforms, legacy systems, and modern architectures.

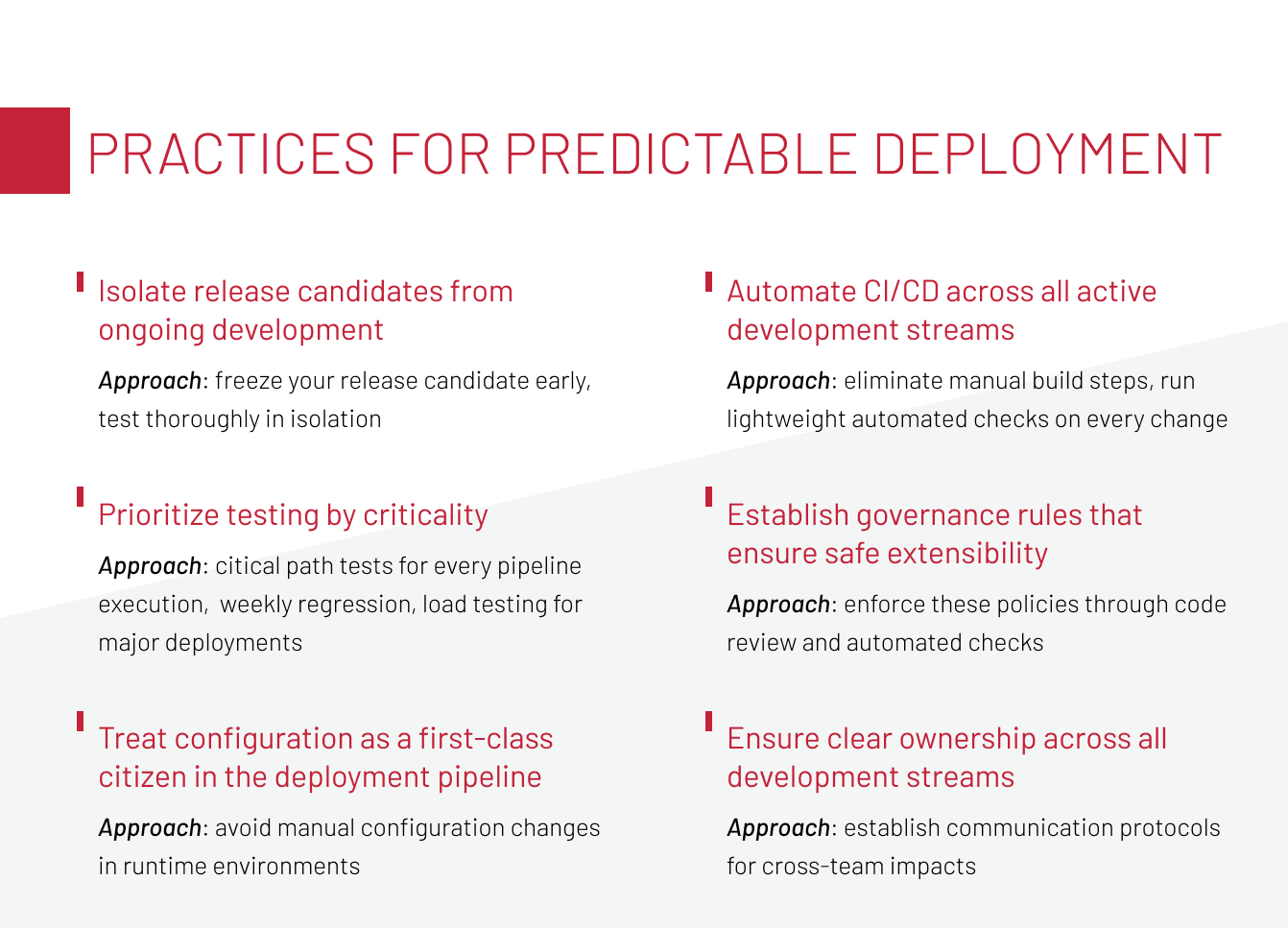

DownloadPractices for Predictable SAP Commerce Deployment

The complexity we just outlined demands structure. These practices emerged from managing deployment across shared codebases, but their value extends beyond that specific architecture. Whether you’re managing multiple teams, frequent releases, or complex dependencies, these practices can help you reduce deployment uncertainty.

Isolate release candidates from ongoing development

Active development never stops, but releases need stability. When new commits continuously flow into your release branch, you’re chasing a moving target. Testing reveals a bug, you fix it, but by then three new features have merged in, each potentially introducing new issues. Release dates slip because the scope keeps expanding.

-

In our project

teams create independent stabilization branches from the shared codebase once a month. During the 30-day stabilization window, only release-relevant changes enter that branch without any external updates from other business units. This isolation reveals hidden bugs introduced by parallel development and allows thorough test cycles. Even with multiple teams working simultaneously, the dedicated release stream keeps dates manageable and outcomes predictable.

-

Practical approach

freeze your release candidate early, test thoroughly in isolation, and only merge back to master when confidence is high.

Prioritize testing by criticality to accelerate deployments

Running your entire test suite on every change creates delays. Developers wait hours for feedback while comprehensive regression tests validate edge cases that rarely break. The risk is burning time and capacity on low-priority scenarios while slowing feedback loops that developers actually need.

-

In our project

critical scenarios like product search, cart additions, and checkout run after every merge. These "happy path" tests cover the flows that are critical for business operations. Extended functional checks, like gift workflows, alternative payment methods, and large product volumes, run less frequently. Performance testing under high load happens weekly or bi-weekly. Before peak sales events like Black Friday, testing intensifies with stricter code freezes and mandatory load testing.

-

Practical approach

map your test suite to business impact. Automate critical path tests to run as part of every pipeline execution, schedule comprehensive regression for weekly or pre-release cycles, and reserve load testing for major deployments or scaling for seasonal peaks.

Treat configuration as a first-class citizen in the deployment pipeline

As code that gets reviewed, tested, and versioned, configuration should follow the same discipline. Without version control and testing, configuration changes create risk: manual updates conflict with deployments, environments drift out of sync, and debugging becomes harder when changes aren’t tracked. Treating configuration as code, when you store it in Git and run it through the same CI/CD pipelines, eliminates drift and ensures consistent behavior across environments.

-

In our project

critical scenarios like product search, cart additions, and checkout run after every merge. These "happy path" tests cover the flows that are critical for business operations. Extended functional checks, like gift workflows, alternative payment methods, and large product volumes, run less frequently. Performance testing under high load happens weekly or bi-weekly. Before peak sales events like Black Friday, testing intensifies with stricter code freezes and mandatory load testing.

-

Practical approach

avoid manual configuration changes in runtime environments. Use feature flags with safe defaults to control rollout across environments and brands.

Automate CI/CD across all active development streams

For predictable deployment, every change, regardless of branch, team, or release cycle, must flow through a unified, automated build and test pipeline. This eliminates human error, accelerates feedback loops, and ensures release stability even during high development activity. Automation becomes critical because manual processes simply don’t scale when multiple teams work in parallel or release cycles overlap.

-

In our project

every change entering an active development branch triggers a standardized CI pipeline that builds the application, runs lightweight in-memory tests, and deploys the result to the DEV environment when checks pass. This consistency keeps parallel development streams predictable without teams blocking each other.

-

Practical approach

configure your CI/CD to apply a consistent baseline pipeline across all active branches. Eliminate manual build steps, run lightweight automated checks on every change, and automatically deploy validated builds to development environments for further testing.

Establish governance rules that ensure safe extensibility

Predictable deployment requires mechanisms that let you introduce changes safely, in isolation, and without breaking existing functionality. Without clear rules for safe extensibility, teams either move slowly out of fear or break things regularly. This becomes critical in shared codebase environments where one team’s feature can impact another team’s production deployment, as governance rules ensure changes remain isolated and reversible.

-

In our project

any new feature must be developed in a disabled state by default and include documentation enabling other business units to activate it safely. Feature toggles serve as isolation. New functionality exists in the codebase, but it causes zero impact until explicitly enabled. Before code reaches the shared master, it passes automated testing.

-

Practical approach

define and document clear governance policies for extending the platform, including rules for feature isolation, default states, rollback expectations, and ownership. Enforce these policies through code review and automated checks to ensure changes remain safe, isolated, and reversible across teams.

Ensure clear ownership across all development streams

Every change needs a clear owner — someone responsible for testing it, monitoring it after release, and fixing issues when they surface. Without explicit ownership, incidents turn into investigation cycles: teams debate who introduced the problem while customers experience issues and resolution gets delayed. In shared codebase environments, ownership becomes even more critical. When one team’s changes can break another’s deployment, clear accountability ensures fast communication, quick fixes, and collaborative problem-solving instead of blame and confusion.

-

In our project

teams operate under strict governance principles, one of which implies that changes must not break other business units' functionality. When cross-BU issues occur, established escalation protocols define communication channels and resolution ownership. Because affected teams typically discover issues during their own testing cycles, resolution often becomes collaborative, with both parties contributing to the solution based on their respective contexts.

-

Practical approach

define ownership boundaries clearly for each development stream, establish communication protocols for cross-team impacts, and create accountability for downstream effects.

Download our playbook covering assessment, planning, execution, and post-migration optimization for SAP Commerce Cloud migrations.

How to Reduce Downtime Risks in SAP Commerce

Downtime in SAP Commerce falls into two categories. The first happens during deployment itself, particularly when database changes are involved. For example, a query that executed cleanly on UAT locks up when it hits millions of customer records and active transactions.

The second category is functional downtime, when critical functionality breaks, but the platform technically runs. For example, when search functionality fails, the business may decide to temporarily create an artificial downtime, rather than leaving end-users to deal with partially working functionality.

Both downtime categories stem from the same root cause: production environments behave differently from test environments. The sheer volume of data and concurrent transactions creates conditions you can’t replicate in testing. This gap between test and production environments makes extended testing critical.

UAT environments run multi-week test cycles focusing on the 90% most critical user scenarios. Load testing supplements this functional testing, though it can happen less frequently. Effective load testing requires:

- Testing baseline performance: test system speed under normal conditions before applying a load to establish a performance baseline.

- Building accurate load profiles: model realistic traffic patterns, including peak loads, sustained loads, and variable loads that reflect actual user behavior.

- Following iterative load increases: start with 10 users, scale to 100, then to 10,000+, catching failures at each stage rather than overwhelming the system immediately.

- Monitoring asynchronous operations: track background tasks like message queues that can silently build backlogs invisible in API response times.

- Testing beyond surface metrics: examine database connection pools, query execution time, and indexing efficiency, not just overall response times.

One highly effective, but often expensive, way to reduce downtime risk is to use a pre-production environment that closely mirrors production in scale and configuration. By operating under near-real conditions, such environments expose many of the issues that typically surface only in production. However, not every project can afford this approach.

In SAP Commerce Cloud, for example, provisioning and maintaining additional environments come at a high cost, making pre-production a deliberate investment. The decision ultimately comes down to a trade-off: does the potential cost of downtime outweigh the cost of running a pre-production environment? The answer to this question can help guide the decision.

Our team has deep experience with complex architectures and shared codebase challenges. Get in touch to discuss how we can help improve your deployment predictability and reduce time-to-market.

Let’s TalkHow Smarter Deployment Accelerates Ecommerce Growth

Predictable deployment cycles support faster time-to-market. When teams can reliably estimate deployment timelines, businesses gain flexibility in planning feature releases around seasonal windows, promotional campaigns, or competitive responses. This doesn’t guarantee market success as product-market fit, pricing, and execution all matter, but unpredictable deployments remove timing as a controllable variable. Besides this, predictable deployment:

-

Reduces the release-related operational costs.

Fewer emergency hotfixes cut unplanned development work, while controlled rollouts reduce blast radius and speed up recovery. As deployments become predictable, release-related support load decreases and teams regain capacity for new features.

-

Reduces deployment-related downtime risk.

Downtime directly impacts conversion rates and order volume. Stable deployments during peak periods preserve the revenue spikes that define quarterly performance.

-

Increases team operational efficiency.

Stable and predictable deployment processes reduce unplanned work, minimize release-related interruptions, and shorten recovery time when issues occur. As teams spend less time firefighting deployments, they can focus more consistently on delivering new features and improving existing functionality.

Let’s Summarize

SAP Commerce deployment in complex architectures requires practices that go beyond standard guidance. When multiple teams work in parallel with independent release schedules, or when architectural constraints demand cross-system coordination, linear CI/CD models break down. The practices outlined in this article address these specific complexities. They reduce deployment time, minimize deployment-related downtime risk, and make releases predictable even when architectural simplicity isn’t an option.

Managing deployment in complex SAP Commerce architectures raises questions standard documentation doesn’t answer. Our team has worked on these types of implementations and can help optimize your deployment process for reliability and speed. Let’s talk!

At Expert Soft, Andreas Kozachenko oversees technology strategy for complex SAP Commerce initiatives. His background in large-scale ecommerce delivery informs practical recommendations for reducing deployment time without sacrificing system stability or quality.

New articles

See more

See more

See more

See more

See more