How to Calculate Your True Database Costs

It would be convenient if managing database costs meant only watching your cloud bills, license usage, and backup snapshots. In reality, those are just part of the picture. The bulk of database spend comes from everything around the database: infrastructure, integrations, disaster recovery, and internal engineering.

Findings show that global spending on compute and storage infrastructure for cloud deployments reached $31.8 billion in 2023, and much of that growth came from shared infrastructure and operational overhead rather than core database engines.

Amid this rising infrastructure and operational complexity, businesses often miss the less visible cost contributors. Working with high-load enterprise systems, we at Expert Soft have seen how these costs spiral.

In this guide, together with Expert Soft engineers, we’ve compiled a list of often-overlooked database cost drivers, bringing clarity to what really drives up spend and how to keep it under control.

Quick Tips for Busy People

Short on time? Here are 7 high-impact database pricing factors to watch for as your system scales:

- Production-scale staging: full-size staging environments can double or triple your storage and compute spend.

- Active-active disaster recovery: always-on DR setups effectively duplicate your infrastructure even when idle.

- Overprovisioned throughput: some services, such as Cosmos DB, use provisioned RU/s (Request Units per second), and you’re billed even if usage doesn’t reach provisioned capacity.

- BI/ETL query overhead: poorly timed or designed data integrations can bypass caching and spike IOPS.

- Legacy migration effort: updating old database versions can cost weeks of engineering time in schema rewrites and ORM refactors.

- Inefficient queries: patterns like N+1 inflate CPU and latency, especially painful under high load.

- Monitoring sprawl: logging, metrics, and audit trails grow fast, and retention mismanagement can burn storage budgets.

Let’s break down where these costs come from and how to keep them under control.

Main Cost Sources and Their Hidden Multipliers

How much does a database cost? Answering that requires looking beyond the obvious expenses. Understanding both the evident and the less obvious cost drivers leads to more accurate budgeting and more effective optimization.

Evident costs

Licensing and cloud database fees are familiar territory. Your team likely tracks what you pay for Oracle, SQL Server, or managed services like Amazon RDS. Managed platforms add another layer, charging separately for compute, storage, data transfer, backups, and snapshot exports. These charges are standard for most systems, and without strict governance, they quickly grow into major cost drivers.

Based on data from Cloudchipr, Amazon Web Services, CloudZero, and others, a typical breakdown of the main database cost drivers looks roughly like this:

- Compute resources: ~35–45%

- Storage costs: ~20–25%

- Backup and snapshot costs: ~10–15%

- Data transfer costs: ~5–10%

- Licensing costs: ~10–15%

- Operational and management costs: ~5–10%

The actual figures vary depending on workload, architecture, and vendor agreements.
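To turn these shares into rough dollar figures, a small helper can bracket each category for a given monthly bill. This is a minimal sketch: the ranges are copied from the list above, the category keys are our own labels, and the $10,000 bill in the usage line is a hypothetical input, not a benchmark.

```python
# (low, high) share of the total database bill per category, from the list above.
# Rough industry ranges, not vendor quotes.
COST_SHARES = {
    "compute": (0.35, 0.45),
    "storage": (0.20, 0.25),
    "backups_snapshots": (0.10, 0.15),
    "data_transfer": (0.05, 0.10),
    "licensing": (0.10, 0.15),
    "operations": (0.05, 0.10),
}


def estimate_breakdown(monthly_bill: float) -> dict[str, tuple[float, float]]:
    """Return a (low, high) dollar range per category for a given monthly bill."""
    return {
        name: (round(monthly_bill * low, 2), round(monthly_bill * high, 2))
        for name, (low, high) in COST_SHARES.items()
    }


if __name__ == "__main__":
    # Hypothetical $10,000/month database bill.
    for name, (low, high) in estimate_breakdown(10_000).items():
        print(f"{name:>18}: ${low:,.0f} to ${high:,.0f}")
```

Note that the low ends of the ranges sum to 85% and the high ends to 120%, so the ranges bracket a realistic split rather than partitioning the bill exactly.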

Less obvious costs

Infrastructure is an evident cost factor, but it’s not the only one: a substantial portion of spending comes from day-to-day engineering work. DevOps engineers or DBAs can spend dozens of hours each month tuning slow queries, chasing down deadlocks, and keeping monitoring tools in check. Legacy migrations, for example from PostgreSQL 9 to 15, can sometimes take hundreds of hours of rewriting logic and validating schema changes.
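Because engineering time rarely appears on an invoice, it helps to convert it into the same monthly units as the cloud bill. The sketch below assumes a single blended hourly rate and amortizes a one-off migration over a fixed number of months; the class and function names, the rate, and the hour counts in the usage note are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EngineeringEffort:
    """Recurring database work in one month (all values are hypothetical inputs)."""
    query_tuning_hours: float
    incident_hours: float
    monitoring_upkeep_hours: float


def monthly_engineering_cost(effort: EngineeringEffort, hourly_rate: float) -> float:
    """Convert recurring database engineering hours into a monthly dollar figure."""
    total_hours = (
        effort.query_tuning_hours
        + effort.incident_hours
        + effort.monitoring_upkeep_hours
    )
    return total_hours * hourly_rate


def migration_cost(hours: float, hourly_rate: float, months_amortized: int = 12) -> float:
    """Spread a one-off migration (e.g., a major version upgrade) over several months."""
    return hours * hourly_rate / months_amortized
```

For instance, 40 hours of monthly tuning, incident, and monitoring work at a hypothetical $80/hour adds $3,200 per month, and a 300-hour migration amortized over 12 months adds another $2,000 per month.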

At scale, even one inefficient query pattern, such as N+1 queries, can introduce major load. At 1,000 RPS, ~40 ms of added latency translates to 30% CPU usage on a c5.large, all from a single unoptimized line of code. These inefficiencies directly inflate compute, IOPS, and memory costs.
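The N+1 pattern is easy to miss in application code because each individual query is fast. The sketch below uses Python’s built-in `sqlite3` module and a hypothetical orders/customers schema to count how many statements each approach sends to the database; `set_trace_callback` is a standard `sqlite3` hook invoked for each SQL statement the backend executes.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """In-memory database with 50 customers and 100 orders (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(i, f"customer-{i}") for i in range(1, 51)])
    conn.executemany("INSERT INTO orders VALUES (?, ?)",
                     [(i, i % 50 + 1) for i in range(1, 101)])
    conn.commit()
    return conn


def orders_n_plus_one(conn: sqlite3.Connection) -> list[tuple[int, str]]:
    """One query for the orders, then one more query per order (the "+N")."""
    orders = conn.execute("SELECT id, customer_id FROM orders ORDER BY id").fetchall()
    result = []
    for order_id, customer_id in orders:
        (name,) = conn.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        result.append((order_id, name))
    return result


def orders_joined(conn: sqlite3.Connection) -> list[tuple[int, str]]:
    """The same data in a single round trip."""
    return conn.execute(
        "SELECT o.id, c.name FROM orders AS o "
        "JOIN customers AS c ON c.id = o.customer_id ORDER BY o.id"
    ).fetchall()


def count_statements(conn: sqlite3.Connection, fn) -> tuple[list, int]:
    """Run fn(conn) and count the SQL statements it executed."""
    executed: list[str] = []
    conn.set_trace_callback(executed.append)
    try:
        rows = fn(conn)
    finally:
        conn.set_trace_callback(None)
    return rows, len(executed)
```

With 100 orders, the N+1 version issues 101 statements where the join issues one. If a page triggered this pattern on every request at 1,000 RPS, that would mean roughly 101,000 statements per second instead of 1,000, which is where the extra CPU and IOPS come from.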

Operational Costs – What You Don’t See on the First Bill

Most long-term costs emerge well after the initial setup. Below, we break down the operational costs that are easy to overlook but impossible to ignore once your system grows.

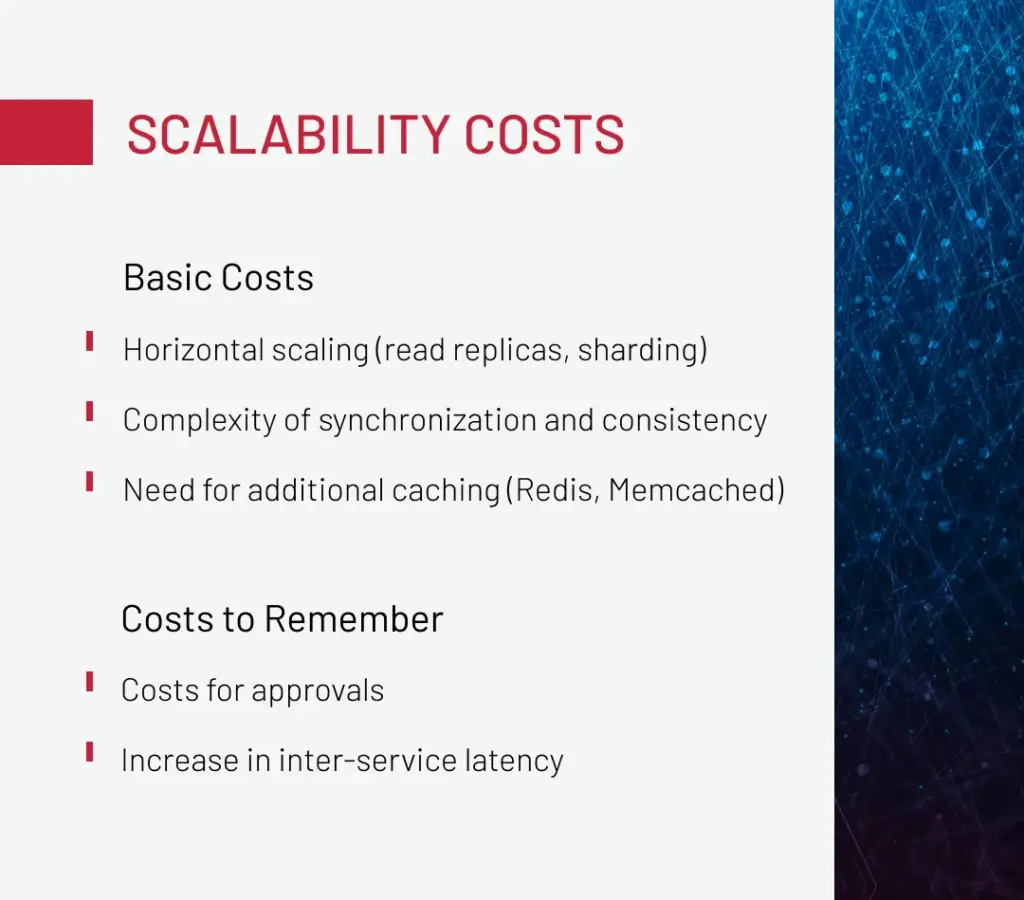

Scalability: The cost of growth

Scaling a database infrastructure is rarely as simple as adding a few read replicas. Even a scalability change that looks well designed at first glance can end up introducing workarounds, duplicated logic, and new dependencies.

Scaling horizontally with read replicas or sharding may reduce read pressure, but it also adds coordination overhead, consistency challenges, and region-specific latency issues. To offset these, teams layer in Redis or Memcached, which reduces database load but introduces additional infrastructure to monitor, tune, and scale.
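A cache layer such as Redis or Memcached is usually wired in with the cache-aside pattern. The sketch below uses an in-process dictionary as a stand-in so it runs without external services; the class name, TTL, and loader function are illustrative assumptions, not any library’s API.

```python
import time
from typing import Any, Callable


class CacheAside:
    """Minimal cache-aside layer with per-entry TTL (in-process stand-in for Redis/Memcached).

    Reads check the cache first and only fall through to the database
    loader on a miss or after the entry expires.
    """

    def __init__(self, loader: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}
        self.db_reads = 0  # how many requests actually reached the database

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database load
        self.db_reads += 1
        value = self._loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Must be called on every write path, or readers keep seeing stale data."""
        self._entries.pop(key, None)
```

The `db_reads` counter shows the benefit, and `invalidate` shows the hidden cost: every write path now has to remember to call it, and that obligation grows with every service that shares the data.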

Each added layer brings indirect costs: more alerts, more engineering time, more edge cases, and none of it is free.

The biggest expenses, however, are often the hidden ones. For example, when migrating from a monolithic database to a sharded setup, you need to introduce a new control layer to manage shard maps, schema versioning, and failover logic.
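The control layer mentioned above can start as small as a shard map. This is a hypothetical sketch, not a production router: it hashes tenant IDs to shards with a stable hash, lets individual tenants be pinned elsewhere, tracks each shard’s schema version, and repoints tenants during a failover. Real systems keep this state in a replicated store and add locking, rebalancing, and health checks.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ShardMap:
    """Hypothetical control-layer state: tenant routing, schema versions, failover."""
    shards: list[str]
    overrides: dict[str, str] = field(default_factory=dict)   # pinned tenants
    schema_versions: dict[str, int] = field(default_factory=dict)

    def shard_for(self, tenant_id: str) -> str:
        if tenant_id in self.overrides:
            return self.overrides[tenant_id]
        # Stable hash: Python's built-in hash() of str is randomized per process.
        digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
        return self.shards[int.from_bytes(digest[:8], "big") % len(self.shards)]

    def pending_migrations(self, target_version: int) -> list[str]:
        """Shards that still need a schema migration to reach target_version."""
        return [s for s in self.shards if self.schema_versions.get(s, 0) < target_version]

    def fail_over(self, failed: str, replacement: str) -> None:
        """Repoint everything on a failed shard to its replacement, keeping its slot."""
        self.shards = [replacement if s == failed else s for s in self.shards]
        self.overrides = {
            tenant: replacement if shard == failed else shard
            for tenant, shard in self.overrides.items()
        }
        if failed in self.schema_versions:
            # Assumes the replacement was restored at the same schema version.
            self.schema_versions[replacement] = self.schema_versions.pop(failed)
```

Replacing a failed shard in place keeps its position in the list, so hashed tenants land on the replacement without being remapped across the other shards.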

As you transition to a microservice architecture, service-to-service communication increases, amplifying network latency and coordination overhead. At the same time, caching logic becomes harder to manage: invalidation now spans services and domains, making patterns like invalidate-by-query significantly more complex and error-prone. These challenges introduce real operational costs as systems scale.

One common pitfall is optimizing for reads and assuming that alone will carry the system. Replication helps with read-heavy workloads, but if your system is write-intensive (think logging, transactions, or order processing), read replicas won’t help. Writes still hit the primary node, and as volume grows, performance degrades fast.

Scaling writes means implementing partitioning, which demands a deeper re-architecture and significantly more engineering investment.
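The write-scaling problem is easier to see with a back-of-the-envelope model. The function below is an idealized sketch that assumes evenly distributed keys and ignores replication lag, hot partitions, and cross-partition transactions; it only contrasts how per-node write load behaves under the two strategies.

```python
def per_node_write_load(total_writes_per_sec: float, nodes: int, strategy: str) -> float:
    """Idealized writes/sec each node must apply.

    "replicas":   every write goes to the primary and is replayed on each replica,
                  so adding nodes never lowers per-node write load.
    "partitions": writes are split by key across independent primaries,
                  assuming an even key distribution.
    """
    if nodes < 1:
        raise ValueError("nodes must be >= 1")
    if strategy == "replicas":
        return total_writes_per_sec
    if strategy == "partitions":
        return total_writes_per_sec / nodes
    raise ValueError(f"unknown strategy: {strategy!r}")
```

At 12,000 writes per second, four nodes used as a primary plus read replicas still leave each node applying all 12,000 writes, while four hash partitions bring it down to about 3,000 per node.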


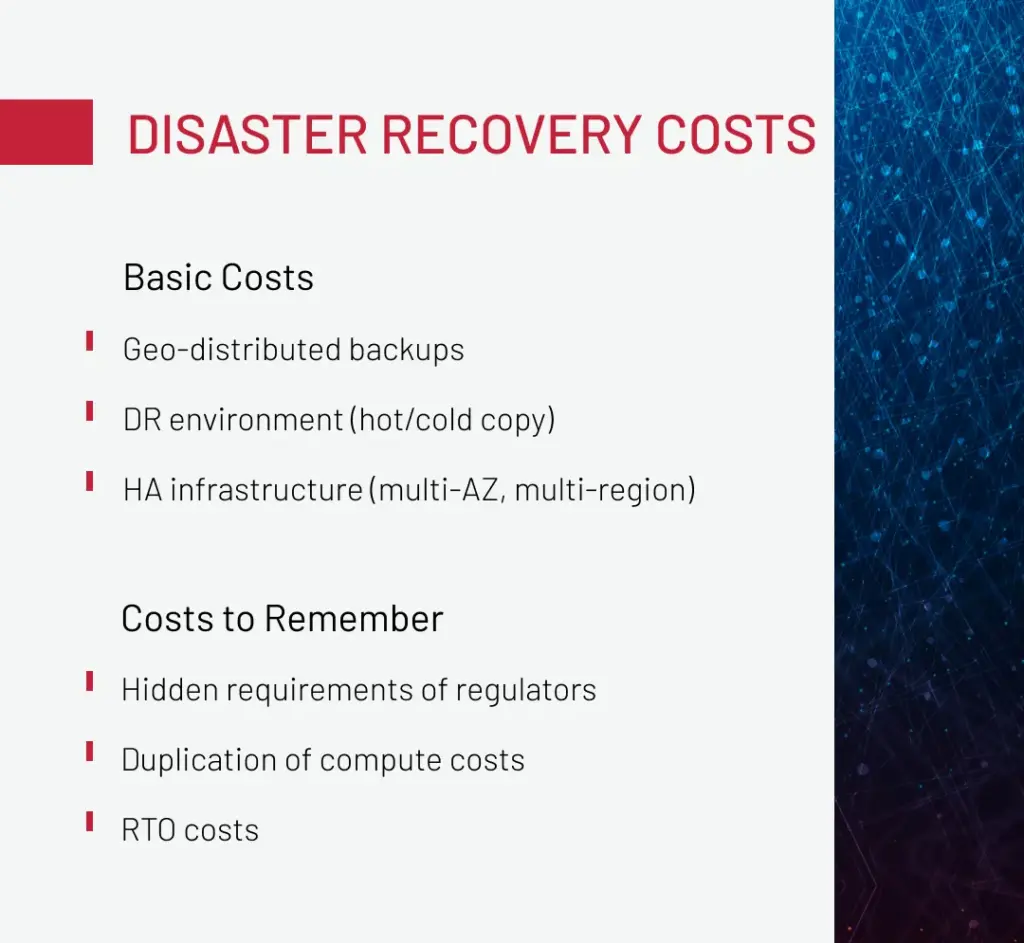

Disaster recovery costs

Disaster recovery (DR) isn’t limited to backups. It also includes replicated environments (whether cold or warm standby) and high-availability infrastructure spanning multiple availability zones or regions. But the cost drivers don’t stop there.

Many compliance frameworks, such as ISO/IEC 27001 or SOC 2, require routine restore tests and validation procedures. This adds engineering overhead through scripting, automation, and manual verification. Additionally, active-active setups mean you’re paying for a fully duplicated infrastructure: compute, storage, and networking, regardless of the actual load. In reality, you’re funding a second environment around the clock.
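A restore test does not have to be elaborate to be useful: back up, restore into an isolated target, and verify the result. The sketch below uses SQLite’s online backup API (`sqlite3.Connection.backup`, Python 3.7+) as a self-contained stand-in for a managed-database snapshot and validates only row counts. The function name and the choice of checks are assumptions; a real drill would restore the actual backup artifact and run deeper integrity checks.

```python
import sqlite3


def restore_test(source: sqlite3.Connection, tables: list[str]) -> dict[str, tuple[int, int]]:
    """Back up `source`, restore into a fresh database, and compare row counts.

    Returns {table: (source_rows, restored_rows)}; raises if any count differs.
    """
    restored = sqlite3.connect(":memory:")
    try:
        source.backup(restored)  # copy the committed state of the source database
        report = {}
        for table in tables:
            # Table names come from a fixed, trusted list; never interpolate user input.
            query = f"SELECT COUNT(*) FROM {table}"
            src_rows = source.execute(query).fetchone()[0]
            dst_rows = restored.execute(query).fetchone()[0]
            report[table] = (src_rows, dst_rows)
        mismatches = {t: c for t, c in report.items() if c[0] != c[1]}
        if mismatches:
            raise RuntimeError(f"restore validation failed: {mismatches}")
        return report
    finally:
        restored.close()
```

Scheduling a check like this and keeping its output gives auditors evidence of routine restore testing without a manual run each time.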

Finally, recovery time objectives (RTOs) are frequently underestimated as a cost factor. The faster you need to recover, the more expensive your setup becomes. A warm standby costs significantly more than a cold backup, but for many businesses, delayed recovery isn’t an option.
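The RTO trade-off can be made explicit by listing the DR tiers you’re willing to run and picking the cheapest one that still meets the required recovery time. The tier names mirror the options discussed above, but every RTO and cost value in this sketch is a placeholder to be replaced with your own estimates or vendor quotes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrTier:
    name: str
    rto_minutes: float   # typical time to restore service with this tier
    monthly_cost: float  # placeholder cost of keeping this tier ready


def cheapest_tier(tiers: list[DrTier], required_rto_minutes: float) -> DrTier:
    """Pick the lowest-cost tier whose RTO meets the business requirement."""
    eligible = [t for t in tiers if t.rto_minutes <= required_rto_minutes]
    if not eligible:
        raise ValueError("no tier meets the required RTO")
    return min(eligible, key=lambda t: t.monthly_cost)


# Placeholder numbers for illustration only.
TIERS = [
    DrTier("cold backup", rto_minutes=24 * 60, monthly_cost=300),
    DrTier("warm standby", rto_minutes=30, monthly_cost=1_800),
    DrTier("active-active", rto_minutes=1, monthly_cost=4_000),
]
```

With these placeholder figures, a 48-hour RTO is met by a cold backup, a one-hour RTO forces a warm standby, and a five-minute RTO leaves only active-active.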

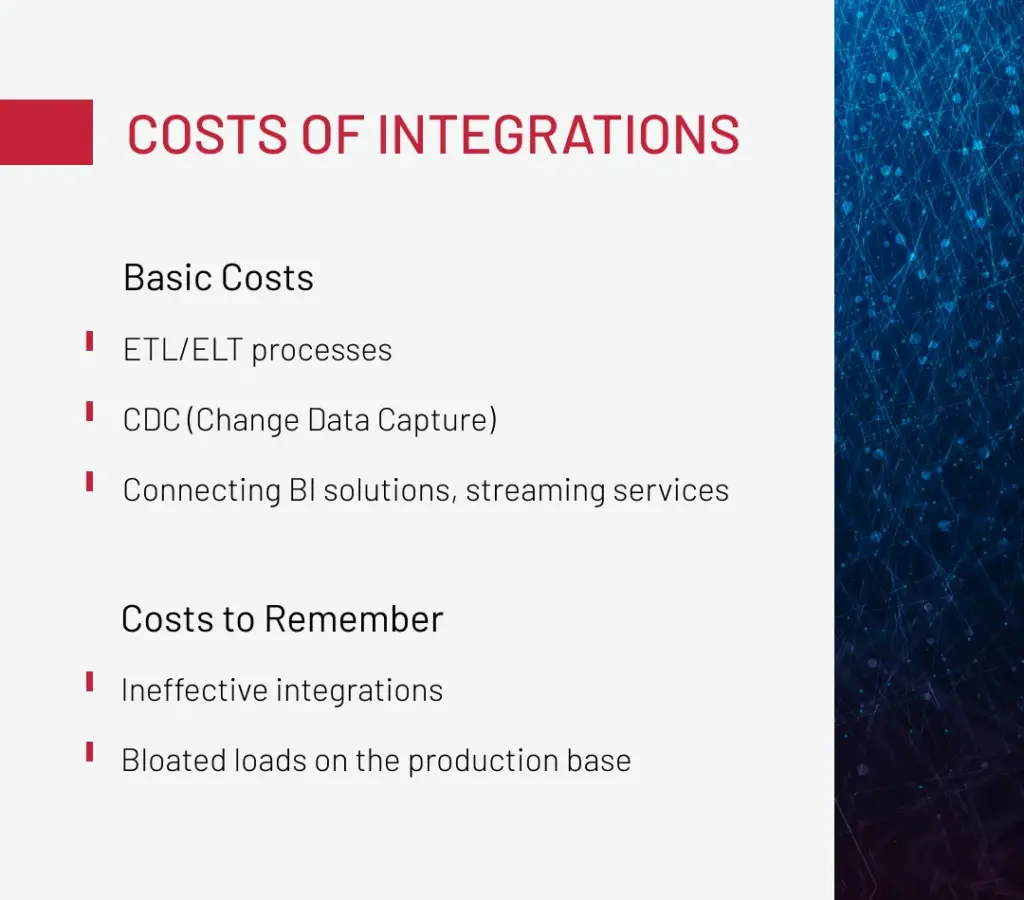

Costs of integrations

Ecommerce integrations are often treated as routine, but they quietly inflate infrastructure costs. On the surface, the direct expenses are clear: ETL/ELT pipelines, change data capture (CDC) processes, and connections to BI tools or streaming platforms.

When third-party systems connect to production databases via APIs or direct JDBC/ODBC connections, they often issue duplicate queries that bypass caching and increase IOPS. Additionally, when ETL jobs run during peak hours, they can cause query contention, latency spikes, and resource throttling. These problems are frequently misattributed to application performance issues.
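One low-effort mitigation for peak-hour contention is to gate heavy extracts on a time window (another is to point them at a read replica instead of the primary). This sketch assumes a fixed peak window in the database’s local time; the window boundaries and the function name are hypothetical and should come from your own traffic data.

```python
from datetime import datetime, time

# Hypothetical peak window in the database's local time.
PEAK_START = time(8, 0)
PEAK_END = time(22, 0)


def etl_allowed(now: datetime, peak_start: time = PEAK_START, peak_end: time = PEAK_END) -> bool:
    """Return True if a heavy ETL extract may run against production at `now`."""
    t = now.time()
    if peak_start <= peak_end:
        in_peak = peak_start <= t < peak_end
    else:
        # The window wraps past midnight, e.g. 20:00 to 02:00.
        in_peak = t >= peak_start or t < peak_end
    return not in_peak
```

A scheduler wrapper would call `etl_allowed` before starting a job and defer it otherwise; the wrap-around branch covers regions whose busiest hours cross midnight.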

Poorly designed integrations can also disrupt cache coherence. Even a single non-cacheable query pattern may invalidate large data sets, triggering unnecessary recomputation and adding further strain to the system.

Taken individually, these challenges may seem manageable, but together, they create a layered cost structure that scales faster than your system. That’s why understanding operational impact is just as critical as estimating infrastructure spend.

What Else to Consider When Calculating

As we’ve already mentioned, some cost factors don’t surface until your system is under real-world pressure. Here are a few more to keep in mind when calculating true database costs.

Data retention & archiving

Old logs, archived transactions, and historical change records tend to accumulate, especially in systems without TTL (time-to-live) policies. Even though this data is no longer actively used, it still incurs ongoing costs.

Cloud providers don’t distinguish between hot and cold data sitting on the same storage tier: you’re charged for storage volume and IOPS either way. When historical data isn’t offloaded or archived properly and stays in high-performance (primary) storage tiers, those costs can escalate fast.

That’s why you need clear data lifecycle policies: without them, inactive data adds weight to your infrastructure bill with no operational benefit.
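A lifecycle policy can be as simple as a scheduled job that moves old rows out of the hot table. The sketch below uses `sqlite3` and a hypothetical `events`/`events_archive` pair with ISO-8601 text timestamps; in a managed cloud setup, the archive would typically live in a cheaper tier such as object storage rather than in a second table of the same database.

```python
import sqlite3
from datetime import datetime, timedelta


def archive_older_than(conn: sqlite3.Connection, days: int, now: datetime) -> int:
    """Move rows older than `days` from events (hot) to events_archive (cold).

    Assumes created_at is stored as ISO-8601 text in one consistent format,
    so string comparison matches chronological order. Returns rows moved.
    """
    cutoff = (now - timedelta(days=days)).isoformat()
    with conn:  # one transaction: the copy and the delete commit or roll back together
        conn.execute(
            "INSERT INTO events_archive SELECT * FROM events WHERE created_at < ?",
            (cutoff,),
        )
        moved = conn.execute(
            "DELETE FROM events WHERE created_at < ?", (cutoff,)
        ).rowcount
    return moved
```

Running the copy and the delete in one transaction means a failure leaves the hot table untouched instead of losing rows.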

Observability

Monitoring tools like Prometheus, Datadog, or New Relic are essential for visibility, but they come with overhead of their own. The observability stack itself generates additional system load, especially when exporters collect detailed database metrics.

Beyond runtime overhead, observability brings data persistence requirements: storing slow query logs, audit trails, and performance metrics demands both storage space and careful retention management. Left unchecked, your monitoring setup can become one of the more expensive and most overlooked components of your database architecture.
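Retention decisions are easier to weigh once they’re expressed as steady-state storage: after the retention window fills, each new day of data displaces the oldest day, so the stored volume stops growing. The sketch below assumes a constant daily volume and flat per-GB prices; the hot/cold split and all prices in the usage note are hypothetical.

```python
def steady_state_gb(daily_gb: float, retention_days: int) -> float:
    """Volume held once the retention window is full (constant daily ingest assumed)."""
    return daily_gb * retention_days


def monthly_cost_single_tier(daily_gb: float, retention_days: int,
                             price_per_gb_month: float) -> float:
    """Everything retained in one storage tier."""
    return steady_state_gb(daily_gb, retention_days) * price_per_gb_month


def monthly_cost_tiered(daily_gb: float, hot_days: int, cold_days: int,
                        hot_price: float, cold_price: float) -> float:
    """Most recent hot_days in a fast tier, the following cold_days in a cheaper one."""
    return (steady_state_gb(daily_gb, hot_days) * hot_price
            + steady_state_gb(daily_gb, cold_days) * cold_price)
```

At 5 GB of logs and metrics per day, keeping a full year in a tier priced at a hypothetical $0.10/GB-month costs about $182.50 per month, while keeping 30 days hot and the remaining 335 days in a $0.01/GB-month tier costs about $31.75.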

Multiple environments

Test and staging environments often use full production-scale database dumps, and that’s where the cost multiplies. When each environment holds a complete copy of the production database, storage and compute costs can easily triple without adding real value.

We’ve seen setups where dev, staging, and prod each ran identical instances, with PostgreSQL replicas stored across separate cloud subscriptions. The result was a threefold increase in storage costs, plus compute and backup overhead for non-critical environments.

Switching to masked sample data or smaller, purpose-built datasets can reduce this overhead without compromising test coverage or QA workflows. But without planning, multi-environment setups become a silent cost amplifier.
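A masked sample can be produced with little code. This sketch assumes rows arrive as dictionaries with an `email` column; it keeps a reproducible random fraction of rows and replaces emails with salted-hash pseudonyms so values stay consistent across tables. It is illustrative only: salted hashing is pseudonymization rather than full anonymization, and sampling rows independently can break foreign-key relationships, so real pipelines usually sample along those relationships.

```python
import hashlib
import random


def mask_email(email: str, salt: str) -> str:
    """Replace an email with a stable pseudonym so joins on it still line up."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:12]
    return f"user-{digest}@example.test"


def sample_and_mask(rows: list[dict], fraction: float, salt: str, seed: int = 0) -> list[dict]:
    """Keep a reproducible random subset of rows and mask the `email` field."""
    rng = random.Random(seed)  # fixed seed: the same rows are chosen on every run
    sampled = [row for row in rows if rng.random() < fraction]
    return [{**row, "email": mask_email(row["email"], salt)} for row in sampled]
```

Keeping the salt out of the non-production environments prevents anyone there from reversing common addresses by hashing guesses.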

Let’s Calculate

Consider a mid-stage SaaS company expanding its global footprint.

Initial setup: the platform launches with PostgreSQL deployed in a single region, managing approximately 50 GB of data on an RDS t3.large instance, costing around $150 per month.

After 12 months of growth, the company’s setup includes:

- Regional sharding across the US, EU, and APAC.

- Three read replicas to improve availability and reduce latency.

- A migration to Aurora Global for cross-region access and latency optimization.

- A warm standby disaster recovery environment.

- Staging and test environments provisioned with full production-scale datasets.

- Integration with BI tools and ETL pipelines for reporting and analytics.

Together, these changes push the monthly bill to $7,000+.

Notably, over 60% of this total is not attributed to the core database engine. It comes from the surrounding infrastructure: disaster recovery, multi-environment operations, integration pipelines, and the engineering effort required to maintain them.
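The arithmetic behind this example is worth spelling out. The inputs below are the figures stated above; because both $7,000 and 60% are lower bounds, the outputs describe the bill at exactly $7,000.

```python
initial_monthly = 150    # single-region RDS t3.large at launch
current_monthly = 7_000  # monthly bill after 12 months (stated as $7,000+)
non_engine_share = 0.60  # stated as "over 60%" outside the core engine

growth_factor = current_monthly / initial_monthly
non_engine_cost = current_monthly * non_engine_share
engine_cost = current_monthly - non_engine_cost

print(f"Monthly cost grew about {growth_factor:.1f}x")
print(f"Surrounding infrastructure: more than ${non_engine_cost:,.0f} per month")
print(f"Core database engine: less than ${engine_cost:,.0f} per month")
```

Running it shows a growth factor of about 46.7x in a year, with more than $4,200 of a $7,000 bill going to infrastructure around the engine rather than to the engine itself.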


The Bottom Line

Managing infrastructure costs goes beyond compute and storage line items. It requires understanding how architectural choices shape scalability, performance, and long-term efficiency. Redundant environments, unoptimized DR, and poorly planned integrations can all drive database costs higher than expected.

Expert Soft has been helping teams uncover and address these inefficiencies through smarter architecture, eliminating waste while keeping your systems fast, resilient, and ready to grow. If you’re facing rising infrastructure costs or planning your next scaling phase, get in touch with us to see how we can help streamline your setup and future-proof your growth.

FAQ


How do you calculate database costs?

To provide an accurate database cost estimation, consider factors beyond direct infrastructure, including disaster recovery setups, observability tooling, BI query loads, and the engineering hours spent on tuning, migrations, and issue resolution. Only then will you get a complete, realistic cost picture.


What is cost estimation in DBMS?

Cost estimation in DBMS is the process of forecasting both technical and operational expenses, including infrastructure, licensing, maintenance, scaling efforts, disaster recovery, and ongoing engineering work, required to run a database system efficiently over time.

Alex Bolshakova, Chief Strategist for Ecommerce Solutions at Expert Soft, advises enterprises on cost-effective technology choices. Her strategic perspective helps businesses uncover hidden database expenses and align infrastructure investments with long-term ecommerce goals.
