Best Practices for Scaling an Ecommerce Database Without Performance Bottlenecks

As ecommerce systems grow, with more users, more SKUs, and more integrations, performance issues often appear in the database first. Many teams rush to scale infrastructure as system load increases, but have you considered what exactly is driving that load? Is it just more users and SKUs, or could there be deeper inefficiencies at play?

In our experience, it might be the data structure, not just hardware limits, that becomes the real bottleneck for growing platforms. And when scaling, these inefficiencies creep in further, significantly reducing the effectiveness of your efforts.

For scaling databases to be effective, a good rule of thumb is not to focus just on how much the data grows, but to start by analyzing your current structure: where data accumulates and how it moves.

That’s why this article covers what we usually examine before scaling our clients’ ecommerce databases, so that the chosen approach doesn’t introduce new performance bottlenecks.

Quick Tips for Busy People

For those in a rush, here are the core points to remember:

- High load isn’t the only thing that hurts performance: poor schemas and high data volume often work together to slow systems down.

- Scaling tactics are part of the solution, but not the first step: before adding replicas or sharding, optimize how data is modeled, stored, and accessed.

- Structural issues compound over time: missing composite indexes, default field localization, and years of unarchived records increase query latency and I/O load.

- Strategy must match workload: scale differently for reads, writes, latency, and integrations.

And now let’s get down to details.

Why Scaling Often Fails

Scaling problems don’t always start with traffic spikes or sudden growth. They can build up through how data is stored, how queries are written, or how much outdated information is left in the system.

Inefficient data models and queries

Sometimes, a cause of scaling issues might be the data model. Schemas packed with unnecessary localization, too many joins, and poorly organized fields can overwhelm the system with extra query load.

In one ecommerce platform selling medical equipment, product attributes were localized by default, even when most fields didn’t require it. This resulted in thousands of duplicate records, heavy join operations, and regular memory and I/O slowdowns during busy periods, with page load times exceeding 5 seconds.

Expert Soft’s team redesigned the data model by separating localized and static fields and caching frequently accessed data. As a result, query volume dropped 100-fold, load times fell below 90 milliseconds, and overall resource usage decreased.

- Takeaway: rethinking data granularity can yield more than just adding more computing power to a flawed schema.
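To make the idea of separating localized and static fields concrete, here is a minimal sketch using Python's built-in `sqlite3`. The table and column names (`product`, `product_l10n`, `weight_kg`) are hypothetical and are not the schema from the project described above; the point is only that static attributes are stored once per product, while translatable attributes get one row per locale.

```python
import sqlite3
from typing import Optional

# Hypothetical schema: static fields live once per product,
# only genuinely translatable fields get one row per locale.
SCHEMA = """
CREATE TABLE product (
    id        INTEGER PRIMARY KEY,
    sku       TEXT NOT NULL UNIQUE,
    weight_kg REAL NOT NULL            -- static: identical in every locale
);
CREATE TABLE product_l10n (
    product_id INTEGER NOT NULL REFERENCES product(id),
    locale     TEXT NOT NULL,
    name       TEXT NOT NULL,          -- localized: differs per locale
    PRIMARY KEY (product_id, locale)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO product VALUES (1, 'MED-001', 2.5)")
conn.executemany(
    "INSERT INTO product_l10n VALUES (?, ?, ?)",
    [(1, "en", "Infusion pump"), (1, "de", "Infusionspumpe")],
)
conn.commit()


def product_view(product_id: int, locale: str) -> Optional[tuple]:
    """Return (sku, weight_kg, name) with a single join on the localized table."""
    return conn.execute(
        """SELECT p.sku, p.weight_kg, l.name
           FROM product p
           JOIN product_l10n l ON l.product_id = p.id
           WHERE p.id = ? AND l.locale = ?""",
        (product_id, locale),
    ).fetchone()
```

With this layout, adding a locale adds rows only to `product_l10n`; the weight and SKU are never duplicated per language.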

Historical data accumulation

Databases face issues not only from the volume of data, but also from its age. As time goes on, historical records accumulate and burden systems that are meant for real-time use. Order history, return logs, and old transactions might be necessary for compliance or reporting, but they do not belong with active business logic.

When we worked for a large jewelry brand platform, years of order and return data were kept in the main operational database. This gradually slowed down internal tools. Search queries took longer, filters became slow, and customer service faced delays due to a poor user interface.

We put in place a tiered archival strategy. Orders older than a certain threshold were flagged using custom reports and moved to a secondary datastore designed for infrequent access. This gave us improved report generation, easier back office navigation, and a much leaner database.

- Takeaway: a proactive data lifecycle strategy often outperforms adding more hardware.
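The archival step can be sketched as a copy-then-delete inside one transaction. This is an illustration under stated assumptions, not the implementation from the project above: the `orders` and `orders_archive` tables are hypothetical, and both sit in the same SQLite database for brevity, whereas a real secondary datastore would usually be a separate system.

```python
import sqlite3


def archive_old_orders(conn: sqlite3.Connection, cutoff_date: str) -> int:
    """Move orders placed before cutoff_date into orders_archive.

    Returns the number of rows moved. Dates are ISO-8601 strings,
    so plain string comparison orders them correctly.
    """
    with conn:  # copy and delete commit together, or roll back together
        conn.execute(
            "INSERT INTO orders_archive SELECT * FROM orders WHERE placed_at < ?",
            (cutoff_date,),
        )
        cur = conn.execute("DELETE FROM orders WHERE placed_at < ?", (cutoff_date,))
        return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, placed_at TEXT NOT NULL, total REAL);
CREATE TABLE orders_archive (id INTEGER PRIMARY KEY, placed_at TEXT NOT NULL, total REAL);
INSERT INTO orders VALUES (1, '2019-03-01', 120.0), (2, '2024-06-15', 80.0);
""")
moved = archive_old_orders(conn, "2022-01-01")
```

Doing both statements in a single transaction means a failure halfway through cannot leave an order in both places or in neither.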

Unoptimized indexing

As systems develop, their query patterns change. Unfortunately, indexing strategies don’t always keep pace. When indexes no longer match how data is accessed, performance suffers. This is usually an oversight rather than a capacity problem, so adding resources won’t fix it.

During a cloud migration, one ecommerce platform realized that key indexing strategies had not been updated to fit the system’s new data access logic. Cron jobs began to stall during peak traffic times, and search queries managed by Solr slowed down and became less reliable. Data pipelines lagged, especially during campaign pushes.

A full-stack audit conducted by the DevOps and product teams pointed to the solution. We added missing indexes, moved cron job execution times to off-peak hours, and restructured search documents for more efficient querying.

- Takeaway: indexing must change along with your data access patterns because old indexes can hurt performance as your system grows and changes.
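One way to check whether an index still matches a query is to compare the query plan before and after adding it. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` only because it ships with Python; the table, the query, and the index name are hypothetical, and other databases expose the same idea through their own `EXPLAIN` output.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, placed_at TEXT)"
)

# A typical back-office lookup: filter on two columns, sort on a third.
QUERY = (
    "SELECT id FROM orders "
    "WHERE customer_id = ? AND status = ? ORDER BY placed_at DESC"
)


def plan(sql: str) -> str:
    """Return the planner's description of how it would run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42, "open")).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the detail text


before = plan(QUERY)

# Composite index: equality columns first, then the sort column.
conn.execute(
    "CREATE INDEX idx_orders_customer_status_date "
    "ON orders (customer_id, status, placed_at)"
)
after = plan(QUERY)

print("before:", before)
print("after: ", after)
```

Before the index exists the plan reports a full table scan; afterwards it names the composite index. Rerunning this kind of check whenever access patterns change is a cheap way to catch indexes that have drifted out of date.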

Poor data lifecycle management

Not all data is the same, and some of it shouldn’t stick around for long. Temporary records like abandoned carts or recently viewed items often go unmanaged, piling up until they negatively affect performance. It’s easy to overlook this data because it seems low-value, but it has a big impact when added together.

A global beauty brand dealt with increasing delays in its cart and personalization endpoints. The obvious suspect was insufficient resources, but the actual cause was a bloated database. Millions of old session records, including expired carts and outdated product views, had never been removed.

To solve this, the team set up automated cleanup routines. Empty carts were deleted after a certain time, and outdated personalization data was removed through scheduled jobs. The results were immediate: faster response times, less storage use, and a leaner, more reliable system.

- Takeaway: temporary data needs regular cleanup. If it doesn’t get cleaned up, it turns into technical debt.
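A scheduled cleanup routine might look like the sketch below. It assumes a hypothetical `cart` table with an `updated_at` Unix timestamp, and it deletes in small batches so that each transaction stays short; the TTL and batch size are placeholder values, not figures from the project above.

```python
import sqlite3


def purge_expired_carts(
    conn: sqlite3.Connection, now_ts: int, ttl_seconds: int, batch_size: int = 1000
) -> int:
    """Delete carts not updated within ttl_seconds, batch_size rows at a time.

    Returns the total number of rows deleted.
    """
    cutoff = now_ts - ttl_seconds
    total = 0
    while True:
        with conn:  # one short transaction per batch
            cur = conn.execute(
                "DELETE FROM cart WHERE id IN ("
                "SELECT id FROM cart WHERE updated_at < ? LIMIT ?)",
                (cutoff, batch_size),
            )
        if cur.rowcount == 0:
            return total
        total += cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cart (id INTEGER PRIMARY KEY, updated_at INTEGER NOT NULL)")
now, day = 1_700_000_000, 86_400
conn.executemany(
    "INSERT INTO cart (updated_at) VALUES (?)",
    [(now - 40 * day,)] * 5 + [(now - day,)] * 2,  # 5 stale carts, 2 recent ones
)
conn.commit()
deleted = purge_expired_carts(conn, now_ts=now, ttl_seconds=30 * day, batch_size=2)
```

Batching matters more on a production database than in this toy example: one huge `DELETE` can hold locks and bloat the transaction log, while many small ones let regular traffic interleave.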

As you can see, the real challenges in scaling often don’t lie in the strategy itself, but in how data is organized and stored in the current system. That said, the choice of scaling strategy still plays a crucial role.

Common Scaling Strategies and Their Pitfalls

When response times increase and dashboards lag, teams often turn to database scaling strategies. They might upgrade instances, add replicas, or shard the database. These methods can be helpful, but each has its complexities, costs, and limitations.

Here’s a breakdown of six common database scalability techniques and when each strategy makes sense based on your architecture and growth patterns.

| Strategy | Description | Strengths | Pitfalls |
| --- | --- | --- | --- |
| Vertical Scaling | Adding more CPU and RAM to a single database instance to improve performance. | Easy to implement without changing application code or architecture. | Hits limits quickly; doesn’t address underlying schema or query inefficiencies; high cost per gain. |
| Read Replicas | Creating read-only copies of the primary database to distribute read load. | Reduces load on primary DB; ideal for dashboards, search, and reporting. | Replication lag can cause stale reads; unsuitable for real-time user-facing features. |
| Sharding | Distributing data across multiple databases, each responsible for a segment (e.g., region or type). | Scales horizontally for large datasets and write-heavy workloads; supports parallel processing. | Increases query complexity; high development & ops overhead. |
| Polyglot Persistence | Using multiple databases (e.g., SQL, NoSQL, cache) optimized for different use cases. | Enables best-fit storage per data type and access pattern. | High coordination overhead; without schema governance, risks inconsistencies and system fragmentation. |
| Caching Layers | Storing frequently accessed data in memory (e.g., Redis) to reduce database load. | Greatly improves response times for read-heavy endpoints; reduces DB queries. | Requires precise invalidation; easy to make mistakes: stale data can break pricing, UX, or cart logic. |
| CDC & Event-Driven Sync | Streaming database changes in near real-time to downstream systems (e.g., via Kafka). | Keeps search, analytics, and personalization layers synced without batch jobs. | Fragile without observability; dropped events or malformed records can silently corrupt data pipelines. |
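The stale-read pitfall of read replicas is often softened by pinning a user's reads to the primary for a short window after that user writes. The sketch below illustrates the routing decision only; the class name, the `pin_seconds` value, and the string targets are all hypothetical, and a real router would hand out actual connections.

```python
import time
from typing import Callable, Dict


class ReadWriteRouter:
    """Route reads to a replica unless the user wrote very recently."""

    def __init__(self, pin_seconds: float = 2.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.pin_seconds = pin_seconds   # assumed upper bound on replication lag
        self._clock = clock              # injectable so the logic can be tested
        self._last_write: Dict[str, float] = {}

    def record_write(self, user_id: str) -> None:
        """Call after every write made on behalf of user_id."""
        self._last_write[user_id] = self._clock()

    def target_for_read(self, user_id: str) -> str:
        """Return 'primary' inside the pin window, otherwise 'replica'."""
        last = self._last_write.get(user_id)
        if last is not None and self._clock() - last < self.pin_seconds:
            return "primary"
        return "replica"


router = ReadWriteRouter(pin_seconds=2.0)
router.record_write("user-1")
print(router.target_for_read("user-1"))  # inside the window: primary
print(router.target_for_read("user-2"))  # never wrote: replica
```

This keeps dashboards and search on replicas while a shopper who has just updated a cart still sees their own change.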

How to Choose a Scaling Strategy

The right scaling strategy depends on how and why your data grows.

| Consideration | Implication |
| --- | --- |
| Read-heavy workload | Use caching (e.g., Redis) and database read replicas to offload the primary DB. |
| Write-intensive workload | Consider sharding or event-streaming architectures to distribute write load. |
| High latency sensitivity | Focus on query optimization and local caching to reduce end-to-end delay. |
| Complex product structures | Choose a database model that supports rich relationships (e.g., SQL, graph DB). |
| Heavy batch jobs | Design for parallel execution and schedule jobs outside of traffic peaks. |
| Many external integrations | Use CDC or event-driven pipelines to propagate changes without full re-syncs. |

These are common recommendations, but the final choice still depends on your system specifics, and sometimes those specifics point to a completely different approach.

Let’s see an example

A global medical tech provider handled a large and complicated product catalog. At first, sharding seemed like the obvious choice to reduce load and improve performance. However, when the team looked at the traffic, they discovered that, although the data structure was complex, the transactional volume did not warrant the operational complexity of sharding.

Instead, they chose a more focused solution. The team restructured the data model to separate localized and global fields, which reduced unnecessary joins and data duplication. They also set up caching at the application layer to eliminate repeated lookups for frequently accessed configurations. Most importantly, they maintained centralized data governance, which helped prevent operational risks and consistency problems often caused by sharded architectures.

As a result, they got better performance, faster time to value, and no need for major infrastructure changes.

The lesson is clear: complexity should be justified by real operational needs. Don’t chase complex solutions if you can use a more targeted approach with much less overhead.
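Application-layer caching of frequently accessed configurations typically follows the cache-aside pattern: read from the cache, fall back to the database on a miss, and invalidate on every write. The sketch below is a simplified illustration in which an in-memory dict stands in for a store such as Redis; the `CacheAside` class and its parameters are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class CacheAside:
    """Cache-aside with a TTL and explicit invalidation."""

    def __init__(self, loader: Callable[[Hashable], Any], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader            # fetches the value from the database
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[Hashable, Tuple[Any, float]] = {}  # key -> (value, expiry)

    def get(self, key: Hashable) -> Any:
        entry = self._store.get(key)
        now = self._clock()
        if entry is not None and entry[1] > now:
            return entry[0]              # fresh hit: no database call
        value = self._loader(key)        # miss or expired: go to the source
        self._store[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Call right after the underlying record is written."""
        self._store.pop(key, None)


db = {"shipping-rules": {"free_over": 50}}   # stand-in for the real database
cache = CacheAside(loader=lambda k: dict(db[k]), ttl_seconds=300)

first = cache.get("shipping-rules")          # loads from db
db["shipping-rules"]["free_over"] = 75       # a write to the source...
cache.invalidate("shipping-rules")           # ...must be paired with invalidation
second = cache.get("shipping-rules")         # reloads the updated value
```

The TTL acts as a safety net for missed invalidations; it limits how long stale pricing or configuration data can survive, but it is no substitute for invalidating on write.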

Best Practices for Scaling Ecommerce Databases

The medical tech example above is a good reminder: scaling means doing things smarter. So before jumping into complex solutions like sharding or massive infrastructure upgrades, it’s worth looking at what’s actually causing the strain. Here are some of the practical approaches we’ve seen work best in high-load ecommerce projects without adding unnecessary overhead.

Our whitepaper breaks down real-world tactics that work at scale. Download now.

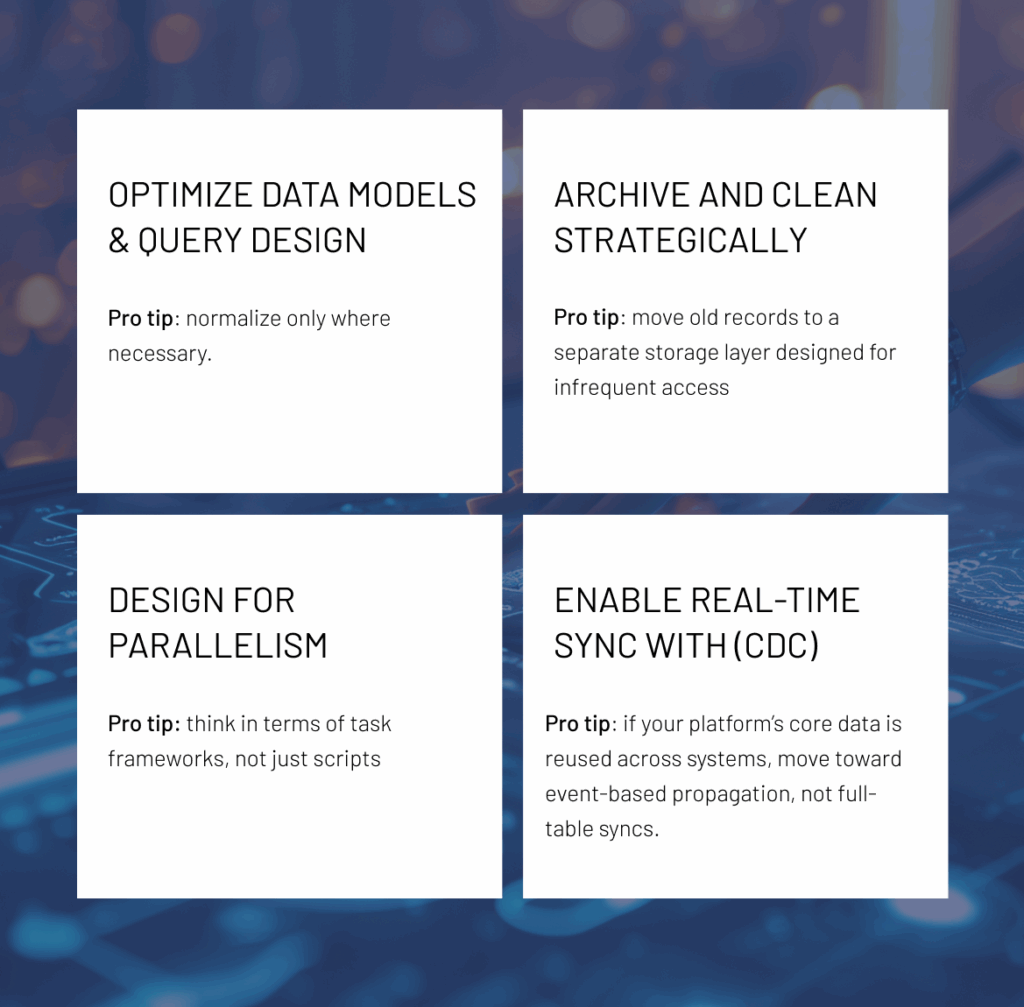

Optimize data models & query design

The way your data is structured limits your performance. Infrastructure upgrades can only help so much if the schema works against you. We’ve seen many situations where overly localized fields, too many joins, or complicated relational models start to lower performance well before traffic becomes a real issue.

However, there’s also the other side: overengineering. It happens when you try too hard to cover every edge case. Normalize where it truly adds value, and avoid adding flexibility that no one needs and that has no real impact.

Archive and clean strategically

Over time, data builds up, including old orders, returns, and expired carts. This accumulation can slow down systems, extend report generation times, and make even simple admin tasks frustrating.

To prevent this, establish a clear data retention strategy. Determine what information needs to remain active and what can be archived or deleted. Move old records to a separate storage layer designed for infrequent access. Regularly remove temporary data that no longer serves a purpose.

Design for parallelism and job resilience

As your product catalog and regional presence expand, background tasks like price recalculations, batch updates, and cron jobs can quietly become major performance issues if they are not designed to grow from the beginning.

One effective approach is to structure jobs for parallel execution across products, regions, or data segments. For example, in one project, a change in pricing rules required per-product calculations, causing a cron job to grow from 10 minutes to over 3 hours. By refactoring it into a multi-threaded model and distributing the workload across CPU cores and regions, we reduced execution time to under 15 minutes.

To make this scalable across other tasks, we introduced an abstract handler class to manage threads, retries, and error logging. This way, it was possible to apply this pattern to category rebuilds and other heavy operations.
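An abstract handler of this kind could look roughly like the following sketch. It is not the class from the project above: the names `ParallelJob`, `process`, and `PriceRecalculation` are hypothetical, and it uses Python threads, which suit I/O-bound work such as database calls. CPU-bound calculations in Python would need a process pool instead.

```python
import logging
from abc import ABC, abstractmethod
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Iterable, List

log = logging.getLogger("batch")


class ParallelJob(ABC):
    """Runs process() for every item on a thread pool, with retries and logging."""

    def __init__(self, max_workers: int = 4, max_retries: int = 2) -> None:
        self.max_workers = max_workers
        self.max_retries = max_retries

    @abstractmethod
    def process(self, item: Any) -> None:
        """Handle one unit of work; raise an exception to signal failure."""

    def _run_with_retries(self, item: Any) -> bool:
        # One initial attempt plus max_retries further attempts.
        for attempt in range(1, self.max_retries + 2):
            try:
                self.process(item)
                return True
            except Exception as exc:
                log.warning("item %r failed on attempt %d: %r", item, attempt, exc)
        return False

    def run(self, items: Iterable[Any]) -> List[Any]:
        """Process all items in parallel and return those that never succeeded."""
        items = list(items)
        with ThreadPoolExecutor(max_workers=self.max_workers) as pool:
            results = list(pool.map(self._run_with_retries, items))
        return [item for item, ok in zip(items, results) if not ok]


class PriceRecalculation(ParallelJob):
    """Example subclass: applies a flat 10% markup to each product."""

    def __init__(self, prices: dict) -> None:
        super().__init__(max_workers=4, max_retries=1)
        self.prices = prices

    def process(self, item: str) -> None:
        # Each product id is handled by exactly one task, so keys never collide.
        self.prices[item] = round(self.prices[item] * 1.10, 2)


prices = {"sku-1": 10.0, "sku-2": 20.0}
failed = PriceRecalculation(prices).run(prices.keys())
```

Because retries and error logging live in the base class, a category rebuild or any other heavy operation only needs to implement `process`.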

Enable real-time sync with change data capture (CDC)

Batch syncs might work early on, but they don’t scale well when you’re dealing with millions of updates across search, analytics, CMS, and personalization layers. We saw this firsthand on a project where a MySQL-based system managed over 60 million records with millions of changes happening daily. The daily sync process started falling behind, leading to missed updates and sync issues under the load.

To fix it, we implemented Change Data Capture using AWS Database Migration Service. Changes were streamed directly from MySQL into Kafka, then processed by a Node.js service that incrementally updated MongoDB and refreshed the Redis cache in parallel. This setup reduced sync delays to under 60 seconds and cut CPU and I/O load, restoring both consistency and performance.
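The consumer side of such a pipeline mostly comes down to applying change events incrementally and idempotently. The sketch below illustrates that logic in Python rather than Node.js, with plain dicts standing in for MongoDB and Redis. The `ChangeEvent` shape is hypothetical and does not reproduce the AWS DMS or Kafka message format.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ChangeEvent:
    op: str                      # "insert", "update" or "delete"
    key: str                     # primary key of the changed row
    version: int                 # assumed to increase with every change to a key
    row: Optional[dict] = None   # full row image; None for deletes


class Projection:
    """Applies change events to a document store and keeps a cache in step."""

    def __init__(self) -> None:
        self.documents: Dict[str, dict] = {}   # stands in for a MongoDB collection
        self.cache: Dict[str, dict] = {}       # stands in for Redis
        self._versions: Dict[str, int] = {}

    def apply(self, event: ChangeEvent) -> bool:
        """Apply one event; return False if it was a duplicate or arrived late."""
        if event.op not in ("insert", "update", "delete"):
            raise ValueError(f"unknown operation: {event.op!r}")
        if event.version <= self._versions.get(event.key, -1):
            return False                       # already applied: safe to skip
        self._versions[event.key] = event.version
        if event.op == "delete":
            self.documents.pop(event.key, None)
            self.cache.pop(event.key, None)
        else:
            doc = dict(event.row or {})
            self.documents[event.key] = doc
            self.cache[event.key] = doc        # refresh the cached copy
        return True


projection = Projection()
projection.apply(ChangeEvent("insert", "p1", 1, {"name": "Ring", "price": 100}))
projection.apply(ChangeEvent("update", "p1", 2, {"name": "Ring", "price": 90}))
replayed = projection.apply(ChangeEvent("update", "p1", 2, {"name": "Ring", "price": 90}))
```

Tracking a version per key lets the consumer tolerate redelivered events, which message brokers commonly produce after restarts, without corrupting downstream data.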

If your platform’s core data is reused across systems, a smart move is to shift toward event-based propagation, not full-table syncs.

Final Thoughts

Sustainable DB scaling in ecommerce doesn’t come from sheer infrastructure power. It comes from careful design. This includes query-aware data modeling, proactive archiving, multithreaded job execution, and real-time syncing through CDC.

Each choice plays a role in ensuring long-term system performance and strength. Many problems related to scale are signs of structural inefficiency. Fix those first, and your infrastructure will scale better, while saving money and reducing complexity.

If you’re dealing with similar architectural challenges or preparing for growth, we’re ready for a chat.

FAQ

- How do you make a DB scalable?

Start by designing efficient, simple data models and archiving data you no longer need during real-time operations. Use caching to reduce read load, run background jobs in parallel, and set up Change Data Capture (CDC) for quick, lightweight data synchronization between services.

- What is the difference between SQL and NoSQL database scaling?

SQL databases usually depend on vertical scaling or complicated sharding for performance as they grow. NoSQL databases are designed for horizontal scaling, which makes it easier to spread data across nodes. They have more flexible schemas that work well for unstructured or quickly changing data.

- What is the main purpose of scaling?

Scaling helps your system handle more load, whether from additional users, larger datasets, or more complexity, without slowing down. It ensures availability and stability as the platform grows and demands on the infrastructure increase.

With insights gained from working closely with enterprise clients and technical leaders, Alex Bolshakova, Chief Strategist for Ecommerce Solutions at Expert Soft, shares best practices for scaling ecommerce databases without running into performance bottlenecks.
