9 Common Data Migration Challenges and How to Avoid Them

Data migration is rarely “just technical.” It’s also a strategic initiative that touches customer trust, business continuity, and compliance obligations. A single misstep can mean customers lose access, transactions fail, or compliance gaps appear, disrupting essential operations.

Data migration risks often stem from mismatches that only become visible under real conditions: column type conversions that cut off precision, time zone offsets that shift financial postings, regenerated IDs that break referential integrity. These data migration issues bypass test runs and appear later as corrupted reports, failed transactions, or compliance gaps.

At Expert Soft, our perspective comes from handling migrations across high-load ecommerce, enterprise systems, and cloud infrastructures. We know that success depends as much on avoiding mistakes as it does on following best practices. This article shares nine challenges you can encounter and how to sidestep them.

Quick Tips for Busy People

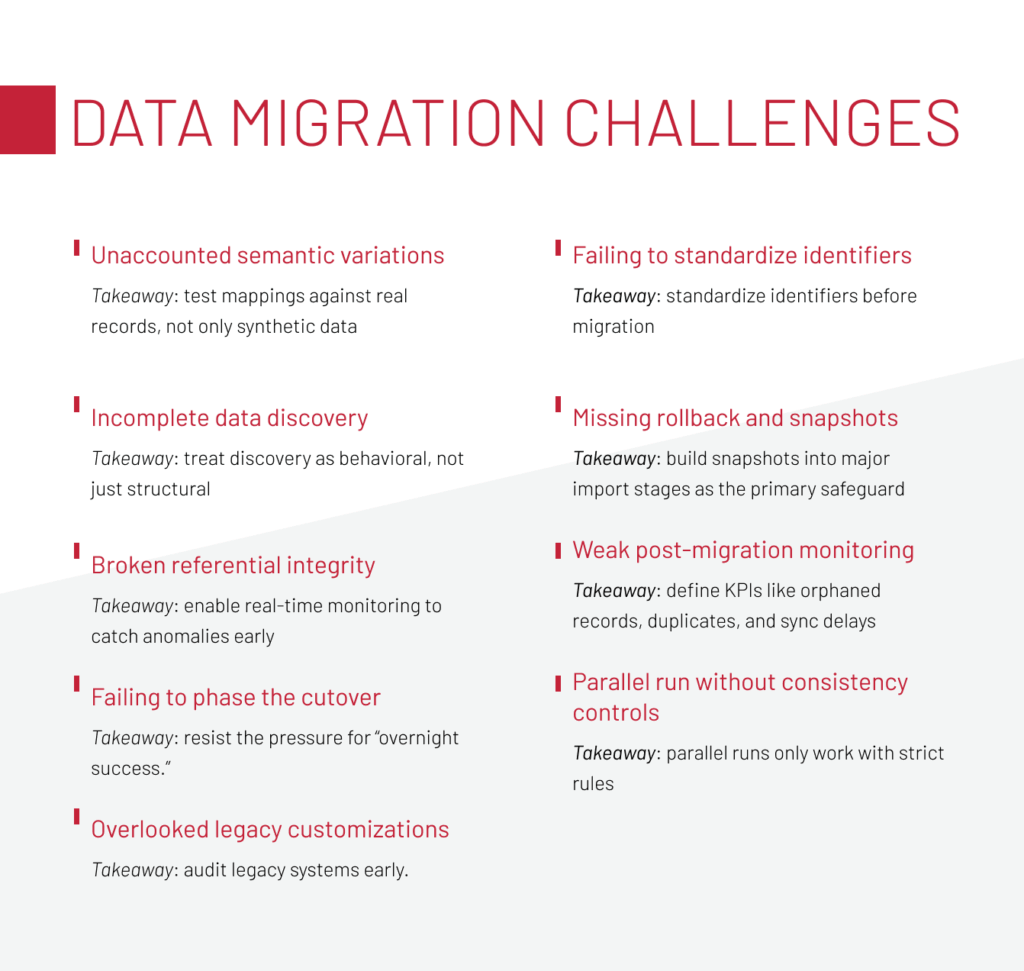

In case you only have time for the headlines, here are nine data migration challenges that consistently derail migration projects and what they lead to:

- Assume identical fields mean identical data: it leads to misapplied taxes and loyalty errors.

- Rely only on surface-level schema checks: hidden rules break CMS logic and campaigns.

- Overlook relationships when moving large datasets: this creates orphaned records and corrupted reports.

- Run parallel systems without consistency controls: customers see mismatched balances.

- Cut over in one big bang: one small error cascades into a system-wide outage.

- Leave legacy customizations undocumented: critical workflows collapse under new vendor logic.

- Fail to standardize identifiers: duplicates and wrong segmentation in marketing and reporting.

- Skip rollback mechanisms: errors become permanent once the legacy is decommissioned.

- Stop monitoring after go-live: issues appear only under real traffic and damage trust.

Each of these challenges plays out differently in practice. Below, we break them down with context, cases, and ways to address them.

Core Risks of Data Migration

Say, a company prepares for a go-live weekend. Scripts pass, dashboards are green, sign-off follows. On Monday, customers can’t log in, orders are mispriced, and consent records are missing.

This is how systemic risks in data migration usually reveal themselves: in production, under real load and scrutiny. They’re gaps in assumptions, validation, or governance. Here are the ones that matter most:

-

Data corruption

A single transformation error can ripple across thousands of records. According to Gartner, poor data quality drains an average of $12.9 million annually from organizations. During migration, the chance of introducing this type of corruption increases sharply.

-

Loss of business logic

Many systems encode rules directly in data values. “0” may mean “inactive” in one application, but something entirely different in another. These rules need to be documented and tracked during migration. Otherwise, workflows in the target system will fail as soon as it goes live.

-

Operational disruption

Downtime during migration is expensive. Even a short cutover gap can freeze revenue streams and interrupt critical processes. Uptime Institute’s 2023 Outage Analysis shows that 70% of IT service outages now cost more than $100,000, and more than 25% exceed $1 million. In migration scenarios, incomplete or delayed cutovers are among the most common triggers for these high-impact outages.

-

Regulatory exposure

It’s in migration projects that hidden compliance issues usually appear. If sensitive data moves outside the rules of GDPR, HIPAA, or PCI DSS, penalties follow. For instance, in 2022, GDPR fines alone reached more than €1.64 billion.

-

Loss of trust

When customers see incorrect balances, canceled orders, or wrong invoices, their confidence in the business takes a hit that can’t be repaired by a quick fix. The 2022 State of CRM Data Management found that 44% of organizations lose more than 10% of annual revenue because of poor data quality. These numbers show how closely trust and revenue are tied.

-

Loss of data

Once a legacy system is switched off, there’s no way back. Without snapshots or rollback mechanisms, corrupted data becomes the new reality. At that point, recovery efforts can only patch symptoms, not restore clean foundations.

The systemic risks set the stage for what can go wrong. How those risks emerge in practice depends on the type of migration. The next section looks at these type-specific challenges.

Facing a high-load migration? Talk to our team to discuss your particular case.

Start conversationType-specific Migration complexities

Not all migrations are created equal. Moving from on-premise to cloud has a different flavor of problems than switching cloud providers, and both look nothing like a platform upgrade. The risks overlap, but each path comes with its own hidden traps.

On-premise to cloud

The promise of the cloud is scalability and flexibility, but the transition often exposes assumptions baked into legacy systems:

- LAN-tuned jobs slow down over the internet,

- local security rules don’t fit cloud standards, and

- legacy middleware has no cloud equivalent.

A luxury jewelry brand discovered this the hard way when order-processing jobs that ran smoothly on their internal servers slowed to a crawl after migration. The culprit wasn’t the cloud itself but missing database indexes and inefficient queries that had never been an issue at LAN speed. Only after careful tuning and re-indexing did performance stabilize.

What makes these problems worse is a persistent misconception: workloads will simply run faster in the cloud. In reality, cloud infrastructure doesn’t smooth out inefficiencies, but changes the rules entirely. Latency, indexing, and workload distribution alter how batch jobs behave, sometimes in unpredictable ways.

A global retailer learned this when nightly cron jobs that had always run reliably on-premise became bottlenecks in a distributed environment, with some failing outright. Recovery meant re-indexing databases, optimizing queries, and redesigning job schedules to align with cloud realities.

The lesson is clear: cloud migrations require more than lift-and-shift thinking. Re-engineer jobs for distributed environments, test workloads under real conditions, and plan for latency to reshape performance.

Cloud to cloud

At first glance, a move between cloud providers looks easier. After all, the data is already online. But the devil is in the dependencies. Services often rely on vendor-specific features — a queueing system here, a compliance configuration there — that don’t exist in the new environment.

Then there’s cost. While core cloud infrastructure might look cheaper, fees for APIs, data transfers, or cross-region traffic can quietly climb. A finance team expecting savings can end up asking hard questions when monthly bills come in higher than before.

The safest approach is to assume that “cloud parity” is never perfect. Every provider has quirks, and planning for those differences up front avoids unpleasant surprises later.

Database and platform upgrades

Platform upgrades are often underestimated because they feel routine: a version bump, a new release, maybe some updated documentation. In practice, they’re where years of undocumented customizations come back to life. Upgrades tend to expose old shortcuts. Undocumented overrides stop working with new vendor logic, deprecated functions interrupt daily workflows, and differences between test and production environments let problems slip through.

During an SAP Commerce upgrade, a leading European beauty retailer ran into issues when two misaligned test environments caused failures in Bloomreach search and ASM. Resolving them required custom adaptations to restore stable functionality.

The key takeaway is that upgrades are less about “new features” and more about managing legacy baggage. Without a full audit of existing customizations, surprises are inevitable.

We’ve looked at risks tied to specific migration types. Now it’s time to step back and cover the broader challenges that appear across almost every project: the common traps, as well as a few less obvious ones that can be just as damaging.

9 Common and Not-So-Common Migration Challenges

The following nine cases come directly from real migration projects we’ve worked on or analyzed. Each shows where teams most often run into trouble and the concrete steps that helped resolve it.

1. Unaccounted semantic variations

At a global jewelry retailer, something as small as a country code caused disruption. One system stored Turkey as “TR,” another as “TUR.” The migration team assumed those fields were aligned, so no special mapping was built. Once customers began placing orders, loyalty balances were calculated incorrectly, taxes were applied to the wrong groups, and the support desk was flooded with complaints. QA testing never flagged the issue because synthetic data didn’t cover real-world values.

Semantic conflicts like this are among the most deceptive pitfalls in migration. Fields look identical at the schema level, but their meanings diverge across applications. Without explicitly documenting these meanings, mismatches slip through until production.

-

Takeaway:

Build semantic mapping matrices that describe not just names but the meaning, range, and business role of each field. Always involve domain experts who understand the business rules behind the numbers. DBAs alone can’t guarantee semantic accuracy.

2. Incomplete data discovery

Migration discovery often stops at the surface when teams record field names and formats, but overlook the business logic hidden in the data. That logic can be critical: CMS restrictions, fallback values, deprecated fields still in use, or priority rules that shape how data behaves. For example, in one system, an empty “Region” field can be interpreted as “Default Country,” while the target platform reads it as Null.

A European beauty retailer ran into issues when undocumented CMS restrictions were missed during discovery. After migration to Spartacus, components behaved inconsistently: content blocks failed to render the same way across storefronts, promotions disappeared, and banners duplicated. The problem traced back to legacy Hybris CMS restrictions that hadn’t been documented. Resolution required removing the hidden CMS restrictions, updating component specifications, and improving dependency documentation to align old and new environments.

-

Takeaway:

Treat discovery as behavioral, not just structural. Run pilot extractions with real data, trace how fields interact with business logic, and document dependencies. Without this depth, migrations only carry over the surface while leaving the hidden rules behind.

3. Broken referential integrity

Consider this scenario: a multinational healthcare provider migrated its product catalog across multiple regions. For years, local teams had reused the same product IDs for different items. In the legacy setup, this overlap never caused much trouble. But once the data was centralized, the same IDs collided. Orders were linked to the wrong products, customer records referenced IDs that no longer existed, and financial reports became unreliable overnight.

Referential integrity is fragile in bulk loads. When entity relationships are ignored, cascading failures spread quickly: orders without customers, customers without accounts, promotions attached to the wrong products. Cleaning up afterwards means combing through thousands of corrupted records.

-

Takeaway:

In the case above, the issue was solved by introducing centralized ID generation to enforce uniqueness, applying strict load order (categories → products → promotions → orders), and adding rollback checkpoints. Teams can also strengthen protection by running automated integrity validation before each batch and enabling real-time monitoring to catch anomalies early.

4. Parallel run without consistency controls

When migrations aim to reduce cutover risk, parallel runs are common: old and new systems operate side by side until stability is confirmed. It sounds safe, but if both are live and writing data, drift creeps in.

During the coexistence of JSP and Spartacus storefronts, a regional retailer discovered the issue through loyalty points. Customers logged into the legacy storefront saw one balance, while on the new storefront, another. Updates made in one system didn’t sync consistently to the other. The longer both systems ran, the wider the drift grew.

-

Takeaway:

Parallel runs only work with strict rules. Define one master source of truth, synchronize changes in real time, and log reconciliations. In the case above, the loyalty point mismatches were resolved by implementing a centralized sync service with change logging and conflict resolution. If you can’t maintain that discipline, shorten the overlap period.

5. Failing to phase the cutover

Switching everything in a single cutover feels efficient: one night of disruption, and you’re done. But in a big-bang cutover, small errors scale instantly, leaving the entire system unstable.

-

Takeaway:

Resist the pressure for “overnight success.” Instead, opt for a phased approach. In our project, we’ve seen better outcomes when exporting data from both legacy and new systems, merging and validating before processing. This approach prevented order duplication and ensured continuity before fully switching off the old system.

6. Overlooked legacy customizations

A manufacturer of medical equipment ran straight into this issue during a platform upgrade. Undocumented overrides, written years earlier as “temporary” fixes, clashed with the vendor’s updated logic. Order routing collapsed, critical workflows stalled, and the rollout had to be paused. Stabilizing the system meant building a complete catalog of legacy customizations and refactoring them, a process that delayed the project by weeks.

These failures happen because businesses accumulate hidden baggage. Over time, quick fixes, emergency patches, and silent overrides pile up. Without an audit, they surface at the worst possible time.

-

Takeaway:

Audit legacy systems early. Static code analysis can flag some risks, but manual review is just as important. Customizations need to be identified and managed deliberately. In the case above, the solution involved building a complete catalog of overrides, refactoring critical modules to align with vendor standards, and introducing alerts to flag outdated files during future upgrades.

7. Failing to standardize identifiers

Identifiers, like customer IDs, product codes, and country codes, look simple but carry business-critical meaning. The real mistake is assuming they’re consistent across systems. In reality, the same code can refer to different entities, or different codes can represent the same one. When that happens, tax gets applied incorrectly, duplicate records appear, and reporting becomes corrupted.

-

Takeaway:

Standardize identifiers before migration. A master reference dictionary with translation layers prevents misclassifications and ensures reports and processes remain accurate.

8. Missing rollback and snapshots

A healthcare provider avoided corrupted imports by snapshotting every migration batch into Redis queues. When an import failed, they rolled back instantly. Without that safeguard, corrupted data would have gone live and become permanent.

Sometimes teams skip rollback to save time, treating it as a safeguard they can afford to sacrifice under tight deadlines. But once a legacy system is decommissioned, there is no second chance. Errors in the new system become irreversible, and remediation costs multiply.

-

Takeaway:

Build snapshots and rollbacks into every major import stage as the primary safeguard against irreversible corruption.

9. Weak post-migration monitoring

Successful migration logs don’t guarantee clean data. Issues like schema drift, duplicate orders, or mismatched balances often appear only under real user traffic. That was the case for a financial services firm. On day one of their migration, system logs showed success. Within hours of live traffic, dashboards flagged schema drift and anomalies in transaction payloads. Left undetected, those errors would have hit customer accounts. Instead, real-time monitoring caught them early.

This case showed that migration isn’t over when data loads. Issues emerge only when users interact with the system at scale. Monitoring is not a final task but an ongoing discipline.

-

Takeaway:

Define KPIs like orphaned records, duplicates, and sync delays. Use automated anomaly detection backed by business team validation. Continuous monitoring ensures data quality remains intact after go-live.

The nine cases highlight how migration failures come from very different angles: from identifiers and rollback gaps to overlooked customizations. Taken together, the picture can feel overwhelming. To make it actionable, we’ve condensed these lessons into a simple set of do’s and don’ts that any migration team can use as a quick reference.

Do’s and Don’ts for Successful Migration

Here’s the framework of practices that keep migrations on track, reduce data migration risk, and the shortcuts that almost always backfire.

Final Words

Data migration is a chain of decisions that affect integrity, performance, compliance, and ultimately customer trust. Projects fail when hidden assumptions go untested, when governance is missing, or when monitoring stops too early.

The challenges we outlined come from real projects where delays, outages, or broken logic became business risks. The organizations that succeed are those that plan for these risks in advance, enforce clear rules, and treat migration as a program of governance rather than a single technical task.

If you are planning or overseeing a migration and want to discuss how to avoid these pitfalls in practice, our team is open to sharing experience from complex projects across industries. Let’s have a chat!

Through her work shaping data-driven strategies for large enterprises, Alex Bolshakova, Chief Strategist for Ecommerce Solutions at Expert Soft, highlights common data migration challenges and how to navigate them without disrupting business operations.

New articles

See more

See more

See more

See more

See more