6 Common Caching Mistakes That Can Slow Down Your Ecommerce Site

Caching is meant to speed things up, but when misconfigured, it does the opposite. We at Expert Soft have seen ecommerce platforms serve outdated prices, miss promotion deadlines, or slow to a crawl under peak traffic — all because of subtle caching issues hidden beneath the surface.

These mistakes aren’t rare. In fact, they’re surprisingly common, especially in large-scale systems with complex architecture and multiple cache layers. Based on what we’ve encountered across high-traffic projects, this article breaks down six of the most frequent caching problems, the damage they cause, and the fixes that help you make your platform faster and more efficient.

Quick Tips for Busy People

Here’s a brief overview of the main challenges:

- Data inconsistency between cache layers. The more layers you have, the higher the risk of data mismatches that break consistency across your entire platform.

- Incorrect caching invalidation. Without proper invalidation, outdated discounts, expired promotions, or inaccurate prices can remain visible long after they should disappear.

- Relying only on traditional caching approaches. Some pages combine elements that need different caching strategies. Caching everything the same way results in either outdated information or slow pages.

- Inefficient cache implementation. Caching rarely used or low-impact data while skipping high-demand content overloads your system and fails to boost speed where it counts.

- Use of outdated caching mechanisms. As your platform grows and changes, legacy caching configurations can drag down performance instead of keeping things fast and responsive.

- Lack of cache monitoring. Without tracking cache performance, issues like low hit rates or database overload go unnoticed until they affect users.

The following is a detailed breakdown of caching problems and solutions.

Common Caching Mistakes

Adding caching to an ecommerce platform isn’t rocket science. The real challenge starts when it needs to be invalidated correctly and tuned to actually boost performance, not quietly sabotage it. The mistakes below are exactly about that.

1. Data inconsistency between cache layers

When a system uses several layers of caching, which is common for high-load enterprise ecommerce platforms, trouble starts as soon as data in one layer clashes with data in another.

Suddenly, a customer might add an item to the cart at one price, only to see a completely different price during checkout. It’s a quick way to lose trust. So, when Expert Soft’s client — a global beauty retailer — faced the same issue, we had to resolve it as quickly as possible.

We traced the data flow across all caching layers in a controlled test environment to identify where updates were being lost, then adjusted the caching rules so that product information was displayed accurately.

And the more cache layers you stack, the worse it gets. Think of the browser, CDN, and front-end caches, the back-end cache in SAP Commerce Cloud, and external caches such as Redis. Without a well-orchestrated strategy, it can be virtually impossible to maintain real-time accuracy.

That’s why you need strict caching policies, with clearly defined TTLs and invalidation rules. You also need to make smart decisions about what stays cached longer and what should refresh more frequently.

One solid practice we recommend is using API versioning with hashed cache keys to avoid accidental conflicts. A straightforward method is to add a version tag to the cache key, like

cache_key = f"product_price:{product_id}:v2"

and to pair it with coordinated invalidation policies that keep all layers updated.
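As a minimal sketch of the versioned, hashed key idea, the helper below builds keys from a namespace, a version tag, and a hash of the request parameters. The function name make_cache_key, the CACHE_SCHEMA_VERSION constant, and the 16-character digest length are illustrative assumptions, not part of any specific platform.

```python
import hashlib

# Hypothetical constant: bump it whenever the cached payload format changes,
# so old and new entries can never be confused with each other.
CACHE_SCHEMA_VERSION = "v2"


def make_cache_key(namespace: str, version: str = CACHE_SCHEMA_VERSION, **params) -> str:
    """Build a key shaped like 'product_price:v2:<hash of parameters>'."""
    # Sort parameter names so the same inputs always produce the same key,
    # regardless of the order in which they were passed.
    canonical = "&".join(f"{name}={params[name]}" for name in sorted(params))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]
    return f"{namespace}:{version}:{digest}"


key_v2 = make_cache_key("product_price", product_id=42, currency="EUR")
key_v3 = make_cache_key("product_price", version="v3", product_id=42, currency="EUR")
print(key_v2)
print(key_v3)
```

Because the version is part of the key, rolling out a new payload format only requires bumping the tag: entries written under the old version are simply never read again and expire on their own TTL.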

2. Incorrect caching invalidation

Let’s start with an example from practice. The beauty retailer we mentioned earlier was gearing up for a major holiday sale. Discounts were set, banners updated, and the countdown had begun. But when the sale went live, nothing changed for customers: they still saw outdated information.

This is a common issue in ecommerce: when the cache isn’t refreshed on time and there’s no proper update validation in place, outdated data keeps showing up even though the actual content has already changed.

In the client’s case, we fixed this by performing a detailed investigation and adding a custom solution in the form of a “no-cache” flag to the CMS component responsible for promotions, ensuring they were activated on schedule.

For less custom-heavy cases, to avoid this mistake you can:

- Use automatic cache invalidation mechanisms that trigger when CMS or back-end data changes. For example, you can configure invalidation using Redis Pub/Sub like this: redis.publish("invalidate_cache", product_id). All subscribed services will immediately refresh the outdated data.

- Apply a "cache warming" strategy to preload updated content after the cache is cleared. Right after invalidation, send a background API request and load fresh data directly into the cache. That way, users always get up-to-date content without delay.
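The two practices above can be sketched together as follows. To keep the example runnable without a server, it uses a small in-process message bus (InProcessBus, a hypothetical stand-in) in place of Redis Pub/Sub; in a real deployment the publish and subscribe calls would go to the broker instead. PriceCache and the loader function are also illustrative names.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class InProcessBus:
    """Stand-in for a Pub/Sub broker so the sketch runs without a server."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Callable[[str], None]) -> None:
        self._subscribers[channel].append(handler)

    def publish(self, channel: str, message: str) -> int:
        # Deliver the message to every handler and report how many received it.
        handlers = self._subscribers[channel]
        for handler in handlers:
            handler(message)
        return len(handlers)


class PriceCache:
    """Caches prices and reacts to invalidation messages by re-warming."""

    def __init__(self, bus: InProcessBus, load_price: Callable[[str], int]) -> None:
        self._store: Dict[str, int] = {}
        self._load_price = load_price
        bus.subscribe("invalidate_cache", self.on_invalidate)

    def get(self, product_id: str) -> int:
        if product_id not in self._store:
            self._store[product_id] = self._load_price(product_id)
        return self._store[product_id]

    def on_invalidate(self, product_id: str) -> None:
        # Drop the stale entry, then warm the cache right away so the next
        # visitor does not pay for the reload.
        self._store.pop(product_id, None)
        self._store[product_id] = self._load_price(product_id)


database = {"sku-1": 100}
bus = InProcessBus()
cache = PriceCache(bus, lambda product_id: database[product_id])

cache.get("sku-1")                       # first read fills the cache
database["sku-1"] = 80                   # the sale price goes live
bus.publish("invalidate_cache", "sku-1")
print(cache.get("sku-1"))
```

Warming inside the invalidation handler is a design choice that suits a small number of hot keys; for bulk invalidations it is usually safer to queue the reloads so they do not all hit the database at once.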

3. Relying only on traditional caching approaches

Caching truly demands expertise because not everything should be cached the same way, especially when it all lives on a single page. Static elements like product descriptions and images are safe to store for longer, but dynamic content, such as inventory levels or personalized pricing, must be updated in real time. Trying to cache a page like that with a one-size-fits-all approach risks either showing users outdated information or constantly invalidating the cache, adding unnecessary load.

The Product Details Page is a textbook case. It blends static and dynamic elements into one interface, making it sensitive to caching missteps. The solution lies in precision: cache what’s safe, update what’s critical.

When we faced this challenge on a PDP for a major telecom provider, we broke the page down into modular components and applied tailored caching logic to each. Static sections were safely cached, while dynamic data was handled through optimized queries and real-time retrieval.

One of the key improvements was integrating Solr on the PDP — an uncommon move, as Solr is typically reserved for category or search pages. By using Solr to fetch product variant information, we accelerated access to the most data-heavy parts of the page.

On top of that, we reworked the Flexible Search queries, cutting down execution times and improving back-end responsiveness. These optimizations made the PDP load 8x faster while preserving accurate product details and seamless performance.

4. Inefficient cache implementation

While caching is meant to boost performance, applying it indiscriminately can not only consume unnecessary memory but even slow down response times. Misjudging what to cache or not leveraging caching enough often leads to missed opportunities for system optimization.

For example, a global healthcare tech firm’s system reloaded thousands of configuration parameters for every request, even though most values rarely changed. This constant scanning put heavy pressure on the database and led to performance degradation.

Since the data was rarely updated, it was a clear signal that caching could bring value here. By analyzing query patterns, we implemented adaptive caching at the API level, storing frequently used settings in memory with adjustable TTLs.

This back-end optimization solution eliminated redundant database scans and cut response times, allowing the platform to support more users without performance drops.
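A minimal sketch of this kind of in-memory cache with adjustable TTLs might look like the following. It is not the client's implementation: the TTLCache class, the loader callback, and the default TTL of 300 seconds are assumptions chosen for illustration.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Keeps loaded values in memory until their time-to-live runs out."""

    def __init__(
        self,
        loader: Callable[[str], Any],
        default_ttl: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader            # e.g. a function that queries the database
        self._default_ttl = default_ttl
        self._clock = clock              # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[Any, float]] = {}

    def get(self, key: str, ttl: Optional[float] = None) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]              # still fresh: no database access
        # Missing or expired: reload once and remember the new expiry time.
        value = self._loader(key)
        lifetime = ttl if ttl is not None else self._default_ttl
        self._entries[key] = (value, now + lifetime)
        return value


settings = {"tax_rate": "0.2", "max_cart_items": "50"}
config_cache = TTLCache(loader=lambda key: settings[key], default_ttl=600.0)
print(config_cache.get("tax_rate"))
print(config_cache.get("max_cart_items", ttl=60.0))  # shorter TTL for this setting
```

The per-call ttl argument is what makes the TTL adjustable: settings that change more often can be given a shorter lifetime without touching the rest.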

However, just caching data isn’t enough. When cache memory is limited, the system must decide which data to remove when new data arrives. This is where cache eviction policies come in:

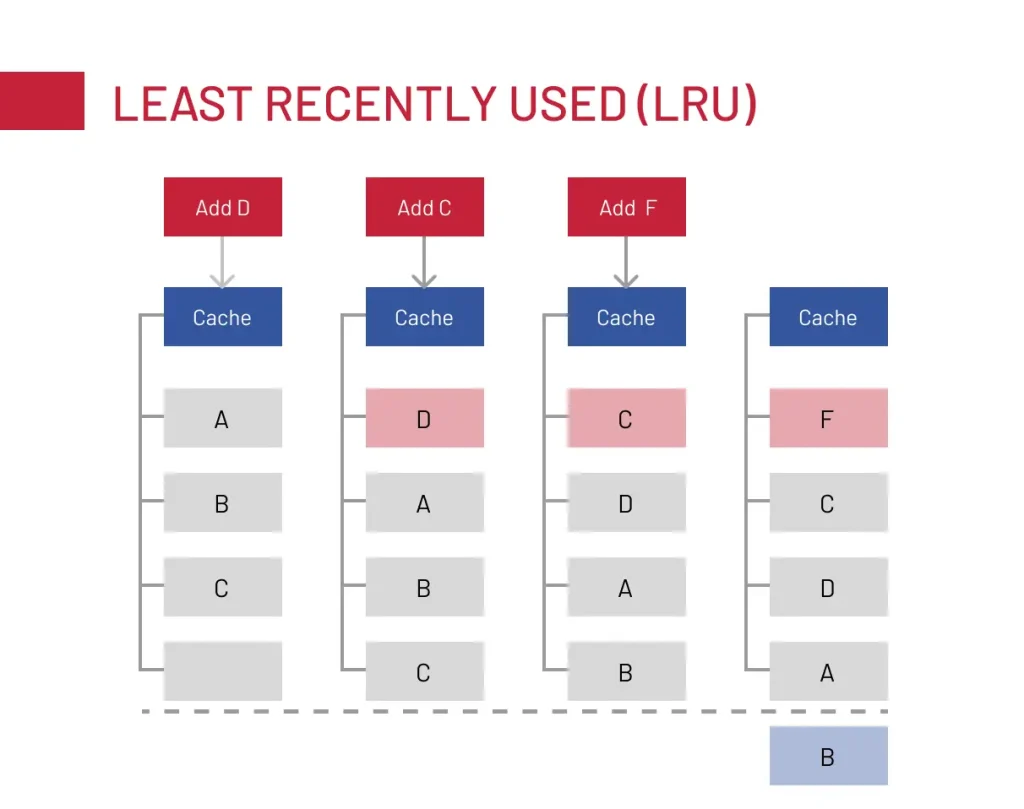

- Least Recently Used (LRU) — removes the least recently accessed items first, best for data with temporal locality, where recently accessed data is likely to be needed again soon. For example, items that have been recently interacted with should stay in the cache longer.

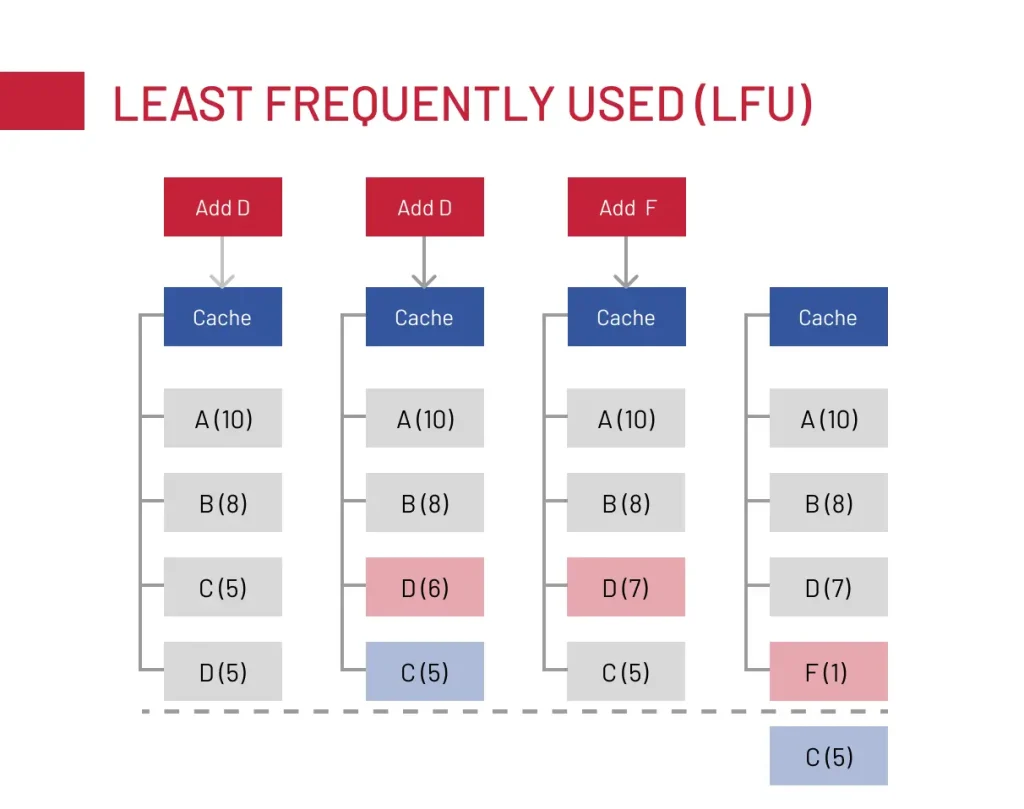

- Least Frequently Used (LFU) — removes the least accessed items over time. This approach works well for long-term relevance-based caching, where some items remain consistently popular, such as homepage banners or top-selling products.

To sum up, if recency matters, as with user sessions or browsing history, choose LRU. If frequency matters more, opt for LFU. Some systems support both: Redis, for example, offers approximated LRU and LFU eviction policies (such as allkeys-lru and allkeys-lfu), and its LFU counters decay over time, so recency still plays a part.
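To make the difference concrete, here is a small sketch of both policies applied to the same access pattern. The LRUCache and LFUCache classes are simplified illustrations (the LFU version scans for the least-used key, which is fine for a demo but not for large caches), not production implementations.

```python
from collections import Counter, OrderedDict


class LRUCache:
    """Evicts the entry that was accessed longest ago."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: OrderedDict = OrderedDict()

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)         # mark as most recently used
        return self._items[key]

    def put(self, key, value) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the least recently used

    def keys(self):
        return list(self._items)


class LFUCache:
    """Evicts the entry with the fewest accesses (oldest entry wins ties)."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: dict = {}
        self._hits: Counter = Counter()

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._hits[key] += 1
        return self._items[key]

    def put(self, key, value) -> None:
        if key not in self._items and len(self._items) >= self._capacity:
            victim = min(self._items, key=lambda k: self._hits[k])
            del self._items[victim]
            del self._hits[victim]
        self._items[key] = value
        self._hits[key] += 1

    def keys(self):
        return list(self._items)


lru, lfu = LRUCache(2), LFUCache(2)
for cache in (lru, lfu):
    cache.put("banner", 1)
    cache.put("shampoo", 2)
    cache.get("banner")
    cache.get("banner")
    cache.get("shampoo")
    cache.put("serum", 3)    # forces one eviction

print(lru.keys())  # ['shampoo', 'serum']: 'banner' was touched longest ago
print(lfu.keys())  # ['banner', 'serum']: 'shampoo' had the fewest accesses
```

The same traffic leads to different evictions: LRU drops the frequently read banner because it was not the latest item touched, while LFU keeps it because it is the most popular.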

5. Use of outdated caching mechanisms

The rule is simple: what worked for caching yesterday might be dragging your platform down today. As your ecommerce website ages, an evolving system architecture and changing data relationships can render legacy caching solutions useless, or even counterproductive.

One of our clients ran into this exact website cache issue. Their platform was underperforming due to outdated caching logic and a build-up of redundant queries and code dependencies that no longer matched the system’s current structure.

We examined their caching configuration and identified inefficiencies that were hurting performance. By refactoring outdated policies, removing duplicate queries, and streamlining data fetches, we reduced database load and enhanced response times.

To keep the system healthy moving forward, we also introduced real-time cache usage logging and built mechanisms for efficient load handling during peak traffic, preventing future slowdowns before they start.

6. Lack of cache monitoring

Caching isn’t a “set it and forget it” mechanism. At the very least, it requires constant monitoring. Without real-time oversight and regular adjustments, it can do more harm than good, causing data inconsistencies, improper expiration, and unnecessary resource consumption.

Improper TTL values are among the most common pitfalls. If the TTL is too low, the cache refreshes too often, defeating its purpose. If it’s too high, users get stuck with outdated results. The solution is dynamic TTLs that vary by data type: frequently changing data such as stock and prices gets a shorter TTL, while static resources like images stay cached for longer.

A simple rule of thumb helps define the right TTL:

- Static content (images, product descriptions, general CMS content) — high TTL, from hours to days.

- Semi-dynamic content (pricing, stock levels, user sessions) — medium TTL, from minutes to hours.

- Highly dynamic content (live inventory, personalized recommendations, flash sales data) — low TTL, from seconds to minutes.
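The rule of thumb above can be captured in a small lookup, sketched below. The concrete numbers (one day, fifteen minutes, thirty seconds) and the content type names are hypothetical picks from within the stated ranges and would need tuning per platform.

```python
from enum import Enum


class ContentClass(Enum):
    STATIC = "static"
    SEMI_DYNAMIC = "semi_dynamic"
    HIGHLY_DYNAMIC = "highly_dynamic"


# Hypothetical values chosen from the ranges in the rule of thumb.
TTL_SECONDS = {
    ContentClass.STATIC: 24 * 60 * 60,   # hours to days
    ContentClass.SEMI_DYNAMIC: 15 * 60,  # minutes to hours
    ContentClass.HIGHLY_DYNAMIC: 30,     # seconds to minutes
}

# Illustrative mapping from content type to class.
CONTENT_CLASSES = {
    "product_image": ContentClass.STATIC,
    "product_description": ContentClass.STATIC,
    "price": ContentClass.SEMI_DYNAMIC,
    "stock_level": ContentClass.SEMI_DYNAMIC,
    "live_inventory": ContentClass.HIGHLY_DYNAMIC,
    "flash_sale": ContentClass.HIGHLY_DYNAMIC,
}


def ttl_for(content_type: str) -> int:
    """Return the TTL in seconds for a content type."""
    # Unknown types get the shortest TTL, erring on the side of freshness.
    content_class = CONTENT_CLASSES.get(content_type, ContentClass.HIGHLY_DYNAMIC)
    return TTL_SECONDS[content_class]


for name in ("product_image", "price", "flash_sale"):
    print(name, ttl_for(name))
```

Keeping the classification in one table makes TTL changes a configuration edit rather than a code hunt across services.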

Tools like Datadog or Prometheus help monitor cache hit ratios and TTL efficiency, while real-time alerts catch issues before they impact users.
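Whatever tool collects the numbers, the underlying metric is simple. The sketch below tracks hits and misses and flags a low hit ratio; the CacheStats class, the 0.8 threshold, and the minimum sample size of 100 are assumptions for illustration, and in practice these counters would be exported to the monitoring system rather than checked in process.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0

    def record(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def total(self) -> int:
        return self.hits + self.misses

    @property
    def hit_ratio(self) -> float:
        # Share of lookups served from the cache; 0.0 before any traffic.
        return self.hits / self.total if self.total else 0.0


def needs_alert(stats: CacheStats, min_hit_ratio: float = 0.8, min_samples: int = 100) -> bool:
    """Flag a low hit ratio, but only once enough lookups have been seen."""
    return stats.total >= min_samples and stats.hit_ratio < min_hit_ratio


stats = CacheStats()
for _ in range(70):
    stats.record(hit=True)
for _ in range(30):
    stats.record(hit=False)

print(f"hit ratio: {stats.hit_ratio:.2f}, alert: {needs_alert(stats)}")
```

The minimum sample size avoids false alarms right after a deployment or cache flush, when a low hit ratio is expected.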

To Sum Up

Caching can do wonders for ecommerce performance, but only when done right. Missteps like unsynchronized cache layers, poorly tuned TTLs, and outdated invalidation logic often turn those promising speed gains into frustrating bottlenecks.

Resolving these issues means using hybrid caching, real-time monitoring, and adaptive TTLs to prevent stale data, redundant queries, and performance degradation, keeping your data fresh and your platform fast.

Every issue covered in this article is something Expert Soft’s team tackled firsthand on high-traffic ecommerce platforms. So if caching issues are holding your system back, we know exactly how to fix them and are here to help. Let’s talk.

FAQ

- What are common cache issues?

Common website caching issues include stale content caused by incorrect invalidation, caching strategies that don’t match the type of content, and data inconsistency between cache layers. Inefficient cache implementation strains databases, while outdated mechanisms create performance bottlenecks.

- What is the best caching strategy?

The optimal strategy varies with needs, but common approaches include cache-aside, where the application checks the cache first and falls back to the database on a miss, and write-through, where data is written to both the cache and the database as part of the same operation. Combining strategies is often crucial.
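As a rough illustration of how the two strategies combine, the sketch below uses cache-aside for reads and write-through for writes. Plain dictionaries stand in for the cache and the database, and the ProductStore class is a hypothetical name.

```python
from typing import Any, Dict


class ProductStore:
    """Cache-aside reads combined with write-through writes."""

    def __init__(self, database: Dict[str, Any]) -> None:
        self._database = database          # stand-in for the real database
        self._cache: Dict[str, Any] = {}   # stand-in for the cache layer
        self.database_reads = 0

    def read(self, key: str) -> Any:
        # Cache-aside: check the cache first, go to the database on a miss,
        # then keep the result for the next reader.
        if key in self._cache:
            return self._cache[key]
        self.database_reads += 1
        value = self._database[key]
        self._cache[key] = value
        return value

    def write(self, key: str, value: Any) -> None:
        # Write-through: update the database and the cache in the same
        # operation so reads never see the old value.
        self._database[key] = value
        self._cache[key] = value


store = ProductStore({"sku-1": {"price": 100}})
store.read("sku-1")                     # miss: loaded from the database
store.read("sku-1")                     # hit: served from the cache
store.write("sku-1", {"price": 80})     # both layers updated together
print(store.read("sku-1"), store.database_reads)
```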

Andreas Kozachenko, Head of Technology Strategy and Solutions at Expert Soft, has over 15 years of experience optimizing ecommerce performance. His deep technical expertise helps identify and prevent caching pitfalls that can impact speed, scalability, and user experience.
